1. Introduction

There are plenty of origin stories for a company’s decision to invest in sustaining and improving the effectiveness of its engineering organization. Sometimes it’s a simple conversation among leaders: “Is it just me, or did we ship things faster in the past?” Sometimes it’s preceded by painful failure: “We missed most of our objectives last half, and product and engineering are pointing fingers at each other.” And sometimes, it’s driven more by curiosity about an opportunity than an acute or targeted need: “I’ve heard of SPACE and DORA, and I think they could help us.”

Each of these origin stories — and every other story that eventually leads a person like you to read a book like this — has a unique motivation. How the problem is stated tells you much about the underlying issues you’ll find when you dig into the situation. It’s relatively easy to adopt a new approach when you can operate with curiosity and a mindset of continuous improvement, but it’s much more challenging when you’re trying to solve an acute problem within the constraints of a company’s current size, age, and culture.

This book aims to collect the best practices of software product development, drawing on lean principles, modern product and project management principles, systems thinking, and much more. Much has been written on these individual topics across various books — see our recommended reading at the end of each of the following chapters — but here, we attempt to pull it all together into a coherent framework for running a software organization.

How we approach effectiveness

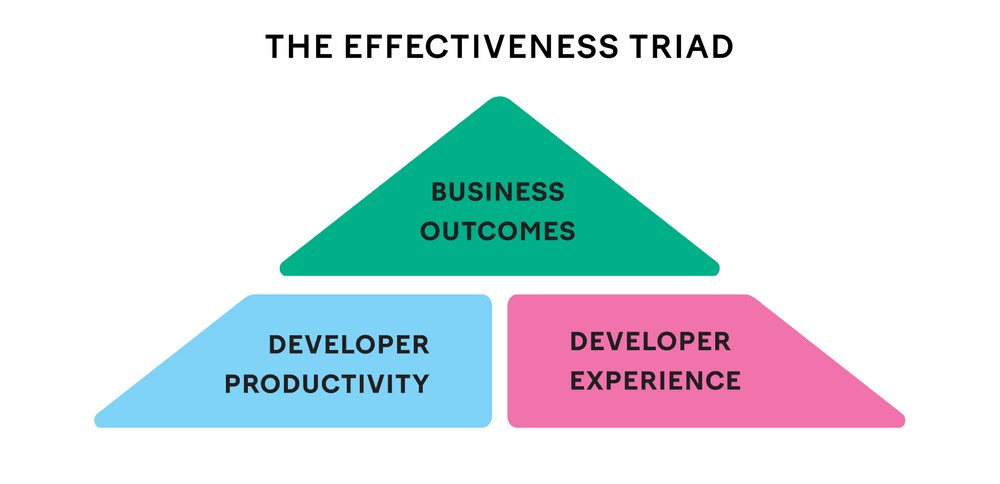

We like to think of effectiveness by breaking it down into three concepts: business outcomes, developer productivity, and developer experience. Delivering business outcomes is the ultimate goal of any software organization. Once you know where you’re headed, developer productivity is about getting there quickly. Developer experience is about discovering how you might increase the continuous time an engineer can focus on valuable work while remaining satisfied and engaged with their job.

Many discussions of engineering effectiveness focus on just one of these concepts without recognizing that they are all intertwined. In this book, we look at each area individually and then discuss how to bring them together into a coherent and actionable plan for improvement.

Business outcomes

A fundamental challenge of delivering a successful product is intelligently allocating finite resources to seemingly infinite problems and opportunities. The decisions involved here are difficult at any organization size, and they aren’t limited to software engineering. Organizational design plays a huge role in how well a business can achieve its goals. There’s a real risk of trying to do too many things at once, with the inevitable result that few of them get done well, if at all. In larger organizations, these decisions often happen organically and implicitly, with fuzzy lines of accountability and no clear overarching picture of who’s spending time (and money) on what.

Effective software organizations focus their investments on the right outcomes.

Developer productivity

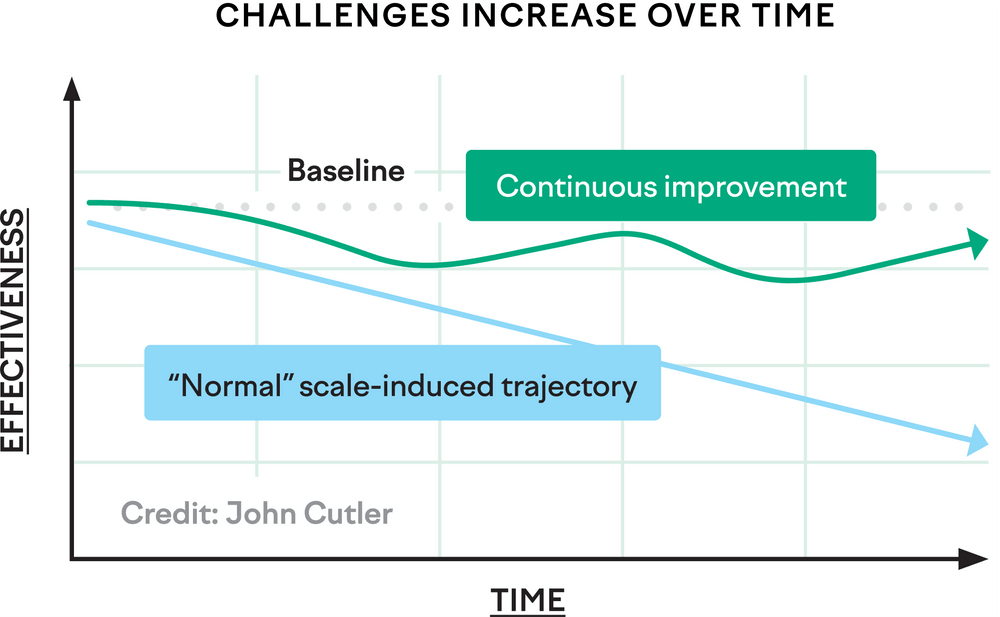

Without intention and intervention, the pace of shipping value will decline over time, and doing what has always worked won’t always keep working. Engineering leaders are increasingly held accountable for the value their organizations deliver — and they are increasingly at risk of people outside engineering deciding how to quantify this value. The processes that move work through an engineering organization — ideally creating customer value at the end — are evolving, emergent, and often difficult to inspect or understand. As an organization grows larger, the leverage points to drive improvement move from the team to the organization as a whole, and the forces that speed or impede delivery become more varied and broadly distributed.

Effective software organizations make fast and consistent progress toward their goals.

Developer experience

Developer experience is arguably the other side of the developer productivity coin, and it can be hard to separate the two. Developer experience focuses on what it’s like to work within your organization’s code and deliver its software. Developer experience efforts should emphasize eliminating wait time and interruptions, ensuring that your codebase isn’t making work harder than it needs to be.

Effective software organizations give engineers the support and tools they need to feel engaged.

How we talk about teams

Throughout the book, we use a few words consistently to describe the scope of a situation, problem, or solution.

- The business. The overarching entity that pays the bills. It encompasses the engineering organization as well as other functions such as sales, marketing, customer support, finance, and more.

- The organization or the engineering organization. The group of people responsible for delivering technical solutions to achieve business objectives, including software engineers, product managers, product designers, and other supporting roles.

- The group. A group of related engineering teams, usually led by a director, that’s part of a larger engineering organization.

- The team. A cross-functional group of people focused on delivering technical solutions to specific business problems, usually in the context of a specific problem, product, or persona.

Setting the stage for an effectiveness effort

If creating an effective software organization is A Thing You Should Care About in your role — whether you’re a line manager or the CTO — it’s good to ask yourself a few questions to prepare for the conversation ahead.

- Why is this important? What’s motivating the company to spend time on this topic? How does it beat out other goals? How high up does the plan go?

- Why is this important now? Software organizations can always be more effective, but now is suddenly the time you’re paying attention. What changed?

- What have you tried so far? How did you decide you needed to do something else?

- What metrics are you tracking today? Where are they falling short? How are they changing over time?

Smaller companies may still need to nail the delivery fundamentals at the team level, while larger companies may form dedicated teams to standardize, automate, and speed up development processes. At a certain size, it takes effort just to sustain the same amount of productivity; even if the engineering headcount isn’t growing, the codebase is, and quickly. As a company grows, its investment in its continued effectiveness needs to grow too: the later that investment starts, the more debt must be paid down. Eventually, you’ll consider a dedicated platform team to keep that software development ecosystem humming as you continually accumulate lines of code.

A company’s culture determines the likely pace, breadth, and “stickiness” of its improvements. Companies that highly value team technical autonomy face different challenges than companies with standardized tooling and centralized processes. The depth of trust throughout the leadership chain will influence how readily people embrace productivity efforts, and the company’s engineering ladder will play a big part in who raises their hand to do the work. When thinking about how to drive change, don’t pick fights with the culture. Instead, use it to your advantage whenever you can and reshape it (gently) only when you must.

Answering the following questions will deepen your understanding of how these three factors (size, age, and culture) come into play.

- What does “better” look like? Your engineering effectiveness investment proposal was approved. It’s two years later, and everything is better. You can’t believe you used to spend time doing … what? What has changed? Looking at the current reality, what’s kept you from making these changes?

- Who benefits when we achieve better? This is a trick question because the answer is “everyone,” from product to sales, customer support to engineering to users. Where will you find reliable allies and champions for more effective delivery — even if it comes at some near-term costs such as slower delivery of bug fixes and new features?

- What kinds of potential changes are in scope? Does the business think of this as an engineering problem, a business problem, or both? What is the scope of the most senior person who will sponsor necessary change, even if it has near-term costs? Who will warm up to the cause after a couple of success stories?

- What are the biggest obstacles you expect? Now is not the time for rosy optimism. Talk openly with anyone who wants to pitch in about what will be hard. Maybe two different engineering organizations aren’t aligned on what’s important; maybe you expect the CEO to defer to product priorities instead. Maybe everyone’s on the same page but you worry that procuring a tool will take six months. This sort of effort can go sideways in many regards, so anticipate whatever you can.

Each company takes its own path to arrive at the start of its productivity journey, and the path it follows after that will likewise be unique. There is plenty to learn from what others are doing, plenty we can standardize as an industry, and plenty you can discover from this book.

Anyone who tells you there’s One True Way is lying. The way to improve your situation will be unique to the size, age, and culture of the company in which you operate.

Measurement and goal-setting

Being a software leader would be a lot easier if we didn’t have to figure out whether we were doing a good job. Of course, every data-driven bone in our bodies says we need to measure something to know we’re going in the right direction, and every company leader would likely agree.

We’ll delve into specific measurements in the upcoming chapters, but a few caveats generally apply to measuring things in this space.

First, it’s easy to get bogged down in figuring out how to measure the impact of something rather than doing The Thing. With enough time and code, you’ll probably get there, but remember to ask yourself whether that time is worth the benefit. Sometimes, all you need to do is 1) make sure no one thinks The Thing is a terrible idea, 2) do The Thing, and 3) check in with your users or stakeholders to see whether they noticed that you did The Thing. Don’t fall into the trap of delaying action — and thereby delaying benefit — just because you haven’t yet worked out how you’ll count something. Be prepared to advocate for and celebrate clear-if-unmeasurable wins.

Second, metrics — especially qualitative ones — can be difficult to interpret correctly and consistently. The space is full of both lagging indicators of success and indicators that can be hard to trust because they’re biased by a moment in time. Self-reported satisfaction scores, for example, are deeply subject to moment-in-time bias, even to the whims of traffic on the commute to work that day. They can drop quickly and tend to recover slowly. Decisions on how you slice your data can also hide problems. An average metric might overemphasize outliers, while p50 can hide pathological cases at p99. On the other hand, looking at p99 all the time can lead to optimizations that benefit relatively few use cases.
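To make the point about slicing concrete, here is a minimal sketch that compares the mean, p50, and p99 of the same data set. The build durations are invented for illustration, and `percentile` is a hypothetical helper using the simple nearest-rank method, not a function from any particular metrics tool.

```python
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    # Integer ceiling division avoids floating-point rounding surprises.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


# Hypothetical CI build durations in minutes: 97 typical builds and 3 pathological ones.
build_minutes = [10] * 97 + [240] * 3

mean = statistics.mean(build_minutes)
p50 = percentile(build_minutes, 50)
p99 = percentile(build_minutes, 99)

print(f"mean={mean:.1f} p50={p50} p99={p99}")
```

In this made-up data set, the mean (16.9 minutes) sits well above what 97% of builds experience, the p50 (10 minutes) shows no sign of the four-hour builds, and the p99 (240 minutes) describes only three builds in a hundred. No single number tells the whole story, which is why it helps to look at more than one slice.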

Third, there’s a fine balance between metrics that guide improvements and those that make people perceive a lack of trust. However, this tension shouldn’t stop you from measuring. Instead, it emphasizes the need to be open and transparent about the data you collect, how you collect it, and how you use it to evaluate individuals and teams. Be transparent with individual contributors (ICs) about what you’re measuring and how it will be used. Make it easy for them to see the data they’re contributing.

Finally, remember that these kinds of metrics work best as conversation starters and pretty terribly as comparison metrics when there’s a change in context — for example, a staffing change or a change in priorities. The conversations the metrics drive will differ from team to team — teams tend to have meaningful differences in their skill sets, tenure, seniority, codebases, complexity, and so much more that it becomes irresponsible to compare them head to head.

With these caveats in mind, you can see how goal-setting around any metric in the effectiveness domain will likely have some gotchas. Be especially wary of setting goals around human-reported metrics — for example, a developer satisfaction metric or one that counts how often engineers complain about something.

Choosing metrics and tracking progress

The desire for measurement can paralyze an effectiveness effort. Metrics are valuable, but a lack of them shouldn’t block progress on well-known problems.

Decisions on how and whether to measure something should be the output of a thoughtful and deliberative process about what better would look like. It’s okay if some of your ambitions are intangible, such as “Deploy issues shouldn’t dominate our next developer survey.”

The Goals, Signals, and Metrics framework is helpful here — and note that metrics come last.

- Goals focus on outcomes, not the anticipated implementation.

- Signals are things that humans can watch for to know if you’re on track.

- Metrics are the actual things you measure and report on to track progress toward the goal.

In this framework, you first agree that there’s a problem worth solving. Then, you set a goal that, if achieved, would be clearly understood as progress toward solving the problem. Next, you have the “I know it when I see it” conversation — what statements, if true, would have everyone nodding in agreement that you were progressing? These are your signals.

Finally, you arrive at the metrics, but again, a word of caution: don’t strain to measure something when broad agreement about the existence of a clear signal would be sufficient to declare success, or when the change has another, more notable business impact. Measuring your development process yields a lot of value over time, but not all aspects of productivity can be measured conveniently, if at all.

Working on a goal often starts by establishing a baseline for the current reality. Stay focused on the desired outcome, not the metric or tactic. Keep your focus on making things easier for engineers, use that focus to motivate increased observability of processes, execute on the opportunities you find, and know that quantitative data will sometimes disagree with the stories you’ve been told.

You may initially find it difficult to set a specific target for the metrics, and that’s not just okay but expected. When tackling a problem, focus on trends — up or down and to the right as appropriate. If you continue to focus on the issue over subsequent quarters, you’ll have more information to set targets or acceptable thresholds.
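One way to picture "baseline first, trend before target" is a small comparison of recent samples against an earlier baseline. This is only a sketch under stated assumptions: the function name `trend`, its `lower_is_better` parameter, and the weekly deploy durations are all hypothetical, and comparing medians is just one reasonable choice.

```python
from statistics import median


def trend(baseline, recent, lower_is_better=True):
    """Compare the median of recent samples against the median of the baseline."""
    change = median(recent) - median(baseline)
    if change == 0:
        return "flat"
    improved = change < 0 if lower_is_better else change > 0
    return "improving" if improved else "regressing"


# Hypothetical weekly median deploy durations, in minutes.
last_quarter = [42, 45, 40, 44, 43]
this_quarter = [41, 38, 37, 35, 36]

print(trend(last_quarter, this_quarter))  # prints "improving"
```

Note that nothing here requires a numeric target. The direction of change is enough to start a conversation, and a threshold can come later, once a few quarters of data exist.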

A note on frameworks

There are numerous frameworks to help you improve your software organization — SPACE and DORA are a couple that are currently in fashion. Each framework is useful in its own way, and they’re all worth knowing about, but none tell you what to do in your particular situation. None of them can claim to offer a single metric that you can observe and set goals around — in fact, only DORA is particularly prescriptive about any metrics at all.

If you approach the productivity space with a mindset of “I need to implement DORA” or “We’ll just follow SPACE,” you’ll likely have difficulty driving meaningful change. Frameworks offer a way of thinking about a problem, not a to-do list. They’re a skeleton on which you hang some ideas that will turn into a plan, which you’ll then implement and iterate upon.

A framework can also offer guardrails against counterproductive decisions if stakeholders agree to it on principle. For example, a core tenet of SPACE is that metrics that span the framework can often be in tension with one another. This tenet can be a good reminder when metrics aren’t moving the way you might have expected.

Table stakes

Any effectiveness effort becomes significantly easier if you adopt and embrace a few proven principles. These principles are so essential that we’ll revisit them throughout the rest of this book, whose guidance will be of limited use if you don’t also embrace or move toward these principles in your organization. Indeed, if your engineering organization struggles to be effective, at least one of these principles is probably absent.

- Empowered teams. When teams can make autonomous decisions about their work, organizations can respond more quickly to changes, improve motivation, and ship solutions more likely to meet customer needs. When teams must rely on others to make progress, their effectiveness suffers.

- Rapid feedback. Quick and frequent feedback enables rapid learning and adjustments. This agility helps better align the product with market needs and customer expectations. When you have weeks-long feedback cycles, a lot can go wrong between check-ins.

- Outcomes over outputs. Focus on the value and impact (outcomes) of engineering work rather than just the volume or efficiency of deliverables produced (outputs). This ensures that development efforts actually contribute to business goals and customer value.

Let’s dig into each of these in more detail to see what they look like in practice.

Empowered teams

Empowering teams means delegating decision-making authority to those closest to the work. Providing teams with the necessary context and trusting them to make informed decisions can enhance efficiency and motivation.

Consider a scenario where a software development team regularly encounters delays due to a cumbersome and outdated deployment process. Instead of management dictating a specific solution, empowering the team would involve giving them the authority to research, propose, and implement a new deployment strategy. This could include choosing new deployment tools, redesigning the deployment pipeline, or adopting new practices like continuous deployment.

This approach recognizes that the team that knows the deployment process is best positioned to improve it based on their experience. It also makes the team more invested in the outcome than if there’s just a top-down mandate. Allowing the team to experiment and take risks can lead to more innovative solutions than if decisions are made solely by management. It also speeds up decision-making, as there’s no need for multiple rounds of external approval and feedback.

Note that this doesn’t mean all decisions should or will fall to individual teams; some decisions properly belong at the organization or even business level. An empowered team will still feel confident offering input and feedback on those decisions.

Rapid feedback

Rapid feedback can include frequent automated testing, continuous integration, code review, stakeholder reviews, and many other moments in the software development lifecycle where you need information to decide whether to proceed or change course.

Delayed feedback results in rework, wasted time and effort, and missed opportunities. We get feedback from our tools, our peers, our stakeholders, and our customers; according to this principle, we want to solicit this feedback regularly and frequently rather than bundling up large chunks of work for one cumbersome and time-consuming mega-review.

When there is a need for an approval or review process, one of the best ways to ensure rapid feedback is to establish a feedback cadence so that there is never a large backlog of work to be reviewed. When you review smaller batches, feedback on earlier batches can inform later ones, so you don’t finish a large batch of work only to learn at feedback time that you’ve missed the mark.

Outcomes over outputs

Goals and success measurements should be based on outcomes, such as customer satisfaction or market share, rather than outputs, such as the number of features released, bugs closed, or story points completed. Incentivizing teams based on output volume can steer them to invest in quantity over business impact.

When you align team objectives with business outcomes and use metrics that reflect these outcomes, you encourage innovation and creative problem-solving, ensuring that the work contributes effectively to the organization’s goals.

The table below highlights key differences between the two approaches.

| Aspect | Output-based approach | Outcome-based approach |
| --- | --- | --- |
| Definition of success | The quantity of what is produced, such as features, documentation, or lines of code. | The impact on customer behavior and business results, such as improved customer satisfaction or increased sales. |
| Key metrics | Measures include the number of features deployed, code commit frequency, and deadlines met. | Measures include customer engagement metrics, conversion rates, market share, and revenue growth. |
| Development focus | Focus is on executing a predefined set of tasks and deliverables. | Focus is on validating hypotheses about customer needs and business value by delivering the smallest viable increment. |
| Feedback loop | Feedback is often related to whether the product is delivered on time and within budget. | Feedback is based on how well the product changes user behavior or improves key business metrics. |
| Decision-making | The progress of deliverables drives decisions according to the project timeline. | Decisions are driven by data and insights about what will move the needle on desired outcomes. |
| Approach to change | Changes are often viewed as a setback or a sign of planning failure. | Changes are viewed as opportunities to learn and pivot toward more impactful results. |
| Team alignment | Teams may work in silos, with each department focusing on their own set of deliverables. | Cross-functional teams work collaboratively, with a shared understanding that the goal is to achieve the desired outcomes. |
| Response to failure | When a feature or project does not meet the specifications or deadlines, it is considered a failure. | Failure is viewed as a learning opportunity that informs the next iteration and brings the team closer to achieving the outcomes. |

What we left out

There are a few topics you might expect to see in a book like this that aren’t present — leadership, performance management, and compensation, to name a few. This was a deliberate choice to keep the book focused on the interaction among business outcomes, developer productivity, and developer experience.

Of course, leadership, performance management, and compensation do play a role in the satisfaction of your engineers, just like the technical tools they use. Although we don’t address these topics at length, keep in mind that they can all be levers for improvement.

What to expect from this book

So far, we’ve surveyed the engineering effectiveness landscape and all the factors likely to make your situation inconveniently unique. We also looked at three ways of working — empowered teams, rapid feedback, and outcomes over outputs — that are key to any effectiveness effort.

In the next three chapters, we’ll look at each of the areas of effectiveness we outlined above: business outcomes, developer productivity, and developer experience. In these chapters, we’ll share guidance that’s broadly applicable despite company differences. We’ll conclude with a chapter that offers a loose roadmap encompassing all three areas to address organization-wide improvements in effectiveness.

Let’s get to work.