3. Developer productivity

Effective software organizations make fast and consistent progress toward their goals.

The unfortunate reality about complexity in software is that if you just continue doing what you’ve always been doing, you’ll keep slowing down. When starting a fresh project, you’ll be surprised by how much you can accomplish in a day or two. In some other, more established environments, you could spend a week trying to get a new database column added.

Many things that slow down work are systemic, not individual. Even the most talented engineer might not fully understand how much time is wasted when work is bounced between teams, half-completed features are shelved as priorities change, or all the code gets reviewed by just one person. It’s easy to think you’re solving a quality problem by introducing code freezes and release approvals, but you might only be making things worse.

In this chapter, we’ll talk about some of the perils of measuring productivity before we move on to the mechanics of making it happen in a way that’s perceived as broadly beneficial.

But first, let’s talk about the biggest question of all: what is productivity, anyway?

Defining developer productivity

If you ask a group of seasoned engineering leaders to define developer productivity, there will typically be no unified answer. For the purposes of this book, we consider developer productivity in the context of how organizations can minimize the time and effort required in the software delivery process to create valuable business outcomes. We will focus primarily on team- or service-level delivery and eliminating bottlenecks — often process bottlenecks — in the software delivery process.

We’ll also center our conversation on aggregate productivity instead of the efforts and contributions of individuals. A healthy productivity effort may involve automating more parts of the team’s deployment process, addressing flaky tests that cause failing builds, or just getting a team to commit to reviewing open pull requests before starting on their own work. A healthy productivity effort should not, on the other hand, require a certain number of pull requests for each engineer every week. That approach is unlikely to create business value and very likely to create a toxic environment.

Productivity table stakes

Just as we discussed organizational table stakes in the first chapter — empowered teams, rapid feedback, and outcomes over output — there are three clear ways of working that you’ll see on any highly productive team.

- Limited queue depth. Controlling the number of tasks waiting to be processed (also known as a backlog) reduces lead times, improves predictability, and smooths the flow of work, thereby increasing efficiency and reducing the risk of bottlenecks.

- Small batch sizes. Smaller batches of work are processed more quickly and with less variability, leading to faster feedback and reduced risk. This approach enhances learning and allows for more rapid adjustments to the product.

- Limited work-in-progress (WIP). By restricting the number of tasks in progress at any given time, teams can focus better, reduce context switching, and accelerate the completion of tasks, thus improving overall throughput.

Limited queue depth

It’s okay to admit it: we’ve all added a task to a backlog with a vague certainty that it will never get done.

Limiting queue depth means rigorously monitoring and managing the number of tasks awaiting work. This involves implementing systems to track and control the queue size, such as using a Kanban board to visualize work and enforce limits on the number of items in each stage. This principle also means you can’t let backlogs grow unchecked, as this can lead to delays, rushed work, and increased stress.

Regularly review your work queues and adjust priorities to ensure that valuable and time-sensitive tasks are getting addressed promptly. When you encourage teams to complete current tasks before taking on new ones and use metrics like cycle time to identify bottlenecks, you can significantly enhance the flow and efficiency of the development process.

Implementing this in practice usually means limiting the number of tasks awaiting development, review, or deployment at any given time. In addition to providing clarity about what to work on next, this practice also dramatically improves the predictability of delivery once something reaches that initial awaiting development status.

Small batch sizes

Breaking down large projects into smaller, more manageable parts allows for quicker completion of each part, enabling faster feedback and iterative improvements. For instance, deploying completed tasks incrementally rather than releasing a large set at once makes it easier to release more tasks in a given period of time; regressions will tend to be small, readily attributed, and readily fixed without blocking other tasks.

Large batches often complicate integration and make it difficult to track down problems. A continuous delivery model, where small updates are released whenever they’re ready, is a practical application of this principle. Encourage teams to think in terms of small changes, which helps in managing risk and improving the ability to adapt to new information.

Limited WIP

When you introduce and regularly monitor WIP limits, you ensure that teams focus on completing ongoing tasks before starting new ones. Overloading team members with multiple tasks leads to reduced focus and increased cycle times. A culture where teams are encouraged to complete current work before embarking on new tasks improves focus, reduces waste, and speeds up work delivery.

The Kanban process embraces this explicitly, although you don’t need to use Kanban to follow this principle. In that process, the team always focuses on completing its in-flight tasks before starting new ones (a practice sometimes called “walking the board from right to left”), which encourages teammates to help each other before starting a new task. Similarly, Scrum teams limit how much work, often expressed in story points, they take into an individual sprint.

In the absence of WIP limits, a team can quickly start to juggle more than it can reasonably handle, and it’s common for tasks to remain in progress for an extended period even though they aren’t being actively worked upon.
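As a rough illustration of what monitoring WIP limits can look like, here is a minimal Python sketch. The column names, the limits, and the `(task_id, column)` export format are all hypothetical; a real issue tracker will have its own stages and its own way of exporting data.

```python
from collections import Counter

# Hypothetical per-column WIP limits; the numbers are illustrative only.
WIP_LIMITS = {"in_development": 3, "in_review": 2, "awaiting_deploy": 2}


def wip_violations(tasks, limits=WIP_LIMITS):
    """Return {column: (count, limit)} for every column over its limit.

    `tasks` is an iterable of (task_id, column) pairs, for example an
    export from an issue tracker.
    """
    counts = Counter(column for _task_id, column in tasks)
    return {
        column: (counts[column], limit)
        for column, limit in limits.items()
        if counts[column] > limit
    }


# A made-up snapshot of a team board.
board = [
    ("T-1", "in_development"),
    ("T-2", "in_development"),
    ("T-3", "in_review"),
    ("T-4", "in_review"),
    ("T-5", "in_review"),
    ("T-6", "awaiting_deploy"),
]

print(wip_violations(board))  # only "in_review" is over its limit here
```

A check like this is meant to prompt a team conversation ("should we help finish the reviews before pulling new work?"), not to block anyone automatically.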

Productivity vs. quality

A common misconception is that productivity and quality are in tension. If your version of quality is to manually test every change you make and test your whole product before releasing it, there will naturally be tension between the two. Any scenario that relies heavily on manual testing often leads to the creation of more processes — like a definition-of-done checklist on every pull request — further delaying time to value.

Fascinatingly, one of the best ways to achieve developer productivity involves improving the quality of your product through automated testing. If you’re doing productivity right, quality will tend to increase over time, as it becomes easier to ship smaller changes and easier to roll back or disable features.

Broadly, this involves four things.

- Make it easy to write tests. Most programming languages have somewhat standard testing frameworks, and many software frameworks also come with clear patterns for testing. Educate your engineers on how to use these testing tools, making setup easy.

- Make it easy to get the right data. Tests shouldn’t be talking to production to get data, but they need data that’s a realistic simulation of the kind you’d see in production. If you ask individual engineers to solve the data problem independently, their approaches will be varied and surprising (and often quite bad).

- Make it easy to manually test. While you want to limit the amount of manual testing you’re doing, there are lots of situations during the development of a feature where you’d like to be able to kick the tires and see how it works (for example, to show something to a product partner or another developer working remotely). Make it easy to interact with code that’s on a feature branch.

- Make it easy to release (and roll back) small changes. One of the reasons teams get in a position of doing a ton of pre-release manual testing is that the release process itself is so onerous — and the rollback process is worse. Individual tasks stack up so that a release includes dozens of changes and tens of thousands of lines of code. When you make it trivial to release small changes, engineers will start making smaller changes, leading to vastly less risk for any given release.

If you’ve put these pieces in place — which can be harder than it sounds — you’ve given your engineers powerful tools that make their job easier, and you’ve also taken a big step toward a better product. Add a ratchet to CI to make sure test coverage of your code only goes up, and incentivize writing tests and sharing strategies within and across teams.
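The coverage ratchet mentioned above can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the function name, the `tolerance` parameter, and the idea of passing coverage in as a plain percentage are all hypothetical. How you measure coverage and where you store the baseline (a file in the repository, a CI variable, and so on) depends on your tooling.

```python
def ratchet(current: float, baseline: float, tolerance: float = 0.0):
    """Compare the current coverage percentage with the stored baseline.

    Returns (passed, new_baseline). The check passes when coverage has not
    dropped by more than `tolerance` percentage points, and the baseline
    only ever moves up, which is what makes it a ratchet.
    """
    passed = current >= baseline - tolerance
    new_baseline = max(baseline, current)
    return passed, new_baseline


# Coverage improved: the check passes and the baseline moves up.
print(ratchet(current=81.4, baseline=80.0))

# Coverage dropped: the check fails and the baseline stays where it was.
print(ratchet(current=79.0, baseline=80.0))
```

In a CI job, a failed check would typically fail the build, and a passing run on the main branch would write the new baseline back. A small tolerance can help avoid failing builds over rounding noise.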

Once again, team structure (as discussed in Chapter 2) comes into play. Establishing a culture of (automated) quality requires that your teams have sufficient domain knowledge in testing methods for the language or framework being used. Emphasizing automated testing also encourages you to limit the complexity any single team has to deal with, so you in turn limit the surfaces they need to test.

Frameworks for thinking about productivity

There are a couple of frameworks that can be useful when considering the broad topic of productivity.

The DevOps Research and Assessment (DORA) framework has become a standard in the productivity realm for a reason: it offers a set of valuable metrics that shed light on where engineering teams might be able to improve their software delivery. By providing a baseline that captures a team’s current state, DORA sets the benchmark for your team’s processes. The aim isn’t to become obsessed with numbers but to continually evaluate whether you’re satisfied with what the numbers are telling you.

The success of the DORA framework — which originated from work by Nicole Forsgren, Jez Humble, and Gene Kim — lies in its simplicity and ability to capture various aspects of software development through its four core metrics: lead time for changes, deployment frequency, time to restore service, and change failure rate. These metrics are in healthy tension with each other, which means improving one could unintentionally lead to the degradation of another.
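To make the four metrics concrete, here is a minimal Python sketch that computes them from a list of deployment records. The record fields (`committed_at`, `deployed_at`, `failed`, `restored_at`) are hypothetical, as is the choice of the median as the summary statistic. Real data would come from your version control, deployment, and incident tooling.

```python
from datetime import datetime, timedelta
from statistics import median


def dora_summary(deployments, period_days):
    """Summarize the four DORA metrics for one team or service.

    Each deployment is a dict with hypothetical keys:
      committed_at, deployed_at: datetimes
      failed: whether the change caused a failure in production
      restored_at: when service was restored (only for failed changes)
    """
    if not deployments:
        raise ValueError("need at least one deployment in the period")
    lead_times = [d["deployed_at"] - d["committed_at"] for d in deployments]
    failures = [d for d in deployments if d["failed"]]
    restore_times = [
        d["restored_at"] - d["deployed_at"]
        for d in failures
        if d.get("restored_at")
    ]
    return {
        "lead_time_for_changes": median(lead_times),
        "deployments_per_day": len(deployments) / period_days,
        "change_failure_rate": len(failures) / len(deployments),
        "time_to_restore": median(restore_times) if restore_times else None,
    }


# A made-up week with four deployments, one of which caused an incident.
start = datetime(2024, 1, 1, 9, 0)


def record(day, lead_hours, failed=False, restore_hours=None):
    committed = start + timedelta(days=day)
    deployed = committed + timedelta(hours=lead_hours)
    restored = deployed + timedelta(hours=restore_hours) if failed else None
    return {"committed_at": committed, "deployed_at": deployed,
            "failed": failed, "restored_at": restored}


week = [record(0, 4), record(1, 2), record(2, 6, True, 1), record(3, 8)]
summary = dora_summary(week, period_days=7)
print(summary)
```

Computed per team or per service, numbers like these give you the baseline described above. They still say nothing about individuals, which is intentional.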

Of course, there are limitations to the DORA metrics. While they offer a snapshot of your team’s performance, they don’t explain why something might be off. Nor do they tell you how to improve. The DORA framework is not a diagnostic tool; it doesn’t point out bottlenecks in your processes or identify cultural issues inhibiting your team’s effectiveness. It’s much like having a compass — it will tell you what direction you’re headed in, but not what obstacles lie in the way or how to navigate around them.

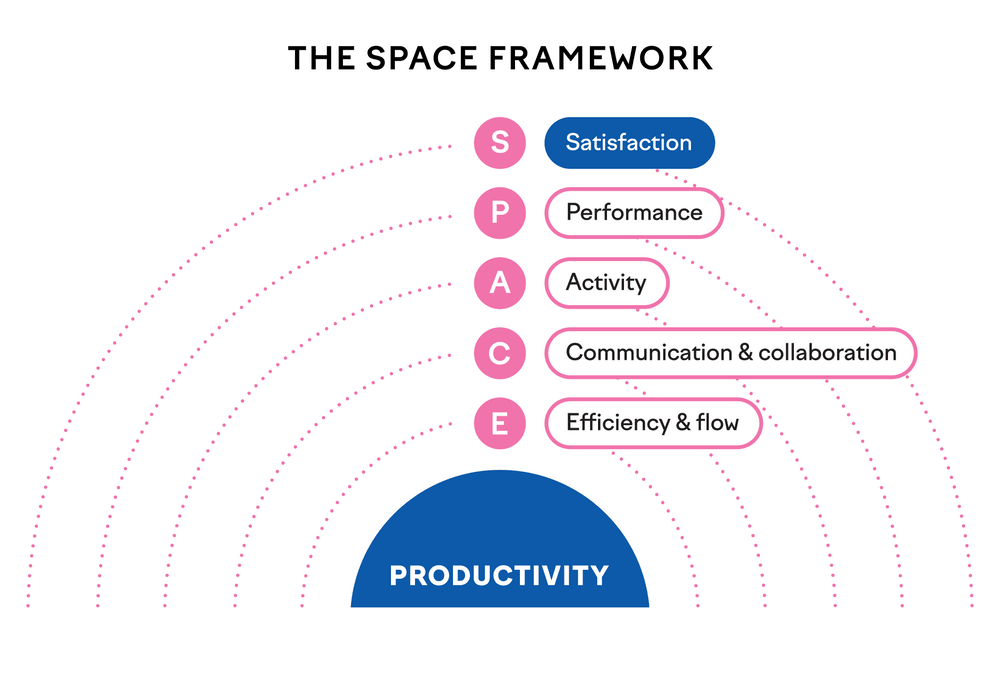

The SPACE framework, developed by Forsgren along with Margaret-Anne Storey, Chandra Maddila, Thomas Zimmermann, Brian Houck, and Jenna Butler, grew out of an attempt to create a more comprehensive tool to capture the complex and interrelated aspects of software delivery and operations. The goal was to create a model that would acknowledge the competing tensions within software development and use those tensions as catalysts for improvement.

Unlike DORA, SPACE embraces both quantitative and qualitative metrics, identifying five critical dimensions of software delivery and operational performance. The acronym stands for satisfaction and well-being, performance, activity, communication and collaboration, and efficiency and flow.

- Satisfaction is how fulfilled engineers feel about their work, team, tools, and culture. It also involves evaluating how that sentiment affects their engagement with the work they do.

- Performance evaluates whether the output of the engineering organization has the desired outcome relative to the investment. For example, what is the ROI of adding 20 engineers to an organization? This is notoriously difficult to measure in a concrete way when it comes to software engineering, meaning it’s more of a theoretical concept than a roadmap to specific metrics.

- Activity is a count of actions or outputs completed while performing work. These include outputs like design documents and actions like incident mitigation, as well as commits, pull requests, and code review comments.

- Communication and collaboration captures how people and teams communicate and work together.

- Efficiency and flow captures the ability to complete work or make progress on it with minimal interruptions or delays, whether individually or through a system.

SPACE offers a comprehensive (though fuzzy) approach to improving productivity. It acknowledges the interplay between different aspects of software development and provides a balanced and holistic model for assessment and improvement. Still, it is just a framework — it doesn’t offer any specifics about what exactly to measure or what “good” should look like.

A set of universal metrics can’t fully capture the effectiveness of your organization because organizations vary in size, age, and culture. A mature, larger organization may have very different challenges and therefore different areas to focus on for improvement compared to a smaller, newer organization. This means that while DORA metrics are incredibly useful, they must be complemented by other qualitative assessments, leadership insights, and perhaps more localized metrics that take into account the unique characteristics of specific teams.

Unfortunately, there is no definition of productivity that boils down to keeping an eye on a few simple metrics. Measuring productivity is actually pretty hard.

Measuring productivity

Engineering organizations measure developer productivity to eliminate bottlenecks and make data-informed decisions about resource allocation and business objective alignment. Assessing productivity also provides insights into project predictability, which aids in planning and forecasting. This data acts as an early warning system to recognize when teams are overburdened, allowing for proactive interventions to alleviate stressors and redistribute workloads.

Even when the intent of measuring productivity is to improve team and organizational effectiveness, individual engineers can still be concerned that the data will be used against them. There’s a pervasive worry that these metrics could translate into some form of individual performance review, even when that’s not the intended use. This concern can contribute to a culture of apprehension, where engineers might be less willing to take risks, innovate, or openly discuss challenges. Any perception that the data will be weaponized for performance purposes can doom an effectiveness effort. Say that you won’t use the data to target individuals and mean it.

Transparency in communicating the intent, scope, and limitations of productivity metrics can go a long way in assuaging these concerns. The metrics themselves likewise need to be transparent. By involving engineers in the process of deciding what to measure, how to measure it, and how the data will be used, you can mitigate fears and build a more cooperative culture focused on continuous improvement rather than punitive action.

Despite these risks, measuring productivity can foster healthy conversations about organizational improvement. Metrics can highlight inefficiencies or bottlenecks and open the door to constructive dialogue about how to solve these problems. This becomes especially necessary as a business grows and alignment between engineering objectives and broader business goals becomes more challenging. Software delivery metrics offer a standardized way to communicate the department’s status to other organizational stakeholders.

Choose your metrics carefully. Besides the risk of impacting the psychological safety of your engineers, there are other pitfalls to be aware of. Don’t rely on misleading or irrelevant metrics that provide a distorted view of what’s happening within the teams (for example, pull requests per engineer or lines of code committed). Poorly chosen metrics can lead to misguided decisions and even undermine the credibility of the whole measurement process.

Consider, too, the incentives that are created when you choose metrics. Overemphasizing activity-focused numbers might lead engineers to game the system in a way that boosts activity metrics but doesn’t genuinely improve their productivity or the value created by their work. This can result in a culture where superficial metrics are prized over substantive improvements, leading to technical debt and inefficiencies. On the other hand, if your metrics encourage engineers to submit more but smaller pull requests, you’re likely to see benefits in quality and speed of delivery.

Cycle time

The work of delivering code changes for individual tasks is often measured in terms of cycle time. This term comes from manufacturing processes, where cycle time is the time it takes to produce a unit of product and lead time is the time it takes to fulfill a delivery request.

In software development, these terms are often used interchangeably. For most features, it might not be reasonable to track the full lead time, that is, the time from a customer requesting a feature to its delivery. Assuming the team is working on a product that’s supposed to serve many customers, it’s unrealistic to expect features to be shipped as soon as the team hears the idea.

Although we’re reusing manufacturing terms, remember that there is no unit of product in software development. A car can only be sold by the manufacturer once. The work that happens in an engineering organization can be sold over and over again, with near-zero marginal cost for each additional sale of the exact same code.

When talking about cycle time for code, we’re talking about the time it takes for code to reach production through development, reviews, and other process steps. Cycle time is the most important flow metric because it indicates how well your engine is running. When diagnosing a high cycle time, your team might have a conversation about topics like this:

- What other things are we working on? Start by visualizing all the work in progress. Be aware that your issue tracker might not tell the whole truth because development teams typically work on all kinds of ad hoc tasks.

- How do we split our work? It’s generally a good idea to ship in small increments. This might be more difficult if you can’t use feature flags to gradually roll out features to customers. Lack of infrastructure often leads to a bad branching strategy, with long-lived branches and additional coordination overhead.

- What does our automated testing setup look like? Is it easy to write and run tests? Can you trust the results from the continuous integration (CI) server?

- How do we review code? Is only one person in the team responsible for code reviews? Do you need to request reviews from an outside technology expert? Is it clear who’s supposed to review code? Do we as a team value that work, or is someone pushing us to get back to coding?

- How well do team members know the codebase? If all the software was built by someone who left the company a while ago, chances are that development will be slow for a while.

- Is there a separate testing/quality assurance stage? Is testing happening close to the development team, or is the work handed off to someone on the outside?

- How often do we deploy to production/release our software? If test coverage is low, you might not feel like deploying on Fridays, or if deployment is not automated, you won’t do it after every change. Deploying less frequently increases the batch size of a deployment, adding more risk and again reducing frequency.

- How much time is spent on tasks beyond writing code? Engineers need focus time; getting back to code on a 30-minute break between meetings is difficult.

There are perfectly good reasons for cycle time to fluctuate, and simply optimizing for a lower cycle time would be harmful. However, when used responsibly, it can be a great discussion starter. Even better, consider tools that help visualize how this number moves over time, leading to a deeper understanding of trends and causes.
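One way to turn cycle time into a discussion starter is to break it into stages and see where changes spend most of their time. The Python sketch below assumes each change carries five hypothetical timestamps (`first_commit_at`, `opened_at`, `first_review_at`, `merged_at`, `deployed_at`). The stage names and the use of the median are illustrative choices, not a standard.

```python
from datetime import datetime
from statistics import median

# Hypothetical stages, each defined by a start and an end timestamp field.
STAGES = [
    ("coding", "first_commit_at", "opened_at"),
    ("waiting_for_review", "opened_at", "first_review_at"),
    ("in_review", "first_review_at", "merged_at"),
    ("waiting_for_deploy", "merged_at", "deployed_at"),
]


def stage_medians_hours(changes):
    """Median hours spent in each stage across a set of changes."""
    result = {}
    for name, start, end in STAGES:
        hours = [(c[end] - c[start]).total_seconds() / 3600 for c in changes]
        result[name] = median(hours)
    return result


def ts(day, hour):
    """Shorthand for building made-up timestamps in January 2024."""
    return datetime(2024, 1, day, hour)


changes = [
    {"first_commit_at": ts(1, 9), "opened_at": ts(1, 15),
     "first_review_at": ts(2, 15), "merged_at": ts(2, 17),
     "deployed_at": ts(3, 9)},
    {"first_commit_at": ts(2, 9), "opened_at": ts(2, 12),
     "first_review_at": ts(3, 10), "merged_at": ts(3, 11),
     "deployed_at": ts(3, 15)},
    {"first_commit_at": ts(3, 9), "opened_at": ts(3, 17),
     "first_review_at": ts(4, 9), "merged_at": ts(4, 12),
     "deployed_at": ts(4, 14)},
]

medians = stage_medians_hours(changes)
slowest_stage = max(medians, key=medians.get)
print(medians, slowest_stage)
```

In this made-up data, changes wait far longer for a first review than they spend in any other stage, which points the conversation toward the code review questions in the list above.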

Issue cycle time captures how long your epics, stories, and tasks (or however you plan your work) are in progress. Each team splits work differently, so they’re not directly comparable. If you end up creating customer value, it probably doesn’t matter whether that happens in five tasks taking four hours each or four tasks taking five hours each.

Things don’t always go smoothly. When you expected something to take three days and it took four weeks of grinding, your team most likely missed an opportunity to adjust plans together. When you find yourself in this type of situation, here are some questions to ask.

- What other things are we working on? Chances are that your team delivered something, just not this feature. Visualizing work and limiting work in progress is a common cure.

- How many people worked on this? Gravitating toward solo projects might feel like it eliminates the communication overhead and helps move things faster, but this is only true from an individual’s perspective, not the whole team’s.

- Are we good at sharing work? Splitting work is both a personal skill and an organizational capability. Engineers will argue it’s difficult to do. Nevertheless, do more of it, not less.

- How accurate were our plans? Suppose the scope of the feature increased by 200% during development. In that case, it’s possible that you didn’t understand the customer use cases, got surprised by the technical implementation, or simply discovered some nasty corner cases on the way.

- Was it possible to split this feature into smaller but still functional slices? Product management, product design, and engineers must work together to find a smart way to create the smallest possible end-to-end implementations. This is always difficult.

It feels great to work with a team that consistently delivers value to customers; that’s what you get by improving issue cycle time.

Deployment frequency

Depending on the type of software you’re building, “deployment” or “release” might mean different things. For a mobile app with an extensive QA process, getting to a two-week release cadence is already a good target, while the best teams building web backends deploy to production whenever a change is ready.

Deployment frequency serves as both a throughput and a quality metric. When a team is afraid to deploy, they’ll do so less frequently. When they deploy less frequently, bigger deployment batches increase risk. Solving the problem typically requires building more infrastructure. Here are some of the main considerations:

- If the build passes, can we feel good about deploying to production? If not, you’ll likely want to start building tests from the top of the pyramid to test for significant regressions, build the infrastructure for writing good tests, and ensure the team keeps writing tests for all new code. Whether tests get written cannot be dictated by outside stakeholders; this needs to be owned by the team.

- If the build fails, do we know whether it failed because of a real problem in the change or because of flaky tests? You need to understand which tests are causing most of your headaches so that you can focus efforts on improving the situation.

- Is the deployment pipeline to production fully automated? If not, it’s a good idea to keep automating it one step at a time. CI/CD pipeline investments start to pay off almost immediately.

- Do we understand what happens in production after deployment? Building observability and alerting takes time. If you have a good baseline setup, it’s easy to keep adding these along with your regular development tasks. If you have nothing set up, it will never feel like it’s the right time to add observability.

- Are engineers educated on the production infrastructure? Some engineers have never needed to touch a production environment. If it’s not part of their onboarding, few people are courageous enough to start making improvements independently.
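For the flaky-test question in the list above, one common heuristic is to flag a test that has both passed and failed on the same commit, since the code did not change between the runs. The Python sketch below assumes CI history is available as `(commit_sha, test_name, passed)` tuples, which is a hypothetical export format. It is a heuristic only, and it will miss flaky tests that were never retried.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Rank tests by the number of commits on which they both passed and failed.

    `runs` is an iterable of (commit_sha, test_name, passed) tuples.
    Returns a list of (test_name, flaky_commit_count), worst first.
    """
    outcomes = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)

    flaky = defaultdict(int)
    for (_sha, test), seen in outcomes.items():
        if seen == {True, False}:  # same code, different results
            flaky[test] += 1
    return sorted(flaky.items(), key=lambda item: (-item[1], item[0]))


# Made-up CI history; repeated runs on the same commit are retries.
runs = [
    ("abc", "test_checkout", False), ("abc", "test_checkout", True),
    ("abc", "test_login", True),
    ("def", "test_checkout", True), ("def", "test_checkout", False),
    ("def", "test_search", False), ("def", "test_search", False),
    ("123", "test_login", False), ("123", "test_login", True),
]

print(flaky_tests(runs))
```

Here `test_search` fails consistently, so it is treated as a real failure rather than a flaky one.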

Some measures to avoid

Historically, agile teams have tracked velocity or story points. Originally meant as a way to help teams get better at splitting work and shipping value, these units have been abused ever since as a way to directly compare teams and steer an organization toward output-based thinking.

If talking about story points helps you be more disciplined about limiting queue depth and WIP, go for it. If not, don’t feel bad about dropping story points as long as you understand your cycle times.

Another traditional management pitfall is to focus on utilization, thinking that you want your engineers to be 100% occupied. As utilization approaches 100%, cycle times shoot up and teams slow down. You’ll also lose the ability to handle any reactive work that comes along without causing major disruptions to your other plans.
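A textbook queueing model shows the shape of this effect. The Python sketch below assumes a single-server M/M/1 queue, where the mean time in the system is the service time divided by (1 − utilization). That model is an assumption made here for illustration: real teams are not single servers with random arrivals, so read the output as the shape of the curve rather than a prediction.

```python
def expected_cycle_time(service_days: float, utilization: float) -> float:
    """Mean time in system for an M/M/1 queue: service time / (1 - utilization)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return service_days / (1 - utilization)


# A task that takes two days of actual work, at increasing utilization.
for utilization in (0.50, 0.80, 0.90, 0.95):
    days = expected_cycle_time(2, utilization)
    print(f"{utilization:.0%} utilized -> about {days:.0f} days per task")
```

Under this model, a two-day task takes about 4 days at 50% utilization, 10 days at 80%, 20 days at 90%, and 40 days at 95%. The last few percentage points of utilization are the most expensive ones.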

There’s a time and place to look at metrics around individual engineers. In very healthy environments, they can be used to improve the quality of coaching conversations while understanding the shortcomings of these measures. In a bigger organization, an effort to focus on individual metrics will likely derail your good intentions around data-driven continuous improvement. Engineers will rightfully point out that the number of daily commits doesn’t tell you anything about how good they are at their jobs.

On the other hand, opportunities abound at the team level without shining a spotlight on any individual. Start your conversations there instead.

Classic productivity challenges

Assessing productivity challenges in software engineering teams requires looking beyond output metrics. Consider these potential culprits when trying to debug a productivity issue:

- Insufficient collaboration. Collaboration among team members is essential to improve issue cycle time. Collaboration allows for more effective planning and prioritization, reducing multitasking and aligning the team on common goals. Individual efforts may seem efficient in the short term, but they lack the collective intelligence and shared context that comes from teamwork.

- Siloing. To find gaps in collaboration, observe your issue tracker to see if projects are often completed by single contributors. A lack of multiple contributors on larger issues indicates a problem. Preventing siloing may involve setting team agreements and ensuring that tasks are broken down sufficiently for multiple people to work on.

- Multitasking. Taking on too many tasks simultaneously slows progress and creates waste. Track open stories, tasks, and epics against the number of engineers to gauge if there’s an overload. Listen to the team’s qualitative feedback on how they feel about their WIP levels. Introduce WIP limits to align everyone on completing existing tasks before starting new ones.

- Large increments. If projects often overrun, is the team trying to tackle overly large problems? Examine the time it takes to complete issues and look for scope creep to indicate planning deficiencies.

- Planning quality. When scope creep is common, consider it in future planning. You can also scrutinize long-running tasks to understand if they could have been broken down into smaller, more manageable parts, aiding in better planning for future issues.

- Cross-team sequencing. Even in the best-designed organizations, it’s sometimes necessary for two teams to work together to deliver customer value. Without care and attention, these partnerships can struggle to stay coordinated and deliver the right thing at the right time for the other team to make progress.

It’s worth mentioning that scope creep isn’t necessarily a bad thing! Mitigating its effects should be focused on building in time for learning, feedback, and discovery; reducing scope creep via extensive up-front planning and specification rarely produces good results.
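Two of the signals in the list above, siloing and multitasking, can be roughed out from issue tracker data. The Python sketch below is a minimal illustration at the team level. The field names (`days_in_progress`, `contributors`) and the five-day threshold for a "larger" issue are hypothetical.

```python
def solo_share(issues, min_days=5):
    """Fraction of larger issues that were completed by a single contributor."""
    large = [i for i in issues if i["days_in_progress"] >= min_days]
    if not large:
        return 0.0
    solo = [i for i in large if len(set(i["contributors"])) == 1]
    return len(solo) / len(large)


def open_items_per_engineer(open_items: int, engineers: int) -> float:
    """Open stories, tasks, and epics divided by team size."""
    return open_items / engineers


# Made-up completed issues for one team.
issues = [
    {"days_in_progress": 6, "contributors": ["ana"]},
    {"days_in_progress": 8, "contributors": ["ana", "bo"]},
    {"days_in_progress": 10, "contributors": ["cy"]},
    {"days_in_progress": 2, "contributors": ["bo"]},  # too small to count
]

print(f"{solo_share(issues):.0%} of larger issues were solo efforts")
print(f"{open_items_per_engineer(14, 5):.1f} open items per engineer")
```

Neither number means much on its own. They are most useful alongside the team’s own qualitative read on how loaded and how siloed they feel.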

Setting goals around productivity

If you’re just starting out on your productivity journey, goal-setting can feel intimidating, especially if you’re trying to prove the value of investing in this area. It can be tempting to go straight to frameworks like DORA and SPACE and try to set goals around those concepts. Still, you’ll have more luck if you identify a single opportunity from your conversations with engineers and execute on it (we’ll talk more about this in the final chapter).

For example, if you learn that CI builds fail 20% of the time due to seemingly random environmental issues, that’s a concrete data point to measure and set a target around. Once you hit the target, keep monitoring the metric so that you’ll notice if the failure rate climbs above it again. Rinse and repeat the process with different metrics for different kinds of improvements.
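Tracking a goal like this can be as simple as a weekly failure rate compared against a target. The Python sketch below assumes builds are available as `(week, failed_on_environment)` pairs and uses a 5% target. Both the data format and the target are hypothetical.

```python
from collections import defaultdict

TARGET = 0.05  # hypothetical target: at most 5% environment-related failures


def weekly_failure_rates(builds):
    """Map each week to the share of builds that failed on environment issues.

    `builds` is an iterable of (week_label, failed_on_environment) pairs.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for week, failed_on_environment in builds:
        totals[week] += 1
        failures[week] += bool(failed_on_environment)
    return {week: failures[week] / totals[week] for week in sorted(totals)}


def weeks_over_target(rates, target=TARGET):
    """Weeks in which the failure rate was above the target."""
    return [week for week, rate in rates.items() if rate > target]


# Made-up data: a bad week, a clean week after a fix, then a regression.
builds = (
    [("2024-W01", i < 2) for i in range(10)]
    + [("2024-W02", False) for _ in range(20)]
    + [("2024-W03", i < 1) for i in range(10)]
)

rates = weekly_failure_rates(builds)
print(rates)
print(weeks_over_target(rates))
```

The third week in this made-up data is the kind of regression you would want to be told about after the original target was met.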

Once you’ve embraced that pattern, it’s a good time to get DORA metrics in place if you haven’t already and start using them to track the impact of improvements on teams and services. In many ways, the core DORA metrics cover the activity pillar in SPACE, and establishing them within your organization will quickly highlight potential opportunities.

As your productivity journey progresses, DORA metrics will continue to be useful for tracking trends, but they will never tell your whole productivity story. As you start to recognize themes in your work and your users’ reported issues, embracing SPACE more thoroughly beyond the activity dimension will make sense. The SPACE framework is best used to identify various indicators of overall productivity, from OKR/goal attainment to meeting load to cross-team collaboration burden.

Setting goals around SPACE pillars is also fraught; there’s no way, for example, to boil efficiency and flow down to a single number. On the other hand, SPACE is great as a framework to classify problems and brainstorm specific metrics you might use to track trends and validate improvements.

When it comes to setting metrics goals, you’ll sometimes find yourself pressured to set a goal before you know how you’re going to solve the fundamental problem. Even under pressure, set goals around potential valuable outcomes from working on the problem, not on a restatement of the problem itself.

Tools and tactics

Opportunities to improve flow exist throughout the reporting chain and sometimes straight up to senior leadership. Culturally, you need to get people at all levels to understand and internalize the idea that interruptions for software engineers are bad and should be minimized.

Of course, some interruptions are inevitable, but many are imposed without recognizing the cost. Before you do anything else with developer productivity, ensure there’s general agreement on reducing interruptions (we’ll discuss this in more detail in the next chapter).

At the team level, some interruptions are within the team’s control and some are not. For example, suppose a code change requires a review from another team. In that case, the originating engineer is interrupted in their task until a person from the other team accepts the change, and the originating team may not feel in control of the situation in the meantime.

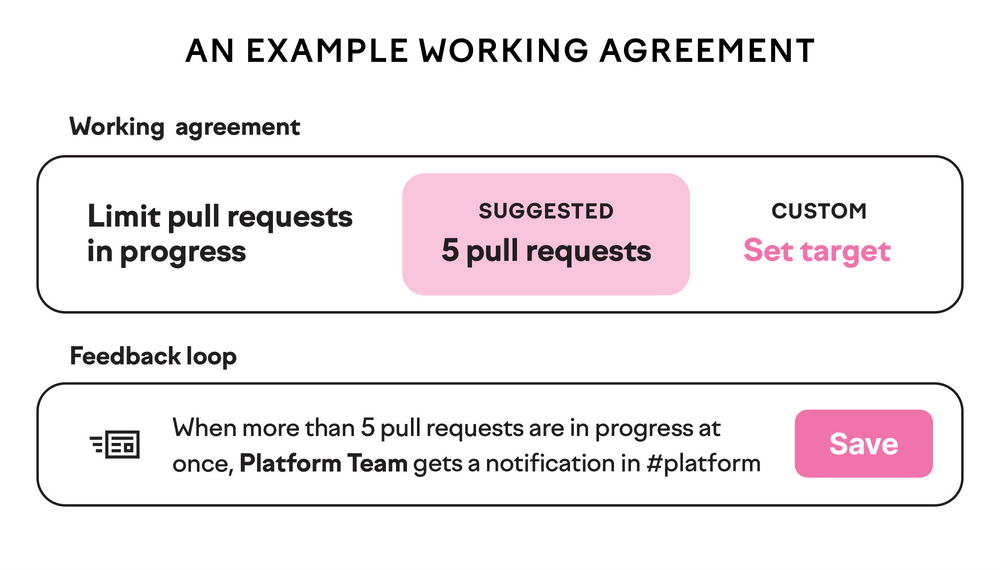

Nonetheless, plenty is in the control of individual teams: what they prioritize, how they work together, how they ensure quality, how they automate tedious tasks, and much more. Working agreements and retrospectives are two tools to use at the team level.

- Working agreements. Team members agree on how they want to work. For example, team members could agree that they will release code at least once a day and that reviews should be completed within two hours of the assignment. By setting and monitoring these agreements, the team can recognize where they’re falling short and identify resolutions that could be technical or process-focused.

- Retrospectives. Team members assess the work of the previous period, how they worked together, and how well they upheld the working agreements. They then propose ideas and accept action items for future iterations.
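Monitoring a working agreement does not need heavy tooling. As a minimal Python sketch, the example agreement above (reviews completed within two hours of assignment) could be checked like this. The `(assigned_at, completed_at)` format is a hypothetical export from your code review tool.

```python
from datetime import datetime, timedelta

REVIEW_AGREEMENT = timedelta(hours=2)  # taken from the example agreement above


def share_within_agreement(reviews, agreement=REVIEW_AGREEMENT):
    """Share of reviews completed within the agreed time.

    `reviews` is a list of (assigned_at, completed_at) datetime pairs.
    Returns None when there is nothing to measure.
    """
    if not reviews:
        return None
    on_time = sum(
        1 for assigned, completed in reviews
        if completed - assigned <= agreement
    )
    return on_time / len(reviews)


# Made-up reviews taking 1 hour, 2 hours, 3 hours, and 30 minutes.
day = datetime(2024, 1, 8, 9, 0)
reviews = [
    (day, day + timedelta(hours=1)),
    (day, day + timedelta(hours=2)),
    (day, day + timedelta(hours=3)),
    (day, day + timedelta(minutes=30)),
]

print(share_within_agreement(reviews))
```

A number like this is most useful as input to the team’s own retrospective, where the team can decide whether the agreement or the way of working needs to change.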

At the organizational level, we start to talk about more ambient interruptions, which no one is responsible for but just seem to appear. Tackling these interruptions is outside the scope of any one team unless a team is specifically responsible for this kind of thing. This is where things get more challenging but also more rewarding; solving these cross-team problems tends to have more leverage than focusing solely on team-level opportunities.

Once you reach a certain size, it’s useful to be explicit about who is accountable for developer productivity and what it’s like to build software at your company. If your immediate response is “everyone,” either you are still a relatively small organization or it’s time to start thinking about a more definitive answer.

What’s next?

In this chapter, we discussed developer productivity, including ways to quantify it and guidance on goal-setting in the developer productivity space. Next, we’ll talk about the less quantifiable but equally important developer experience.

Further reading

- The Principles of Product Development Flow: Second Generation Lean Product Development, by Donald G. Reinertsen. A comprehensive guide on applying lean principles to software and product development, enhancing productivity and efficiency.

- The DevOps Handbook: How to Create World-Class Agility, Reliability, and Security in Technology Organizations, by Gene Kim, Patrick Debois, John Willis, and Jez Humble. Explains DevOps principles and practices, emphasizing collaboration and productivity in software development.

- Making Work Visible: Exposing Time Theft to Optimize Work & Flow, by Dominica DeGrandis. Focuses on the importance of making work visible to improve productivity and efficiency in software development.

- The Mythical Man-Month: Essays on Software Engineering, by Frederick P. Brooks Jr. A classic book in software engineering that discusses the challenges and pitfalls of managing complex software projects.

- The SPACE of Developer Productivity, by Nicole Forsgren et al. The white paper that describes the SPACE framework and the multidimensional nature of “productivity.”