4. Developer experience

Effective software organizations give engineers the support and tools they need to feel engaged.

In the previous chapter, we discussed how processes impact developer productivity and how we might measure it. Here, we look at the other side of software development: developer experience. We’ll revisit the table stakes we discussed in previous chapters and explore the aspects of experience that we can measure and set goals around.

Measuring developer experience

Developer experience metrics are more qualitative than the metrics we saw in Chapter 3. Capturing employee satisfaction and engagement data, for example, is table stakes, but you’d be hard-pressed to call it quantitative: the small number of data points makes the error bars quite wide.

If you want to understand how developer experience affects your team’s effectiveness, you need to evaluate how employees feel about their work and the other factors that contribute to overall job satisfaction. Examine the following points:

- Sources of frustration. Software engineers get frustrated when their flow is interrupted — sometimes by a tool, sometimes by a process, and sometimes by another human. These frustrations add up, impacting the engineer’s sense of satisfaction at getting things done while also working against timely delivery. Consider making it easy and obvious to report engineer frustrations to a ticket queue that you check regularly.

- Employee satisfaction and engagement. This measures how content and committed employees are. Regular employee surveys can help capture this data. Additionally, exit interviews and employee reviews on job websites can offer insightful perspectives on employee satisfaction and engagement.

- Employee turnover and regretted attrition. Employee turnover refers to the rate at which employees leave an organization. A high turnover rate, especially among high-performing or recently hired individuals, could indicate underlying organizational issues. An increase in regretted attrition — the loss of employees that the organization would have preferred to retain — is a warning sign of poor organizational health.

- Leadership trust and communication effectiveness. How well leaders and the organization communicate can significantly affect employee satisfaction. Regular surveys can gauge employees’ trust in leadership and the effectiveness of organization-wide communications, pointing to areas where leadership and communication strategies could improve.

Note that each of these metrics suffers from two downsides: the data arrives long after the damage is done, and it is noisy and nuanced.

Identifying improvements

The people whose productivity you are trying to improve are the best source of information about what needs improving. You can better understand their needs by approaching this on two fronts: talking to the users of your internal development systems and collecting data about tool behavior as engineers go about their day.

Review the table stakes

We discussed organization-wide table stakes in Chapter 1 (empowered teams, rapid feedback, and outcomes over outputs), and we discussed team-specific table stakes in Chapter 3 (limited queue depth, small batch sizes, limited work in progress).

All of these come into play in developer experience. The absence of any one of these is known to reduce a software engineer’s satisfaction and engagement with the job.

As a leader, you need to honestly evaluate where your team and/or organization stands regarding this must-have list. If any of these ways of working are missing or on shaky ground, you (and your leadership) must acknowledge that there’s a ceiling on the improvements you can make until that changes.

Talk to your users

The phrase “talk to your users” may be unexpected here, but it’s a surprisingly helpful framing. Your engineering colleagues are your users, and your product is effectiveness. As with the real-world users of your company’s product, talking to your internal users can be a source of powerful insights. This can take a few forms.

Have as many conversations with small groups of engineers (including both veterans and new hires, product and platform teams) as you can manage. You could gather some of this input via a survey, but hold at least some of these conversations in person with a few teams; that setting tends to generate usefully divergent ideas.

You can use prompts like these:

- What could we improve about your tools?

- What’s an annoyance for engineers today that could become a real risk in the future?

- What would help the company learn more quickly through rapid feedback?

If you’ve established a high-trust environment, go a step further and shadow engineers while they do their job. You’ll be amazed at the workarounds you never knew people were employing and the things you didn’t realize people were putting up with.

Many or even most of the ideas you’ll come across will have technical solutions, but don’t tune out the people, process, and political challenges that merit different approaches. Solving one of those can increase engineering leverage without spending any engineering time, which could be a huge win.

Collect empirical data

Your users will suggest lots of opportunities for improvement — so many, in fact, that you’ll have difficulty choosing from among them, and the initial list will feel infinite. This is when it’s essential to have quantitative data to help guide your prioritization and validate the qualitative stories you hear. Be honest about what you can, can’t, will, and won’t do.

It’s relatively easy to build observability into your internal tooling. If you don’t already have a system to record the behavior of internal tools, now might be the time to consider buying or building one. An internal tool should be able to record every invocation and its outcome, along with various metadata about the interaction. Most importantly, it should record how long a developer has waited to get output from the tool.

If you make it easy to capture user experience data from internal tools — say, by providing a standard API that other engineers can use to collect signals that can be stored usefully alongside other tooling data — internal tool authors will tend to capture some metrics.
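As a rough illustration of the kind of standard API described above, here is a minimal Python sketch. The `record_invocation` context manager and the in-memory `EVENTS` sink are hypothetical names invented for this example; a real system would send events to whatever metrics store you already run. It records each invocation, its outcome, arbitrary metadata, and, most importantly, how long the developer waited.

```python
import time
from contextlib import contextmanager
from typing import Any, Callable, Dict, Iterator, List

# Hypothetical in-memory sink; a real system would ship events to a metrics store.
EVENTS: List[Dict[str, Any]] = []


def default_sink(event: Dict[str, Any]) -> None:
    EVENTS.append(event)


@contextmanager
def record_invocation(
    tool: str,
    command: str,
    sink: Callable[[Dict[str, Any]], None] = default_sink,
    **metadata: Any,
) -> Iterator[Dict[str, Any]]:
    """Record one invocation of an internal tool: outcome, wait time, and metadata."""
    event: Dict[str, Any] = {"tool": tool, "command": command, "metadata": dict(metadata)}
    start = time.monotonic()
    try:
        yield event  # the tool runs here; callers may add fields to `event`
        event["outcome"] = "success"
    except Exception as exc:
        event["outcome"] = "error"
        event["error_type"] = type(exc).__name__
        raise  # never swallow the tool's own errors
    finally:
        # How long the developer waited for output: the most important signal.
        event["wait_seconds"] = time.monotonic() - start
        sink(event)


if __name__ == "__main__":
    # Example: wrapping a hypothetical deploy command.
    with record_invocation("deploy-cli", "deploy", team="payments") as event:
        event["target"] = "staging"
    print(EVENTS[-1]["outcome"], EVENTS[-1]["metadata"])
```

Because the wrapper is a context manager, a tool author only has to wrap the existing command body, which keeps the cost of adoption low.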

Developer surveys

Surveys are essential tools for understanding developer experience beyond the team level. They provide two kinds of value:

- Validation. Surveys act as a barometer, gauging whether the organization’s strategies, tools, and policies align with its intended outcomes. Essentially, they confirm whether you’re on the right path toward improving the developer experience.

- Discovery. Beyond mere validation, surveys also function as windows into the uncharted territories of developer needs, wants, and challenges. They help organizations discover fresh avenues for improvement.

How to use surveys

It’s good to do a comprehensive developer survey once or twice a year, plus more informal but more frequent surveys with smaller audiences. Here are a few statements that we’ve found particularly useful to evaluate:

- I feel safe expressing concerns to my team.

- My team makes frequent improvements based on feedback.

- My team systematically validates user needs.

- I have enough uninterrupted time for focus work.

- It’s simple to make changes to the codebases I work with.

Ask about a timeframe short enough to remember but long enough to be representative: “the last month” or “the last week,” but probably not “the last six months.” Clearly defining the period reduces the noise introduced by people’s differing interpretations and assumptions. With that in mind, avoid questions and prompts that include “since the last survey,” as well as those that ask how or whether something has improved over an indefinite timeframe. Instead, use past survey data to assess changes over time (and recognize that a new question needs at least two survey rounds before it can show you a trend).

You can make the responses fully open to promote transparency and discussion, or you can run a confidential survey to lower the threshold for reporting problems. Either way, explicitly clarify how the responses will be used and reported. If you go with confidential surveys, you need to be mindful of a few key points:

- Limit access to identifying data. For example, a breakdown of survey results by tenure can be extremely identifying in a small-ish company that’s been around for a while.

- If you say the responses are anonymous, mean it. Make it impossible to link a response back to a person or any identifying metadata.

- Anonymous doesn’t mean unpublished. Make clear to survey respondents whether you will publish unattributed commentary.
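One way to put the first point into practice is to suppress any breakdown group that is too small to hide individuals. The sketch below assumes responses are stored as `(group, score)` pairs with no respondent identifiers, and it uses a minimum group size of 5, which is purely an illustrative choice; the function and constant names are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Illustrative threshold: breakdown groups smaller than this are suppressed.
MIN_GROUP_SIZE = 5


def breakdown(
    responses: List[Tuple[str, int]], min_group_size: int = MIN_GROUP_SIZE
) -> Dict[str, Optional[float]]:
    """Mean score per group (for example, a tenure band).

    `responses` holds (group_label, score) pairs with no respondent identifiers.
    Groups with fewer than `min_group_size` responses map to None instead of a
    score, so a small group can't be traced back to individuals.
    """
    groups: Dict[str, List[int]] = defaultdict(list)
    for group, score in responses:
        groups[group].append(score)
    return {
        group: (mean(scores) if len(scores) >= min_group_size else None)
        for group, scores in groups.items()
    }
```

Suppression alone isn’t a complete safeguard: someone who can run arbitrary breakdowns may still narrow results down by comparing overlapping groups, which is why limiting access matters too.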

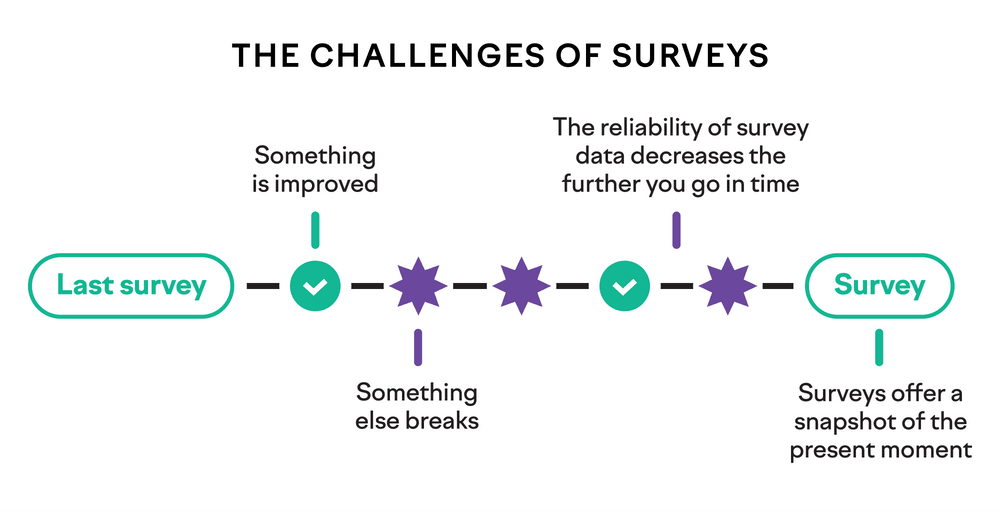

The challenges of surveys

One of the primary issues you’ll run into with surveys is the squeaky wheel syndrome, where the loudest voices overshadow more valuable feedback. In this situation, you could inadvertently channel resources to appease this vocal subset, neglecting the broader (and sometimes more pertinent) issues. Another challenge is recency bias, where respondents predominantly focus on recent events while filling out the survey, leaving behind older yet still impactful concerns. This bias can sometimes amplify the significance of recent minor issues while diminishing long-standing critical ones.

Sampling bias further complicates the picture. Without careful design and execution, surveys might inadvertently capture only a specific subset of developers, leaving you with feedback that doesn’t represent the sentiments of the entire organization. The best way to avoid this bias is to aim for participation from close to 100% of the engineering organization.

Then there’s the challenge of striking the right frequency. If you deploy surveys too often, you may run into survey fatigue, diminishing the quality and quantity of feedback. However, sparse surveys can fail to capture rapidly evolving sentiments.

There’s also an inherent risk in tying objectives too tightly to survey outcomes. While responding to feedback is vital, it’s equally important to recognize that surveys are but one facet of a multi-dimensional landscape. Over-reliance can lead to reactive strategies rather than proactive ones.

Diversifying feedback channels

While surveys provide valuable insights, diversifying feedback channels ensures a richer, more rounded understanding of developer experience. Regular one-on-one sessions, open discussions, a forum for submitting frustrations, shadowing sessions, or even casual coffee chats can offer more continuous insights into developer sentiments. Telemetry can also provide continuous, passive feedback on tool usage patterns and potential pain points.

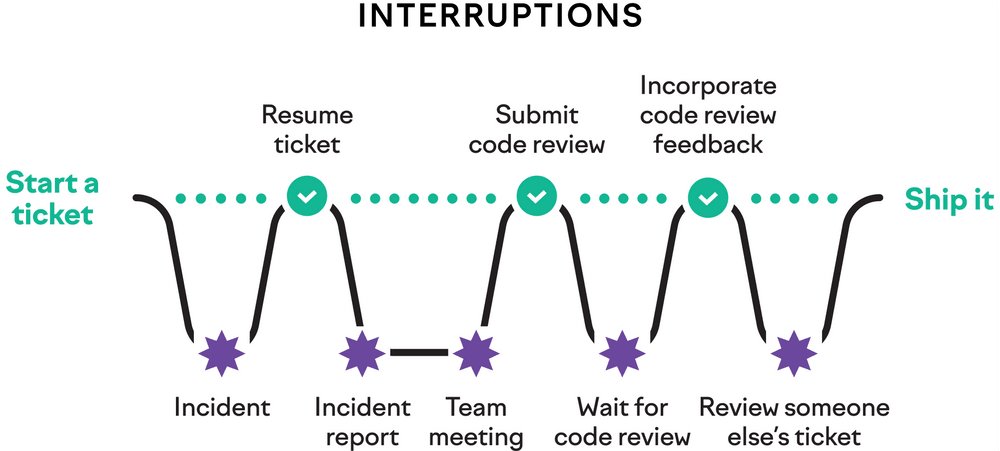

Fighting back against interruptions

One of the critical concepts in productivity is flow, as represented by the efficiency and flow pillar of SPACE. Uninterrupted time is the building block of flow, and in most organizations there are plenty of interruptions to measure. These come in all shapes and sizes, from meetings to GitHub outages and everything in between. Some interruptions do more harm than others, especially in aggregate. The right metrics for your purposes will depend on how you understand the nature of the productivity challenges in your organization.

Interruptions — anything that yanks a developer out of that elusive flow state — can appear out of nowhere. They’re often untracked and underestimated in their ability to derail focus and productivity.

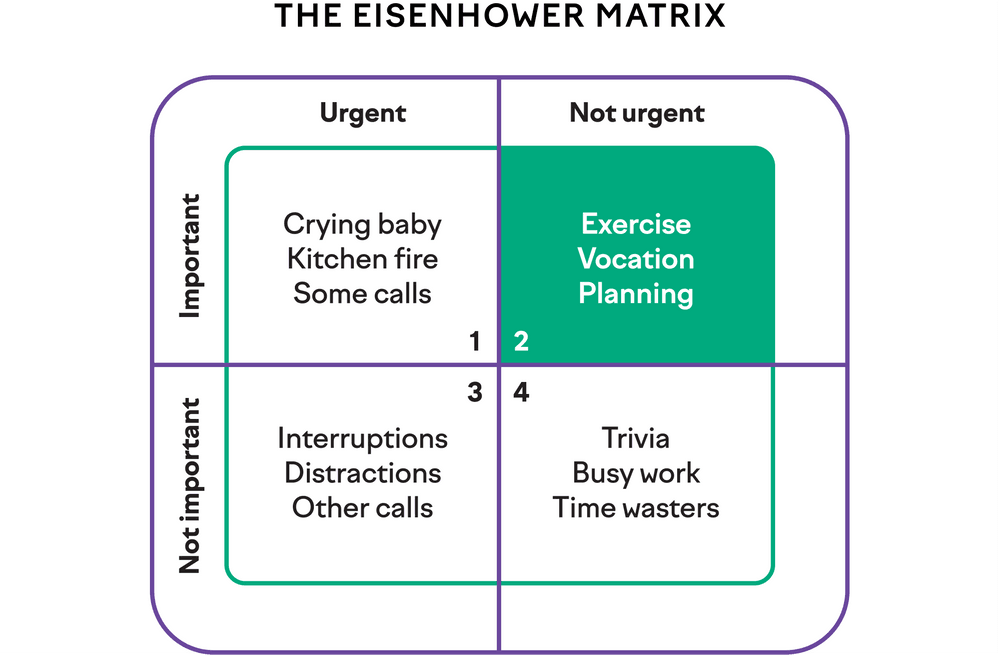

Some interruptions are genuinely urgent and require immediate attention. Others stem from outdated processes or habits and can be scheduled for later. An approach based on the Eisenhower matrix categorizes interruptions by urgency and importance and then devises a strategy to handle each category effectively.

- Urgent and important. Issues like production outages that demand immediate attention and generally have team-wide consensus for prioritization. Certain customer situations can also fall into this category.

- Important but not urgent. Things like discussing plans for a new feature are important, but not necessarily time-sensitive.

- Urgent but unimportant. These are interruptions an engineer could handle, but where an equally good and more timely response is available elsewhere. For example, a junior engineer asks a senior engineer a blocking question even though the answer is well documented and was also answered in chat last week.

- Neither urgent nor important. Questions or issues that could have waited or been solved through other means. These are especially disruptive because they often don’t warrant the break in focus they cause. For example, this can happen when a manager stops by an engineer’s desk without recognizing that the engineer is otherwise focused.
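The four quadrants can be expressed as a small triage function. This is a hypothetical sketch: the `Interruption` type and the `Handling` strategies are illustrative labels drawn from the descriptions above, not a prescribed process.

```python
from dataclasses import dataclass
from enum import Enum


class Handling(Enum):
    NOW = "handle immediately"
    SCHEDULE = "schedule for later"
    REDIRECT = "point to a better source, such as docs or chat history"
    DEFER_OR_DROP = "batch, defer, or drop"


@dataclass(frozen=True)
class Interruption:
    description: str
    urgent: bool
    important: bool


def triage(item: Interruption) -> Handling:
    """Map an interruption to its Eisenhower quadrant's suggested handling."""
    if item.urgent and item.important:
        return Handling.NOW            # e.g. a production outage
    if item.important:
        return Handling.SCHEDULE       # e.g. planning a new feature
    if item.urgent:
        return Handling.REDIRECT       # e.g. an already-documented blocking question
    return Handling.DEFER_OR_DROP      # e.g. a drop-by that could have waited
```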

Certain types of interruptions require a broader organizational fix rather than individual adjustments — interruptions like meetings, internal support, external support, and production incidents. These not only impact the effectiveness of individual software developers but can also destabilize teams and processes as a whole, especially as a company scales.

The meeting dilemma

Meetings within an organization exhibit a wide range of effectiveness. Some prove to be instrumental in decision-making and collaboration, while others can frankly be worthless (and occasionally verge on harmful). The underlying cost of a meeting isn’t limited to its duration; it extends to the interruption of deep focus and to the trust that the meeting either creates or erodes.

Engineers should designate blocks of time for focused work, and these should remain inviolate. Calendar features like auto-decline can safeguard these precious hours, preserving dedicated work time.

The frequency of meetings often correlates with job responsibilities. People in leadership roles, such as engineering managers and tech leads, may find their schedules more crowded with meetings than those of other team members. Despite this variance, a universal objective should be to secure uninterrupted blocks of concentration for all roles. For example, among ICs, you could aim for at least four hours of focused work on four days each week.
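To check a calendar against a goal like this one, you could compute focus time from meeting blocks. The sketch below makes assumptions that aren’t in the text: a 09:00 to 17:00 workday, and only meeting-free stretches of at least 60 minutes count as focus time. All names are hypothetical.

```python
from datetime import time
from typing import Dict, List, Tuple

Meeting = Tuple[time, time]  # (start, end) on the same day

# Assumptions for this sketch: a 09:00-17:00 workday, and only meeting-free
# stretches of at least an hour count toward focus time.
DAY_START = 9 * 60
DAY_END = 17 * 60
MIN_BLOCK_MINUTES = 60


def _to_minutes(t: time) -> int:
    # Clamp to the workday so early or late meetings don't distort the math.
    return min(max(t.hour * 60 + t.minute, DAY_START), DAY_END)


def focus_minutes(meetings: List[Meeting]) -> int:
    """Total minutes in meeting-free blocks of at least MIN_BLOCK_MINUTES."""
    total = 0
    cursor = DAY_START  # end of the latest meeting seen so far
    for start, end in sorted(meetings):
        gap = _to_minutes(start) - cursor
        if gap >= MIN_BLOCK_MINUTES:
            total += gap
        cursor = max(cursor, _to_minutes(end))  # handles overlapping meetings
    if DAY_END - cursor >= MIN_BLOCK_MINUTES:
        total += DAY_END - cursor
    return total


def meets_weekly_goal(
    week: Dict[str, List[Meeting]], min_minutes: int = 240, min_days: int = 4
) -> bool:
    """True if at least `min_days` days have `min_minutes` of focus time.

    `week` should contain every workday, with an empty list for meeting-free days.
    """
    good_days = sum(1 for meetings in week.values() if focus_minutes(meetings) >= min_minutes)
    return good_days >= min_days
```

Seven half-hour meetings spread one per hour across an afternoon and morning leave only a single one-hour block under these assumptions, which is the kind of fragmentation a raw “hours in meetings” number hides. Adjust `MIN_BLOCK_MINUTES` to match what your engineers say is the shortest stretch in which they can do real work.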

Minimizing and optimizing meetings frees up significant blocks of productive time for teams. Here are some effective strategies to consider:

- Clear objectives. Before scheduling a meeting, clarify its purpose. If the objective can be achieved through an email or a quick chat, opt for that instead.

- Audit recurring meetings. Periodically review standing meetings to determine if they’re still relevant or if their frequency can be reduced. Some weekly meetings might be just as effective if held every other week or monthly.

- Agenda requirement. Insist on an agenda for every meeting. This ensures that the meeting stays on track and can also help participants evaluate if their attendance is essential.

- Time limits. Meetings that exceed 30 minutes should be rare, and meetings that exceed an hour should be exceptional. Even for large undertakings, long meetings tend to hurt more than they help. A series of shorter meetings, with time to reflect between them, is more likely to produce powerful outcomes.

- Limit attendees. Invite only those who are essential to the meeting’s objective. A smaller, more relevant group can often make decisions more quickly.

- Share the outcomes of meetings. Small, focused, agenda-driven meetings don’t need to be secretive. Create a mailing list or chat channel where people can stay up to date on projects or meetings they’re interested in without having to attend all the time or feel like they’re missing out.

- Empowered decision-making. Establish clear protocols for decision-making that don’t always rely on group consensus. Empower individuals or smaller teams to make decisions where appropriate.

- Asynchronous updates. For meetings that are informational or offer updates, consider asynchronous methods, such as recorded video updates or written reports (or both) that individuals can review independently. Remember that you’ll frequently need to deliver the same message multiple times in multiple ways, so if it’s important (like an all-hands meeting), make a point of ensuring that people receive and incorporate the information.

A note on asynchronous collaboration

Asynchronous collaboration offers a significant advantage over certain in-person meetings: it allows engineers to choose when to engage with a task rather than disrupt their focus for a meeting at a potentially inconvenient time. It also alleviates the need to cram knowledge work into a 30-minute timeslot.

To make asynchronous decision-making work, define specifically how you’ll handle each kind of decision. One practical step is to create templates for common decision-making processes. These templates provide a structured approach to things like:

- Design reviews. A document to propose designs for a significant new feature or capability. It describes the business need, explains non-goals and tradeoffs, and solicits feedback on key decisions.

- Build vs. buy decisions. A document to capture the pros and cons of building a solution in-house versus purchasing an off-the-shelf solution.

- New API or common library designs. A document detailing the requirements, expected benefits, and potential impacts of introducing a new API or shared library.

Shared documents become a central part of asynchronous collaboration. They allow team members to add their input, edit, and comment in real time or at their convenience. Establishing a window of time for commenting — a set period during which team members can review and provide feedback — ensures that discussions are timely but not rushed.

While the goal is to minimize live meetings, some topics may still require synchronous communication to move the conversation forward. A meeting is valid in this case, but think carefully about who needs to be there. To make it easy for people to consume the meeting without attending, record the meeting and designate someone to take notes.

The effectiveness of asynchronous collaboration depends on the tools at hand. Even today, some mainstream tools fall far short of supporting collaborative asynchronous work. Invest in tools that enable real-time editing, commenting, and sharing.

While asynchronous collaboration is powerful, there are also times when a quick synchronous discussion is more effective. Providing the means to effortlessly transition to an audio or video call, or the physical space to have a quick conversation, can resolve complex issues more quickly.

Internal support

Internal support in a software organization ensures the smooth functioning of teams, particularly as software engineers assist their peers in navigating and completing tasks. It acts as a bridge, filling in knowledge gaps, clarifying doubts, and facilitating better understanding. As vital as it is, this very support system is typically disorganized, ad hoc, unrecognized, and itself unsupported — for example, a single developer support channel in a messaging tool where everyone asks everything. As such, it can become a significant source of interruptions, especially when the demand surpasses the supply of knowledgeable peers who can assist.

One common cause of increased demand for internal support is the absence of self-serve solutions. In an ideal scenario, engineers would have tools, platforms, and documentation at their disposal to independently find answers to their queries. Without these, they’re left with no choice but to seek help from others, leading to frequent interruptions for both the one seeking help and the one providing it. Similarly, when clear, straightforward processes (aka happy paths) for common tasks aren’t established, engineers often find themselves in a labyrinth of trial and error, pulling in colleagues to help navigate.

Perhaps more insidious is the issue of knowledge siloing. When knowledge becomes the domain of a select few and isn’t disseminated broadly, it creates an environment where constant queries become the norm. Those in the know are frequently interrupted, and those out of the loop continually seek guidance. If a subset of engineers always provides support, it may prevent others from developing problem-solving skills and self-sufficiency. You can solve this through knowledge-sharing sessions, shadowing sessions, and partnering on tasks unfamiliar to other team members.

Relying heavily on the same few people also stifles growth for the wider team and concentrates critical knowledge with only those few, which makes the team vulnerable when they are unavailable. As a leader, you need to make sure those people take real time away from work so that the organization can see how it reacts.

While internal support is an invaluable aspect of work in software organizations, without the right structures and resources in place, it can morph from a support system into a persistent source of disruptions for a small group of people who could be producing a lot more value. AI search tools and knowledge-sharing sessions can help fill the gap, while collaborative ways of working can help keep it from showing up in the first place.

External support

External support, especially for customers, users, and user-facing colleagues, comes with its own set of challenges. The requests can be unpredictable, of varying quality, and cover a broad spectrum of topics. Some may be straightforward and easy to address, while others might be vague, complex, or even misdirected, requiring more time and effort to resolve.

To manage these sorts of demands, tools and processes like ticket queues and WIP limits are invaluable. Here’s why.

- Visibility. Ticket queues provide a clear view of incoming requests, shedding light on the current workload and types of issues being raised.

- Prioritization. Understanding the queue helps in resource allocation. It becomes feasible to triage requests, ensuring that high-priority or urgent issues are addressed swiftly and engineers are only pulled in when necessary.

- Workload management. WIP limits act as a buffer, ensuring that support teams aren’t swamped with an unmanageable number of requests at once. This allows for a consistent quality of support.
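A minimal sketch of a prioritized ticket queue with a WIP limit, using Python’s standard `heapq` module. The priority convention (lower numbers are more urgent) and the class and method names are assumptions for illustration only.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class Ticket:
    priority: int                      # assumed convention: lower = more urgent
    seq: int                           # tie-breaker: first come, first served
    title: str = field(compare=False)  # excluded from ordering and equality


class SupportQueue:
    """A prioritized ticket queue with a work-in-progress (WIP) limit."""

    def __init__(self, wip_limit: int) -> None:
        self.wip_limit = wip_limit
        self._backlog: List[Ticket] = []   # min-heap ordered by (priority, seq)
        self.in_progress: List[Ticket] = []
        self._counter = itertools.count()

    def submit(self, title: str, priority: int) -> None:
        heapq.heappush(self._backlog, Ticket(priority, next(self._counter), title))

    def pull(self) -> Optional[Ticket]:
        """Start the most urgent ticket, unless the team is already at its WIP limit."""
        if len(self.in_progress) >= self.wip_limit or not self._backlog:
            return None
        ticket = heapq.heappop(self._backlog)
        self.in_progress.append(ticket)
        return ticket

    def finish(self, ticket: Ticket) -> None:
        self.in_progress.remove(ticket)

    @property
    def backlog_size(self) -> int:
        return len(self._backlog)
```

When `pull` returns `None` because the WIP limit is reached, that’s the signal to finish something before starting something new, rather than to pull in another engineer.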

To further streamline the process, office hours can be a big help. Setting specific periods dedicated to addressing external queries ensures:

- Predictability. Both the support team and those seeking support have a defined window. This clarity helps in setting expectations.

- Focus. When not in the office hours window, teams can redirect their attention to other pressing tasks, ensuring a balanced distribution of effort and time.

Continually analyze your support workload to find things you could proactively address. Self-serve configuration, UX improvements, help center articles, guides, or training sessions can completely eliminate entire categories of customer support requests.

Production incidents

Just as not all meetings are created equal, neither are all incidents. When assessing incidents, several factors matter.

- Frequency. How often are incidents happening?

- Severity. How significant is the problem — is it a minor hiccup or a full-blown outage?

- Impact. What were the broader consequences for systems and users?

- Time spent. How long did the incident last? How much time did we spend on it after that?
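If incident records already live somewhere structured, summarizing these four factors can be straightforward. In this hypothetical sketch, `users_affected` stands in for impact and `followup_hours` for time spent after resolution; both fields, and the severity labels, are assumptions you’d replace with whatever your incident tracker actually records.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Incident:
    started: datetime
    resolved: datetime
    severity: str          # e.g. "SEV1".."SEV3"; the scale is an assumption
    users_affected: int    # one possible proxy for impact
    followup_hours: float  # time spent after resolution (reviews, fixes)


def summarize(incidents: List[Incident], period_days: int) -> Dict[str, object]:
    """Frequency, severity mix, impact, and time spent over a reporting period."""
    durations = [i.resolved - i.started for i in incidents]
    return {
        "incidents_per_week": len(incidents) / (period_days / 7),
        "by_severity": dict(Counter(i.severity for i in incidents)),
        "users_affected": sum(i.users_affected for i in incidents),
        "total_duration_hours": sum(durations, timedelta()).total_seconds() / 3600,
        "followup_hours": sum(i.followup_hours for i in incidents),
    }
```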

If you’re tracking these parameters, do so transparently. Incident metrics should inform, not intimidate, so that no one feels the need to underreport or downplay the scale of an incident.

Truly blameless post-incident reviews can be transformative, providing a platform to dissect what went wrong and how to prevent future occurrences. By identifying patterns and drawing up actionable items from each incident, teams are better poised to anticipate and mitigate future challenges.

Moreover, tools for incident analysis can offer granular insights, highlighting potential areas of vulnerability. A first-responder rotation ensures that a designated responder is always on standby, primed to tackle incidents, while distributing responsibility more evenly across the team.

Are you interruption-aware?

Answering the following questions can reveal insights into how well the organization is prepared to manage interruptions. The goal isn’t to eliminate them entirely but rather to measure, reduce, and manage them in a way that aligns with the team’s needs and the organization’s objectives.

- Do you have a system for tracking interruptions? Understanding the nature and urgency of interruptions can go a long way in managing them effectively. Are you capturing data on what kinds of interruptions are most frequent and which types disproportionately affect certain team members? This will help in deciding where to invest time in process improvements.

- Are you measuring the right things? Metrics offer a quantitative way to understand the burden of interruptions, but are you measuring the things that truly matter? For instance, beyond just tracking the number of meetings, are you looking at their ROI? And when it comes to internal and external support, do you have visibility into how much time is spent and the quality of those interactions?

- How much slack do teams have? If you aim for 100% utilization, you’re setting yourself up for failure. How much buffer time do you build into your sprints or roadmaps to account for inevitable interruptions, and are you revisiting these assumptions periodically to ensure they still hold?

- How do you capture and share knowledge? Many interruptions, especially internal support ones, can be reduced through better knowledge sharing. Do you have a centralized repository, internal forums, or other mechanisms where team members can find answers to common questions? How often is this resource updated, and is it easily accessible to everyone?

- Are there more things you could automate or make self-serve? Many interruptions stem from the fact that it’s never seemed worthwhile to automate something or make it self-serve for a non-engineer — it seems easier to just have an engineer do it when it needs doing. If you feel like interruptions are getting in your way, that mindset might not be helping. Automate the things that are pulling your engineers’ attention away from their work.
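For the first question, even a simple interruption log can show which kinds of interruptions cost the most and who absorbs them. This sketch assumes hypothetical self-reported records of person, interruption kind, and approximate minutes lost; the 50% share used to flag someone as disproportionately affected is an arbitrary illustrative threshold.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class InterruptionRecord:
    person: str
    kind: str     # e.g. "meeting", "internal support", "external support", "incident"
    minutes: int  # approximate time lost, as reported by the person interrupted


def tally(log: List[InterruptionRecord]) -> Tuple[Counter, Dict[str, Counter]]:
    """Minutes lost per interruption kind, and per person within each kind."""
    by_kind: Counter = Counter()
    by_kind_person: Dict[str, Counter] = defaultdict(Counter)
    for record in log:
        by_kind[record.kind] += record.minutes
        by_kind_person[record.kind][record.person] += record.minutes
    return by_kind, dict(by_kind_person)


def disproportionate(
    by_kind_person: Dict[str, Counter], kind: str, share: float = 0.5
) -> List[str]:
    """People who absorb more than `share` of the minutes for one kind of interruption."""
    people = by_kind_person.get(kind, Counter())
    total = sum(people.values())
    return [person for person, minutes in people.items() if total and minutes / total > share]
```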

The dynamics of interruptions will change significantly as a company grows and its needs change. By taking an ongoing and proactive approach to these interruptions, software engineering organizations can build more sustainable, efficient, and resilient work environments. When you make your processes interruption-aware, your team can focus on what they do best: building great products.

Setting experience goals

When you get specific about the sources of interruptions that prevent continuous focus, you have something more satisfying than satisfaction surveys: metrics that can be measured reliably and consistently, and therefore metrics you can work to improve. That doesn’t mean you throw out the satisfaction survey; you just treat it as a lagging indicator while you improve the things above. Satisfaction is a measurement you use to validate your work, not something you try to chase week to week.

User experience objectives (UXOs) offer a complementary framework for thinking about developer experience. With UXOs, you agree on acceptable behavior for your tools. As a few very basic examples, you can agree that _git pull_ should never take more than two minutes, saving in an editor should rarely take more than two seconds, and CI/CD checks should return results within 15 minutes.

These UXOs can operate independently, guiding experience goals for individual tools, but they become more powerful in aggregate. When a developer experiences breaches of a certain number of UXOs, you know that the developer is having a bad day. Tracking bad days across the engineering organization provides insights into common pain points and opportunities for improvement.
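The aggregation described above can be sketched in a few lines. The thresholds come from the example UXOs above; the definition of a bad day (breaching at least two distinct UXOs) and all names are assumptions. The sketch also simplifies “rarely” by treating every slow save as a breach; a real UXO might instead use a percentile.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

# Thresholds in seconds, taken from the example UXOs.
UXOS: Dict[str, float] = {
    "git_pull": 120,       # git pull should never take more than two minutes
    "editor_save": 2,      # saving should rarely take more than two seconds
    "ci_checks": 15 * 60,  # CI/CD checks should return within 15 minutes
}

# Assumption: breaching at least this many distinct UXOs in a day is a "bad day".
BAD_DAY_THRESHOLD = 2


@dataclass(frozen=True)
class Observation:
    developer: str
    day: str        # ISO date, e.g. "2024-03-05"
    uxo: str        # key into UXOS
    seconds: float  # measured wait


def bad_days(
    observations: List[Observation], threshold: int = BAD_DAY_THRESHOLD
) -> Set[Tuple[str, str]]:
    """(developer, day) pairs where at least `threshold` distinct UXOs were breached."""
    breached: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for obs in observations:
        if obs.seconds > UXOS[obs.uxo]:
            breached[(obs.developer, obs.day)].add(obs.uxo)
    return {key for key, uxos in breached.items() if len(uxos) >= threshold}


def bad_day_counts(observations: List[Observation], threshold: int = BAD_DAY_THRESHOLD) -> Counter:
    """How many developers had a bad day on each date, for org-wide trend tracking."""
    return Counter(day for _, day in bad_days(observations, threshold))
```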

UXOs also furnish real-time insights into engineers’ experiences, allowing for adaptable goal-setting and innovative problem-solving. Setting goals around UXOs, rather than around completing a specific project or task, lets you work to improve developer experience without being constrained by rigid plans.

Don’t confuse UXOs with service-level objectives (SLOs). Unlike SLO breaches, UXO breaches aren’t necessarily urgent. UXOs define expectations for tool behavior that users can measure their experience against, which can guide a tooling team on where to spend its time.

UXOs focus on meaningful enhancements, fostering a direct connection between the lived experiences of engineers and the people responsible for supporting those experiences. They fill the gap when you’re tempted to set goals based on surveys or other sources of organizational health metrics.

Just because you’re not setting goals for these metrics doesn’t mean that you shouldn’t know what “good” would look like in your organization. Getting to 100% satisfaction or zero regretted attrition is unrealistic, so what would your organization consider success? There’s likely to be a ceiling on overall satisfaction and a floor on regretted attrition, both put in place by your organization’s culture and incentive structure.

What’s next?

In this chapter, we looked at developer experience and the things that influence it, focusing especially on different types of interruptions and their mitigations. We wrestled with the fact that most developer experience data will be qualitative and that many developer experience problems require non-code solutions, and we explored options for setting developer experience goals.

In the next chapter, we’ll look at how to put the lessons of this and previous chapters into practice.

Further reading

- Drive: The Surprising Truth About What Motivates Us, by Daniel H. Pink. Explores the core elements of motivation and how they can be applied in a work environment, including for software developers.

- Flow: The Psychology of Optimal Experience, by Mihaly Csikszentmihalyi. Discusses in detail how uninterrupted focus allows people to reach a state of heightened efficiency and satisfaction in their work.

- Peopleware: Productive Projects and Teams, by Tom DeMarco and Timothy Lister. A classic in the software development field, focusing on the human side of software development and team dynamics.

- Deep Work: Rules for Focused Success in a Distracted World, by Cal Newport. A guide on how to achieve focused and productive work, which is particularly relevant for developers dealing with complex tasks and needing deep focus.

- Agile Retrospectives: Making Good Teams Great, by Esther Derby and Diana Larsen. Provides tools and techniques for effective agile retrospectives, emphasizing continuous improvement and problem-solving throughout a project’s life.

- Site Reliability Engineering: How Google Runs Production Systems, edited by Betsy Beyer, Chris Jones, Jennifer Petoff, and Niall Richard Murphy. An in-depth look into Google’s approach to building, deploying, monitoring, and maintaining some of the largest software systems in the world, including incident management processes.

- The Field Guide to Understanding “Human Error”, by Sidney Dekker. While not exclusively about software engineering, this book is highly regarded in the Learning From Incidents community. It offers insights into how to understand and learn from human errors in complex systems.