What good Continuous Integration (CI) looks like

Continuous Integration (CI) is the process of integrating work from multiple developers, and assuring its quality automatically. It’s an integral component of any modern development effort, as it allows you to shift left the discovery of various problems. The cost of fixing bugs (or merge conflicts, or any number of other problems) only tends to increase over time. A CI pipeline helps you spot and fix those problems as quickly as possible.

Having CI is table stakes these days. Imagine pulling main without knowing if it compiles or not? Yep, sounds ridiculous.

At the same time, CI pipelines are a common cause of developer frustration. So clearly there’s room for improvement.

In this blog post, we’ll go through the five properties that make for a great CI experience — speed, reliability, maintainability, visibility and security — and some concrete tips on how to get there. We’ll use GitHub Actions as an example, but the same principles apply across most other CI providers you might end up using.

1. Speed

Probably the most common developer complaint you’ll hear about CI is that it’s slow. Indeed, we developers are busy people, and we hate waiting. Waiting means you end up doing something else in between, and multi-tasking is poison to any kind of “flow state”.

So what tricks do we have for making our CI blazing fast?

Caching

If your CI does the same expensive operation many times, you want to make sure you only pay that price once. A common example is installing external dependencies: an npm install on a large project can take a lot of time, as you pull dependencies in over the network.

GitHub Actions supports this via the built-in cache action. It allows you to define a cache key — a hash of your exact dependency versions, for instance — so that whenever that same cache key has been seen before, we can skip the full install process and just reuse the results from last time. And this reuse isn’t limited to steps within the same CI run: until your dependencies change, every CI run can enjoy that speed boost.
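To make the idea of a cache key concrete, here is a minimal shell sketch of the same principle outside of GitHub Actions. It assumes GNU coreutils (`sha256sum`), and the `deps-` prefix and the local cache directory are hypothetical stand-ins for whatever your CI provider’s cache uses.

```shell
#!/usr/bin/env bash
# Derive a cache key from the exact contents of a lockfile:
# the key changes if, and only if, the pinned dependencies change.
cache_key() {
  local lockfile="$1"
  printf 'deps-%s\n' "$(sha256sum "$lockfile" | cut -d ' ' -f 1)"
}

# Report whether an entry for the current key already exists.
# cache_root is a hypothetical local directory standing in for the CI cache.
cache_status() {
  local lockfile="$1" cache_root="$2"
  if [ -d "$cache_root/$(cache_key "$lockfile")" ]; then
    echo hit    # safe to skip the full install and reuse the stored result
  else
    echo miss   # run the full install, then store the result under this key
  fi
}
```

Because the key is derived from the lockfile contents, any dependency change produces a new key and therefore a fresh install.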

It’s worth noting that this requires the operation being cached to be deterministic. Determinism is an important property of any CI pipeline, and there’s a whole section on that below.

Parallelization

As your app grows, so does the number of automated checks and tests on your CI. This is great! Who doesn’t love good test coverage? But it also means there’s more for the CI to do during each run, meaning those runs tend to get slower over time.

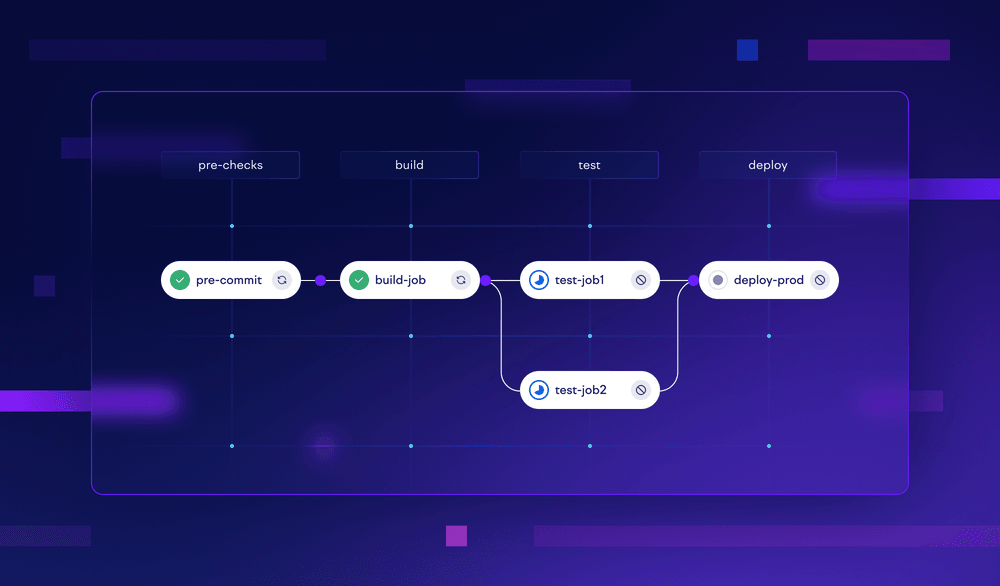

One of the simplest ways to make things faster is to chop your CI process up into independent chunks, and run those chunks in parallel.

If your CI does clearly separated steps (such as linting, building, and testing) sequentially, it’s often trivial to configure those to be run in parallel instead. GitHub Actions makes this really easy with the needs property, which you can use to affect which parts of the CI process can run in parallel, and which need to run sequentially.

Sometimes your CI steps are uneven in size: it’s not uncommon for all the other steps to take a minute or two, while your monster of a test suite takes a full 30 minutes. So running the fast steps and the slow step in parallel isn’t going to help a lot; the whole process will still take about 30 minutes.

Luckily, individual steps can often also be chopped up and parallelized. If your test suite is taking a long time to run, consider splitting your test cases into a few chunks, and then running those chunks in parallel. GitHub Actions makes this easy with the matrix strategy: it lets you run the same step multiple times, in parallel, with a different parameter. For instance:

```yaml
strategy:
  matrix:
    chunk: [1, 2, 3, 4, 5, 6]
```

Each step can then list your test cases, pick every sixth one starting from position ${{matrix.chunk}}, and run only those test cases. This can turn a 30-minute test suite into a five-minute one (barring some variance between chunk runtimes, of course).
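As a rough sketch of that selection logic, the helper below keeps every Nth line of a test file listing. The function name and its arguments are hypothetical; in a workflow you would pass in the value of `matrix.chunk` and the total number of chunks.

```shell
#!/usr/bin/env bash
# Read a list of test files on stdin (one per line) and print only those
# belonging to the given chunk. Chunks are numbered from 1, as in the matrix.
select_chunk() {
  local chunk="$1" total="$2"
  awk -v chunk="$chunk" -v total="$total" '(NR - 1) % total == chunk - 1'
}
```

Sorting the file list before piping it in keeps the assignment of tests to chunks stable between runs, so the same test always lands in the same chunk.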

But how many parallel chunks should you go for? Would your test suite complete in one minute with 30 chunks?

The more you parallelize your CI process, the more your runtime will be dominated by setup costs (runner startup, fetching files, code compilation, process startup, etc). Taken to an extreme, at any given point in time while your CI is running, you’re probably running setup code, not any of your actual automated checks and tests.

If running servers were free, this still wouldn’t be a problem. But alas, it is not. And while there’s certainly an argument to be made for paying a bit extra so that humans have to wait around less, it’s not uncommon for monthly CI bills to be in the hundreds of thousands at larger organizations.

As with most things in life, the correct answer lies in neither extreme: your correct parallelization factor lies somewhere in between. Usually, if you add chunks, and monitor your CI runtimes (in terms of humans waiting for it), you’ll start seeing diminishing returns after some value. That’s probably a good starting value for your parallelization factor. This may change over time, at which point you can add another chunk, for example.
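A back-of-the-envelope model can make the tradeoff visible. The sketch below assumes perfectly even chunks and a fixed setup cost per runner; the two-minute setup figure is an illustrative assumption, not a measurement.

```shell
#!/usr/bin/env bash
# Estimate human wait time and total machine time for a given chunk count.
# All durations are in minutes.
estimate() {
  local chunks="$1" setup_min="$2" test_min="$3"
  awk -v n="$chunks" -v s="$setup_min" -v t="$test_min" 'BEGIN {
    wait = s + t / n      # every chunk pays setup, then runs its share of tests
    machine = n * wait    # total runner time you are billed for
    printf "%d chunks: wait %.1f min, machine time %.1f min\n", n, wait, machine
  }'
}

for chunks in 1 6 30; do
  estimate "$chunks" 2 30
done
```

Under these assumptions, going from 6 to 30 chunks cuts the wait from 7 to 3 minutes, but more than doubles the machine time (from 42 to 90 minutes).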

Beefier machines

Engineers love horizontal scalability. We know that you can’t get to web scale vertically.

But despite this common wisdom, sometimes it makes sense to just get a bigger machine. This advice isn’t limited to CI pipelines, but applies to them just as well.

The primary reason is reliability: running on one machine will always be simpler than running on and coordinating between multiple machines. We’ll discuss reliability more in a bit.

The secondary reason is cost: one big server may not cost more than a bunch of smaller ones. In terms of the previous discussion on parallelization, running on just one server allows us to also pay our setup costs just once. For instance, when running unit tests, you’re often able to compile your code once, and then run your test cases off of that in multiple threads or processes.

GitHub Actions supports machine selection via the runs-on option, which allows you to choose from a variety of machine types. You can also have different parts of your CI process run on different machine types. So if your test suite completes in five minutes on a beefy (but expensive) machine, but your other steps take at most five minutes on the default machine, a price-conscious engineer might as well keep those other steps running on the cheaper ones, because they won’t increase the total time humans need to wait around.

Fast-tracking reverts

The great thing about CI is that it always runs — it’s not up to you to remember to run your automated checks and tests before merging your PR. But there are cases when you may want to make an exception.

The main one is disaster recovery. If you’ve broken something in production, and you’re doing a revert PR, watching your CI do its thing can feel like an eternity. Especially if you’re reverting to a state of the codebase which has already passed your automated QA before, and you know it’ll pass again.

The above scenario is actually possible to reliably detect, by comparing the current state of the codebase to ones which have recently passed your CI. If you find a revision which has the exact same code as you now have (as is common with reverts), you can safely skip most of your automated QA, and give that revert PR its green badge significantly faster. Doing this fast-tracking check at the beginning of each CI run takes a few extra seconds, but can potentially save a lot of waiting time when it matters most.
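One way to sketch such a check is with Git tree hashes, which are identical whenever the file contents are identical, regardless of commit history. The file of passed trees is a hypothetical piece of state that you would need to persist between runs (for instance in your CI cache); the function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Tree hash of a revision: depends only on file contents,
# not on the commit message, author, or history.
tree_of() {
  git rev-parse "${1:-HEAD}^{tree}"
}

# Call after a fully green run to remember this exact state of the codebase.
record_passed_tree() {
  local passed_file="$1"
  tree_of HEAD >> "$passed_file"
}

# Succeeds (exit code 0) if HEAD has the same contents as a revision
# that has already passed, meaning most checks can be skipped.
already_passed() {
  local passed_file="$1"
  [ -f "$passed_file" ] && grep -qxF "$(tree_of HEAD)" "$passed_file"
}
```

A revert commit has a new commit hash but the same tree hash as the revision it restores, which is why the comparison uses trees rather than commits.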

It should also be noted that this should not be your primary disaster recovery mechanism. With properly separated CI and CD (Continuous Deployment), reverting to a known good revision should always be fast, and not depend on the CI pipeline at all. But CD strategies and fast rollbacks are a topic for another blog post!

Failing fast

Some steps are fast, some are slow. Generally you want feedback as soon as possible, so you want to make sure to run the fast ones first. (Though of course everything runs “first” if your pipeline is parallelized.)

Some kinds of failures mean the rest of the steps aren’t going to be particularly useful. For instance, a compilation error might make all other steps fail too. On the other hand, a linter error might mean the other steps can still execute normally.

So you should fail fast, but only in the sense of providing feedback to the developer as soon as possible. By letting the rest of the steps keep running even after an initial failure, by the time the developer has fixed that trivial compiler/linter issue, they might already see that the test suite was green. So they can push the trivial fix, and confidently request a review from their team right away, even if the CI will have to run once more for the newly pushed fix. You can be reasonably sure it’ll all be green in the end.

2. Reliability

If slowness is the most common source of developer CI anxiety, unreliable CI pipelines are easily the second most common one.

At the core of unreliable CI pipelines is nondeterminism: for the given input (i.e. source code), do you always get the same result? If not, your CI is nondeterministic, and this usually manifests as random failures, frustrated developers, and general erosion of trust in the safety nets provided by automated QA processes. A build failed? “Pfft, yeah, it does that, it means nothing.”

But how do you set yourself up for success in terms of CI reliability?

Dependency pinning

Modern software builds on top of tons of dependencies. The Node.js ecosystem is particularly notorious for having massive dependency trees, where every little thing needs to be a library.

Even if your package.json contains exact versions for your dependencies, they also have dependencies of their own, and might not have locked them down. Luckily the Node Package Manager has for a long time now created a package-lock.json for you automatically, which makes sure your transitive dependencies won’t change on their own.

Whichever dependency manager you use, make sure it does the same.

Clean initial state

You want your CI workflows to start from a clean slate, unaffected by past runs. If you’re using a SaaS CI product, such as GitHub Actions, that will be the case by default.

The same applies to any test databases or other support processes you need as part of your CI runs: it’s always best to create new ones, and throw them away at the end of the run. Trying to clean up a test database after a CI run is a recipe for a bad time.

Notably, this advice somewhat clashes with the previous one about caching the results of expensive operations: is each run really starting from a clean slate if it’s using data from a previous run? It’s usually a good tradeoff, just make doubly sure whatever you’re caching only gets reused when appropriate. In GitHub Actions, this means configuring your cache key correctly.

External services

Much in the same vein, you should avoid depending on external services in your CI, such as APIs provided by other teams in the organization.

There’s certainly an argument to be made that, if whatever you’re testing in your CI will depend on service X in production, you should also check for that compatibility as early as possible. But this will also be a source of non-determinism in your CI.

If you want to include such integration testing as part of your CI process — and sometimes you really should! — it’s a good idea to keep those tests separate from the ones that block your continuous integration. It may be better to block your CD pipeline on such failures, not prevent developers from merging PRs.

Going over the network

Networking is poison to determinism (much like multithreading). Ideally, your CI should only depend on things running locally, on the CI machine itself.

Often this can’t be avoided; it’s common to need things such as test databases and dependencies from over the network. But when possible, at least bring those closer to the CI. For instance, consider running your test database as a local container, as opposed to using the one from your cloud provider.

Timing and ordering assumptions

CI servers tend to run under quite variable conditions. They might be running on leftover capacity from a public cloud, might have really noisy neighbors, and often are quite underpowered compared to the machines used by developers when running their QA code locally. So code that runs a particular way on your local machine, might run quite differently on your CI.

Also, CI pipelines get a lot of runs. It’s not uncommon for a decently sized organization to run them tens of thousands of times every day. Given this many iterations, anything that’s unlikely to happen on any individual run, might still happen to someone every day.

Assuming some process is ready just because it’s gotten ample time for getting ready is one of the most common ways you can go wrong in your CI process. For instance, don’t assume your test database is up before starting your test suite, but probe its port for readiness.
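A readiness probe can be as small as the sketch below. It assumes your CI steps run under bash, since `/dev/tcp` is a bash feature rather than a real file; the function name and the default timeout are arbitrary choices.

```shell
#!/usr/bin/env bash
# Block until host:port accepts TCP connections, or give up after a timeout.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}"
  local deadline=$((SECONDS + timeout))
  # The subshell opens a connection and closes it again when it exits.
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$SECONDS" -ge "$deadline" ]; then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
  done
}
```

You would call this right before the test suite starts, and fail the step if it returns non-zero. Note that an open port only shows the process is listening; if your database ships its own readiness command, that is likely a more precise probe.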

Honorable mention: flaky tests

This post is about CI pipelines, but in reality, the vast majority of CI reliability issues and developer frustration don’t stem from the pipeline itself, but from the unreliability of the test cases it runs. Tests that usually pass, but sometimes (seemingly) randomly fail, are referred to as “flaky”, and are the bane of many a developer’s existence.

Reducing the flakiness of a test suite would be another great topic for a blog post of its own, but suffice to say many of the principles discussed here apply equally within test suites: you want to start from a clean initial state, you should not rely on assumptions of timing and ordering, and you often need to make tradeoffs between realism and reliability.

It’s also worth pointing out that a high failure rate on your CI doesn’t necessarily imply flakiness: maybe you’re just shipping fast, and the CI is catching a bunch of mistakes. That’s working as intended! To assess flakiness, you should rather monitor how often your developers rerun the CI for the same git revision, hoping for a different result. Note that you may see this behavior even without actual flaky tests. It’s always an indication of distrust in the quality of your CI pipeline, and can be a good indication that something could be improved. Frequent reruns are expensive, because they make humans wait around for the CI.

3. Maintainability

If your CI configuration is a horrible mess, it’s unlikely anyone wants to touch it if they can avoid it. You probably won’t be receiving many small updates and improvements over time.

Here are some considerations for ensuring your CI pipeline has a great developer experience, and is a joy to maintain.

CI as code

With the prevalence of Infrastructure as Code, this almost goes without saying, but you should also define your CI pipelines as code. Your CI is mostly used by engineers, who often prefer editing files over clicking around a web UI. GitHub Actions for instance is configured via YAML files committed alongside your other code in the repository.

Configuring your CI pipeline as code has multiple benefits, such as:

- You can modify it with the tools you already know (i.e. your editor or IDE)

- You can keep it in version control, so you’ll always know if and when something was changed

- You can use the same peer review processes for the CI config as you use for the rest of your codebase

- You can easily attach comments explaining why some config is the way it is (not the case for most UI-based configuration)

Avoiding copy-pasta

CI systems have varying support for modularization. Some support a full-blown programming language for pipeline definition. Most are defined declaratively, with a configuration language such as YAML.

GitHub Actions uses YAML, and for the longest time supported almost no mechanisms for step reuse. It was not uncommon to simply copy-paste large swaths of YAML to implement parallelization, for instance.

These days, GitHub Actions supports sharing and reusing both individual steps and entire pipelines (Actions and Workflows, in GitHub parlance), both within an organization, and with the global community.

As mentioned before, test matrices can also be used to run multiple copies of the same step.

Consider making use of these mechanisms to make your CI pipelines easier to maintain and understand.

Extracting scripts

For some things you can just use the built-in functionality of your CI provider. For others, you can reuse a community plugin. But invariably some things are unique to your team, and you end up writing some shell scripts.

Whenever your script ends up taking more than a handful of lines, consider extracting it from your pipeline definition code, and maintaining it as a standalone shell script instead. This is nice for several reasons, such as:

- Getting proper syntax highlighting (as opposed to bash embedded in YAML 😱)

- Simple local testing (you can just invoke the script locally)

- Letting you run static analysis tools to find potential bugs (not that anyone’s ever made mistakes with shell scripts… 😇)

4. Visibility

Sometimes things will fail on your CI. Depending on the overall stability of your CI pipeline, you may find this more or less of an understatement.

How do you make sure you’re able to quickly identify problems, fix them, and keep them from happening again?

Showing your work

Most CI systems will do this for you automatically, but if not, make sure yours prints out commands as it runs them.

This might seem like a trivial detail, but whenever something goes wrong, it might save you hours of debugging to immediately spot the incorrect argument being fed into a script or a reusable step definition, or some variable expansion not working where you expected it to.

If you’ve packaged parts of your CI process into reusable shell scripts (good on you!) you can still do the same. With bash, for instance, set -x will print each command in your script as it’s being run.
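As a small illustration, here is a hypothetical helper that traces its own commands. The function name, the bundle naming, and the echo standing in for an upload are all placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical CI helper that shows its work while it runs.
upload_bundle() {
  set -x                       # trace: print each command to stderr before running it
  local bundle="build-$1.tar"
  echo "uploading $bundle"     # stand-in for the real upload command
  { set +x; } 2>/dev/null      # stop tracing, without tracing the "set +x" itself
}

upload_bundle 42
```

The trace shows commands after variable expansion, so a wrong or empty value for the bundle name would be visible directly in the log.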

Failure notifications

When something fails on the CI, the person whose change caused that failure probably wants to know that right away: that’s when you have the most context in your head, and fixing the problem will be easiest. But we also tend to do other things while the CI works, and might not remember to immediately check back once the CI pipeline has finished running.

To make sure the right person knows about failures as soon as they happen, consider setting up personal notifications on Slack (or whatever communication tool your team uses). Ideally, you should include the relevant details of the error right there in the notification; sometimes a filename and a line number is enough to jump directly into your editor and fix the issue, not even requiring you to click on a link that takes you to your CI system’s log output.

Runtime metrics

As mentioned before, developers are busy people who hate waiting. It’s important to collect timing information from your CI runs, so that you can identify where the bottlenecks are. Usually anecdotes aren’t valuable — a slow part of a pipeline doesn’t really matter if it’s run by one engineer, once per month. But even small speedups quickly add up if hundreds of developers get to enjoy them multiple times per day. So you should invest in tooling that lets you find the spots where the smallest improvements can have the biggest impact.

CI runtimes also aren’t static. As the codebase grows and changes, things tend to slow down. This usually happens over time periods long enough for the boiling frog effect, where you never quite notice the slowdown, but eventually find yourself spending way more time waiting than you’d like to.

Because there might not be any explicit threshold where things become unbearable, it’s really easy to keep kicking the can down the road: I mean, you always have that one super important thing to finish first, right? Having runtime metrics over a longer time period can help you notice when this happens, and have the right conversations with your team about setting time aside to make everyone’s lives better. Even in environments where your team might not be empowered to make investment decisions like this on their own, being able to point to hard data showing that you have to wait around 3 times longer than you did 6 months ago should help convince any Pointy-Haired Boss that faster CI is a good investment (they should be acutely aware of the value of engineering time).

Failure metrics

Similarly, you should invest in good visibility into the failure metrics of your CI pipeline. Fast runtimes won’t matter if you regularly need to rerun your pipeline multiple times to get it to pass.

Flaky tests can be particularly hard to get a handle on, because they’re sporadic by nature, usually pretty rare (though with enough runs, even rare things become common), and by the time they first make their appearance, the developer who unknowingly introduced them might’ve already moved on to other things.

But similar to runtime improvements, there’s often low hanging fruit, and fixing the top 3 of your 50 flaky tests might reduce total failures by 90%. You just need failure data for identifying that top 3, as opposed to anecdotes, like “Yeah, that test always fails.”
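If you can export failure data as a plain list, even a few lines of shell will surface that top 3. The sketch below assumes a hypothetical input format of one failed test name per line (one line per failure, names without spaces).

```shell
#!/usr/bin/env bash
# Read failed test names on stdin and print the N most frequently failing ones.
top_failures() {
  local n="${1:-3}"
  sort | uniq -c | sort -k1,1nr -k2 | head -n "$n" | awk '{ print $2 ": " $1 }'
}
```

Feeding it a few weeks of failure logs gives you a ranked list to prioritize from, rather than relying on whichever test people complain about the loudest.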

Bringing data where the audience is

Your CI pipeline might produce all kinds of useful metrics besides the ones mentioned above. Things like bundle sizes, test coverage numbers, or accessibility scores are often produced as part of your CI runs.

Consider making that data available where it’s most immediately useful: the PRs that affect those metrics. A common approach is to have the CI post a comment to a PR once it finishes its CI run, containing useful information such as the above. Even better is being able to compare them with their past values: if you notice that your PR doubles your bundle size, maybe you misconfigured something, and can take another look?

Unlike the failure notifications mentioned above, which are primarily useful for the developer working on the PR, this data is equally useful for the person reviewing it: if you notice the test coverage going down due to this PR, it might be a good trigger to politely ask whether the PR should have included a test or two.

If your CI is able to produce a preview build for the PR in question, this automatic comment is also an excellent place to include a link to that preview build, for easy access to both the PR owner and its reviewers.

5. Security

Security isn’t an optional part of modern software engineering. Good security is table stakes, great security is the goal. It’s also something you can’t just slap on at the end: security should be baked into the development process throughout. Your CI pipeline is great for ensuring some things always happen, even if you’re busy and like to move fast.

Here are some things you can do to help ensure the security of your CI pipeline itself, and how it can help contribute to the security of whatever it is you’re building with it.

Secrets management

Your CI system often needs access to other systems. At the risk of sounding obvious: don’t commit secrets (such as passwords, API keys or SSH keys) into your version control. And if you accidentally do, remember to carefully scrub your history: just undoing the commit is usually not enough.

Most CI services have a dedicated mechanism for managing secrets, with features such as write-only access via the configuration UI, and automatically scrubbing secrets from console output. Use that instead.

Be mindful of using environment variables for passing secrets into processes you run on your CI. The process environment is automatically inherited by child processes, commonly printed out as part of debug info, or even shipped out as part of error monitoring. If you must use environment variables for passing secrets (sometimes it’s the only input supported by your program), only set them on the steps of your CI process that actually need them, and delete or overwrite them as soon as you’ve used them.

Similarly, be mindful of passing secrets in via files. Even though the runtime environments of modern CI services are ephemeral, the same working files usually persist between the various steps of the pipeline. If you use steps developed by a third party (as is common with GitHub Actions for example), be aware that their code will have access to the same files you do. If you must use files for passing secrets (again, sometimes the only option available to you), remove them as soon as you’ve used them.

Command line arguments are also not ideal for passing in secrets, as they might be visible to other users of the system (via process management tools), and might be included in error reporting (similar to environment variables).

Generally the most secure way to pass in secrets is via the standard input, but unfortunately that’s often not supported by whatever program you must use as part of your CI process. So choose the method you need to use for passing in secrets, keeping the caveats listed above in mind.
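To illustrate the standard input approach, the sketch below uses a made-up function as a stand-in for a real tool that reads a secret from stdin (some tools offer this, such as `docker login` with its `--password-stdin` flag). The function name and its output are hypothetical.

```shell
#!/usr/bin/env bash
# Stand-in for a program that accepts a secret on standard input.
login_with_stdin() {
  local secret
  IFS= read -r secret
  # Never print the secret itself; only confirm that something arrived.
  [ -n "$secret" ] && echo "received secret of length ${#secret}"
}

# Usage: printf is a shell builtin in bash, so the secret never shows up
# in another process's argument list:
#   printf '%s\n' "$API_TOKEN" | login_with_stdin
```

Passed this way, the secret is not part of the environment inherited by child processes, and is not written to a file that later steps could read.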

Allow-listing files

You should make sure whatever artifacts (such as website bundles or Docker images) you’re building as part of your CI process don’t include unwanted files. At best, they’ll bloat the size of the build artifact. At worst, they’ll include secrets you shouldn’t disclose.

This might seem like one of those “just don’t make mistakes” types of advice, but one practical thing you can do is to always allow-list (as opposed to deny-listing) files going into your build artifacts. For instance, if you’re building a static website, but don’t want to include your sourcemaps, instead of this:

```shell
cp build/* dist/ # copy everything
rm dist/*.js.map # remove specific file
```

Consider doing this:

```shell
cp build/index.html dist/ # copy only the file you specifically want
cp build/*.css dist/ # or very specific kinds of files
cp build/*.js dist/
```

It’s annoying to be less concise, but being explicit about what you intend to include helps ensure unwanted files don’t tag along. Some of those unwanted files may not be there yet, but will appear later, as someone adjusts your build configuration.

Another good practice for avoiding unwanted files in build artifacts is never building them at the root of your working copy, as the root often contains plenty of things you don’t want to include (sometimes even files used for passing secrets; see above). Instead, consider:

- Explicitly picking the files you want to include in your artifact

- Copying them to a different directory (such as dist in the above example)

- Moving to that directory

- Building the artifact there
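The steps above can be sketched as a single shell function. The file patterns and the choice of a tar archive are illustrative assumptions; the function name is made up, and the output path needs to be absolute, because the archive is created from inside the staging directory.

```shell
#!/usr/bin/env bash
# Build an archive from an explicit allow-list, staged outside the working copy.
build_artifact() {
  local src="$1" out="$2" staging
  staging="$(mktemp -d)" || return 1

  # Steps 1 and 2: explicitly pick the files to include,
  # and copy them to a separate, empty directory.
  cp "$src"/index.html "$src"/*.css "$src"/*.js "$staging"/ || return 1

  # Steps 3 and 4: move to that directory and build the artifact there.
  (cd "$staging" && tar -czf "$out" .) || return 1

  rm -rf "$staging"
}
```

Since the staging directory starts out empty, anything not named in the allow-list (sourcemaps, dotfiles, files used for passing secrets) cannot end up in the archive by accident.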

Separating CI and CD

Security benefits from depth, in that no single obstacle might be insurmountable for an attacker… but the more obstacles you add, the less likely anyone is to defeat all of them. One of the obstacles you can add to your CI pipeline is to keep it isolated from your CD one.

Your CI runs lots of third party code, such as build tools, dependency install scripts, community-provided CI steps, etc. Should one of those get compromised, an attacker can be assumed to have access to everything your CI pipeline has access to. Usually that’s not too valuable in itself, as the juicier targets are things like user information and production databases. But if your CI pipeline is allowed to connect to your production infrastructure (in order to deploy new code), it will be one permission misconfiguration away from connecting to your production database.

Ideally, your CI pipeline won’t have any access to your production infrastructure. Instead, it should:

- Produce a build artifact (such as a website bundle or Docker image)

- Upload it to a registry

- Trigger a separate CD pipeline run, which takes the new code into production

It could be argued that this is pointless: if an attacker has compromised the machine making builds (i.e. your CI), won’t it just build its attack into the artifacts the CI uploads to the registry? Yes, but again, this places significant new obstacles on the attackers’ path, such as:

- Having to figure out the specifics of your build and deploy process, to be able to inject a payload that does something useful once run in production (automated attacks are unlikely to do this, buying you time).

- Production workloads are usually highly containerized, and may still not have access to the really juicy targets (following the principle of least privilege).

- A CI pipeline usually has unfettered access to the public internet (for installing dependencies etc), but production might not: security hardened environments often have tight egress rules. This means that even if an attacker were able to connect to your production database, they might not have an effective way to phone home, or exfiltrate any data.

- A CD pipeline deploying compromised code likely won’t compromise the CD pipeline itself, meaning the attacker still won’t have access to the keys you use for deployment (as those keys might have privileges for more than just making deployments).

None of the above are guaranteed to stop an attack before it does real damage, but any of them might.

Scanning for vulnerabilities

Automated vulnerability scanners aren’t a panacea, and not exclusive to CI pipelines, but they are one additional way of adding depth to your security. Doing vulnerability scanning as part of your CI pipeline helps make sure it regularly happens.

Scanning your build artifacts for vulnerabilities before handing them off to your CD pipeline for deployment can also help contain the damage a compromised CI pipeline can do.

Static analysis tools can also help spot some categories of security issues. For instance, ESLint is a popular tool in the Node.js ecosystem, and has a rule plugin just for this.

Automating library updates

Finally, one of the best ways in which your CI can help improve the security of the application you’re developing is by helping you integrate security updates to your dependencies in a timely manner. GitHub has their built-in dependabot for this, and various third party services also exist.

It’s often a good idea to have separate cadences configured for security updates and regular dependency updates: while security updates should be considered as soon as possible, with other kinds of updates it’s often perfectly fine (or even advisable) to not be the first team in the world to install a something-zero-zero version of a library.

Wrapping up

In this post we laid out the five properties that make for a great CI experience — speed, reliability, maintainability, visibility and security — and shared concrete tips for excellence in each area.

The product we’re building — Swarmia — also happens to have great tools for helping teams with their CI experience: visibility into performance metrics and failure rates being current product features, soon expanding to cover things like flaky test analytics and custom metrics.
