Reduce bias in retrospectives with better data

Many software organizations run retrospectives based on mostly subjective data collected from the retrospective participants. Studies show this leads to skewed insights that often miss broader organizational goals. One solution is to add a step to your retrospectives in which you present relevant hard data gathered from a wide array of data sources.

I recently wrote a blog post on well-researched advice on software team productivity that included a summary of a few excellent studies on the effectiveness of retrospectives. These studies highlight how teams often rely too much on the subjective personal experiences of the team members to collect data for the insights and actions generated from the retrospectives.

"[Retrospective] discussions might suffer from participant bias, and in cases where they are not supported by hard evidence, they might not reflect reality, but rather the sometimes strong opinions of the participants."

Retrospectives focused on the personal experiences of the participants often work as great team-building events. The challenge is that retrospectives based only on subjective data collected from the participants are susceptible to multiple cognitive biases like recency and confirmation bias.

Because these retrospectives often miss crucial pieces of data, they may produce false insights. False insights lead to actions that are, at best, a waste of time and, at worst, a hindrance to the organization's goals.

What a data-driven retrospective looks like

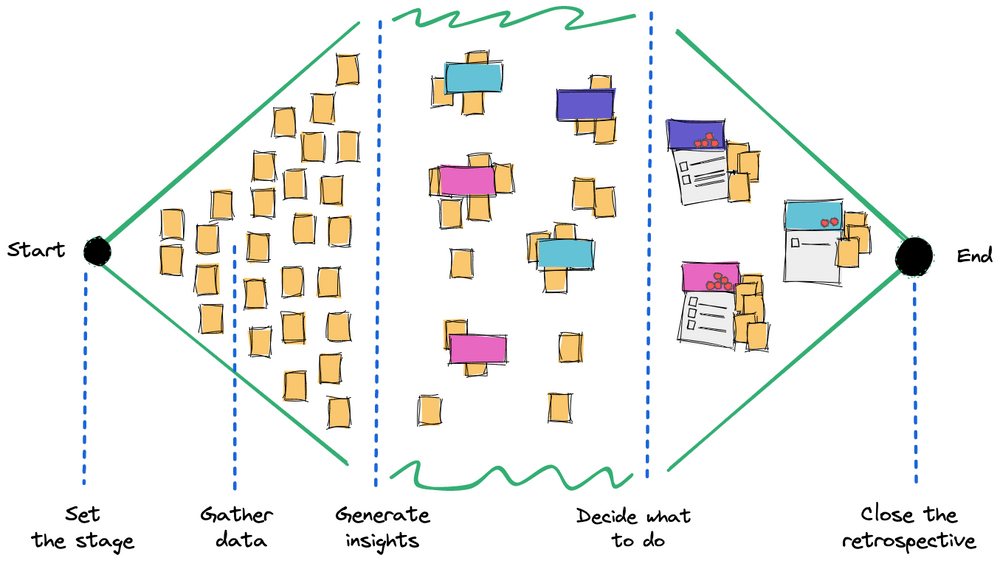

There is a multitude of ways you can run a retrospective. This blog post will use the 5-step retrospective structure from the book "Agile Retrospectives." It is one of the most well-known options and is a great, generally applicable approach to running a retrospective. Most retrospectives we've seen teams run use at least some parts of its structure.

The five steps of the structure are:

- Set the stage: Help people focus on the work at hand. Reiterate the goal. Create an atmosphere where people feel comfortable discussing issues. Get everyone to say a few words.

- Gather data: Collect the data you need to gain useful insights. Start with the hard data like events, metrics, features, or stories completed. Continue with personal experiences and feelings.

- Generate insights: Ask "why?" Think analytically. Examine the conditions, interactions, and patterns that contributed to successes or failures. Avoid jumping to the first solution, and consider additional possibilities to make sure you address the actual root cause.

- Decide what to do: Pick the top items from the list of improvements. Decide the actions you are going to get done. Assign the actions to individual people.

- Close the retrospective: Decide how to document the results, and plan for follow-up. Ask for feedback about the facilitation/retrospective.

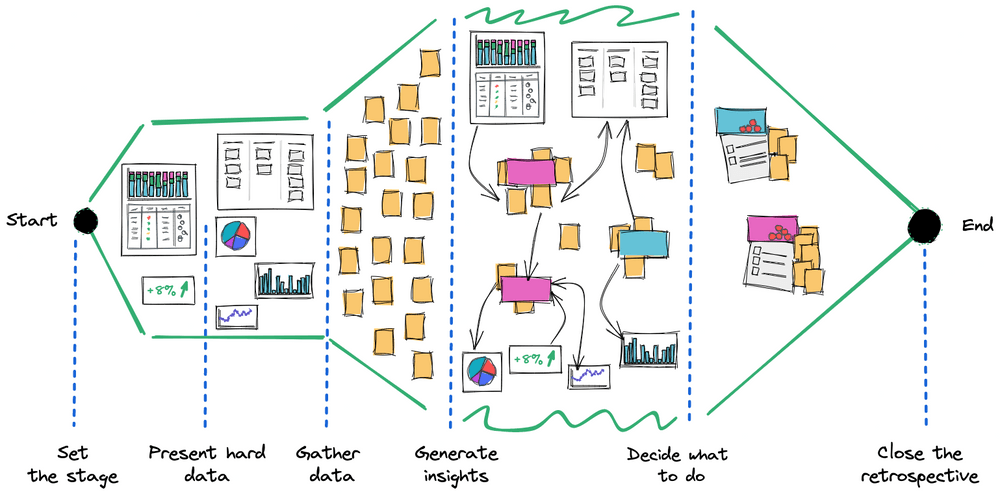

When running a data-driven retrospective, our focus is on the second step, "gather data." You should start the step by first gathering "hard data like events, metrics, features, or stories completed" and then continue to the personal experiences and feelings of the participants.

However, many teams more or less skip the first part. I've seen dozens of retrospectives where the organizer rushed to ask the group questions like "What went well? What could be improved? What went badly?" without first thinking about the context.

To fix the situation, we can add an explicit step that we'll call "present hard data." Gathering hard data in the same step as personal experiences and feelings is a historical relic. Traditionally, retrospectives have been promoted by "agile people" and consultants, that is, by external facilitators. Thus, most retrospective advice is written for these kinds of "outsiders."

An outsider needs to start with "gathering all the data" because they don't often have access to it outside the retrospective. If you're running a modern software organization that employs retrospectives, you have no reason to do a separate data inventory every time. Your "hard data sources" are not likely to change often.

When we add the "present hard data" step to our retrospectives, we get four clear benefits:

- Better focus: We don't need to spend time trying to gather the hard data from the participants. Spending a few minutes going through the most important data is enough. Then, the "gather data" section can focus on getting people to share their personal experiences and feelings.

- More reliable data: Your data quality is better because the data is not based on only what people remember from the past.

- Better insights: Consistent hard data helps the participants generate better insights by reducing personal biases and making it easier to identify common patterns. This ultimately makes it more likely for the team to determine the actual root causes.

- Continuous evaluation of your hard data: The step works as a great "mini-retrospective" on what hard data your team uses to drive its decision-making. If the presented hard data feels irrelevant for the team's continuous improvement, it is a sign that either your hard data or your team may be missing something.

How to get started with data-driven retrospectives

To get started with data-driven retrospectives, take inventory of the "hard data" sources you already have. Many organizations have KPIs, OKRs, or similar to make sure the teams in the organization are prioritizing the right things business-wise. You might also have insights from your version control system, like GitHub, and your issue tracker, like Jira. Sales and customer success data is also often valuable. If you're already running developer surveys, you can run retrospectives using survey response data too.

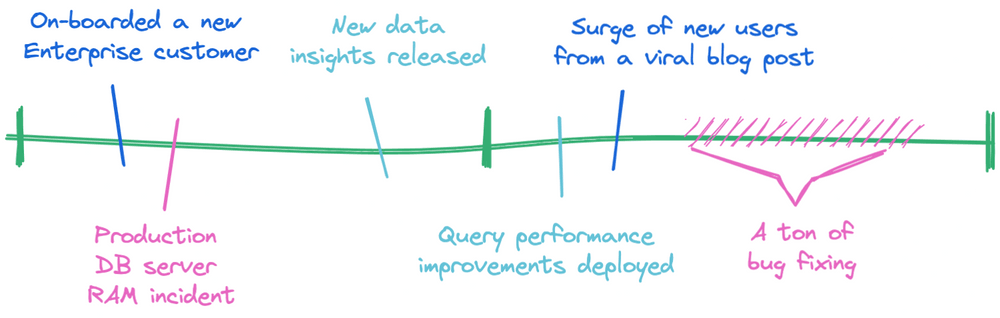

Then, think about what data you're currently not collecting but would need to get a holistic picture. For example, the SPACE framework is an excellent tool for this. Overall, your goal is to provide the people in the retrospective with an accurate timeline of what happened within the time period you're focusing on (e.g., last iteration or sprint).

It can be hard to correctly remember and jointly discuss past events in a constructive way. Fact-based timelines that visualize a project's events offer a possible solution.
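As a rough illustration of what a fact-based timeline could look like in practice, here is a minimal Python sketch that merges events from several hard data sources into one chronological list for the period under review. The `Event` type, the `build_timeline` function, the source names, and the sample events are all hypothetical and made up for this example. In a real setup, you would load the events from your own tools.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    day: date
    source: str   # hypothetical source label, e.g. "deploys" or "incidents"
    summary: str


def build_timeline(sources, start, end):
    """Merge events from all sources and keep those within [start, end]."""
    events = [
        event
        for source_events in sources
        for event in source_events
        if start <= event.day <= end
    ]
    # Sort by day first, then by source name so same-day events stay grouped.
    return sorted(events, key=lambda event: (event.day, event.source))


# Made-up sample data for a two-week iteration.
sprint_start = date(2024, 3, 4)
sprint_end = date(2024, 3, 15)

deploys = [
    Event(date(2024, 3, 12), "deploys", "Released v2.4"),
    Event(date(2024, 3, 1), "deploys", "Released v2.3"),  # before the sprint
]
incidents = [Event(date(2024, 3, 6), "incidents", "Checkout outage, 40 minutes")]
tracker = [Event(date(2024, 3, 6), "issue tracker", "Epic 'Onboarding' started")]

timeline = build_timeline([deploys, incidents, tracker], sprint_start, sprint_end)
for event in timeline:
    print(f"{event.day.isoformat()}  [{event.source}] {event.summary}")
```

The point of the sketch is only that the timeline is assembled from recorded events rather than from memory. Events outside the iteration, like the earlier release in the sample data, are filtered out.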

You will likely identify hard data that you should have but cannot currently automatically gather. You can compensate for this by making a manual report or making sure you cover it when gathering personal experiences from people.

For example, many teams don't have very good insights into what kind of work they actually did during the last iteration. You can collect this information by answering these questions: "What did we ship? How much work went into the roadmap/toward our OKRs? How much went to fixing bugs?"

Another common practice used in many retrospectives is to start every session by building a manual timeline of the last iteration. This reminds people of the key events that may have contributed to the team's productivity and how everyone is feeling.

It is worth noting that the more automatic you can make your hard data sources, the better. If you rely on manual work in, for example, calculating the ratio between bugs and roadmap work, it is very likely that, at some point, your data will not be up to date.
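To show how little is needed to automate a number like this, here is a small Python sketch that computes the share of completed work per category. It assumes you can export completed issues from your issue tracker with some kind of category label. The `Issue` type, the `work_breakdown` function, the category names, and the issue keys are hypothetical. Counting issues is also a simplification, since issue count is only a rough proxy for effort.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    key: str
    category: str  # hypothetical labels: "roadmap", "bug", or "other"


def work_breakdown(issues):
    """Return each category's share of the completed issues (0.0 to 1.0)."""
    counts = Counter(issue.category for issue in issues)
    total = sum(counts.values())
    if total == 0:
        return {}  # nothing completed, so there is nothing to report
    return {category: count / total for category, count in counts.items()}


# Made-up issues completed during the last iteration.
completed = [
    Issue("APP-1", "roadmap"),
    Issue("APP-2", "roadmap"),
    Issue("APP-3", "bug"),
    Issue("APP-4", "other"),
]

breakdown = work_breakdown(completed)
for category, share in sorted(breakdown.items()):
    print(f"{category}: {share:.0%}")
```

With the sample data, roadmap work comes out at 50 percent and bugs at 25 percent. Running something like this on a schedule keeps the number current without anyone having to remember to update it.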

Relying on manual work also often leads to the team dropping these metrics when they start feeling busy. This situation is precisely the opposite of what you want to achieve. If anything, you should have a clearer picture of what is essential when you're running low on time.

To be honest, I haven't personally seen a single team continually do great data-driven retrospectives unless the data and reports were automatically available.

A real-life example of holistic hard data

To give a real-life example of a diverse combination of hard data, I will describe the hard data sources we use at Swarmia. We have four different hard data sources: objectives and key results (OKRs), weekly customer reports, sales pipeline, and Swarmia's work log.

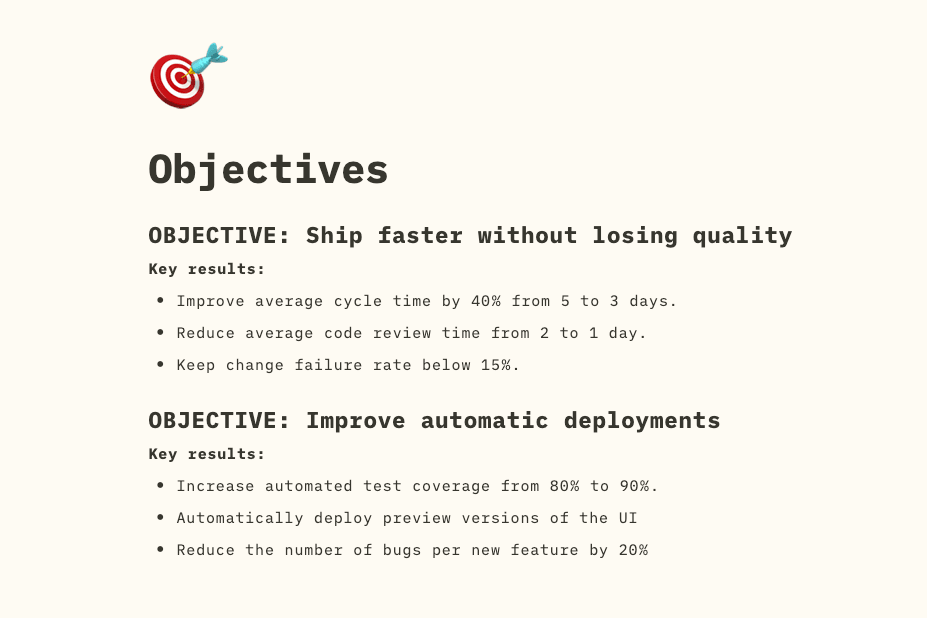

Objectives and key results (OKRs)

OKRs include the objectives that we feel are the most important goals for our business right now. Achieving these usually takes multiple months. Each objective has a few (2-6) key results to make them more actionable. We update the progress of our OKRs every Monday.

Especially in larger organizations, it can be easy to wander off from the things likely to have the most significant business impact. OKRs are an excellent data source for bringing those discussions into retrospectives.

Weekly customer report

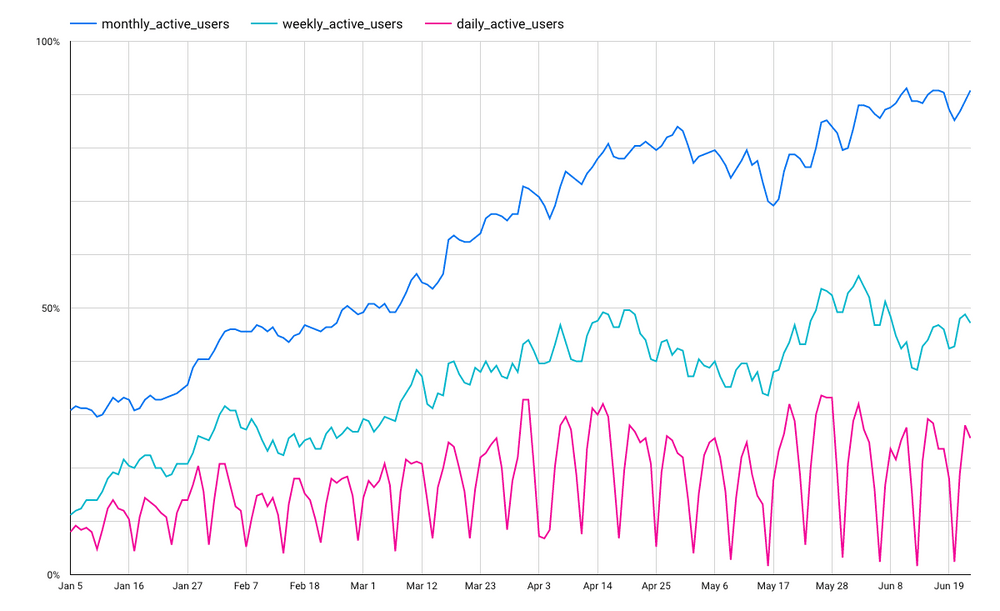

Our weekly customer report contains key performance indicators (KPIs) such as monthly recurring revenue (MRR), number of paid seats, user activity, and Slack notification adoption. It provides retrospectives with the necessary context for evaluating if our decisions have been impactful and served our users well.

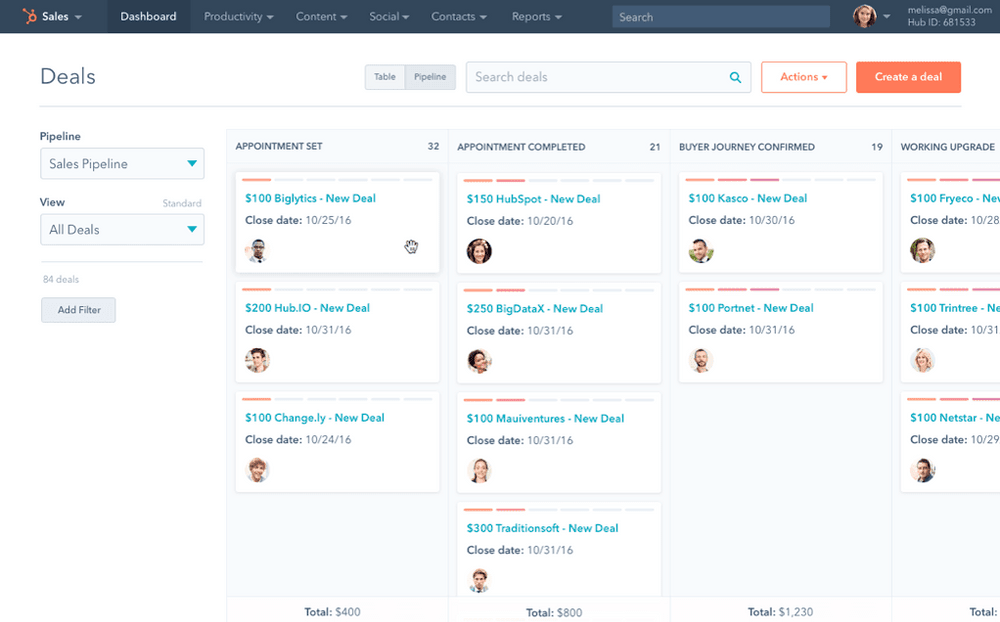

Sales pipeline

We use HubSpot to track our sales pipeline. Seeing the pipeline is helpful even for the engineers. It often provides great context for the features that may be included in the roadmap and is an excellent source of information when discussing the differences between our customers and their needs.

When your organization becomes bigger, looking at the sales pipeline becomes less valuable. Because of this, our sales team also maintains a "customer feature wishes" page that highlights the most common challenges across the deals.

Work log

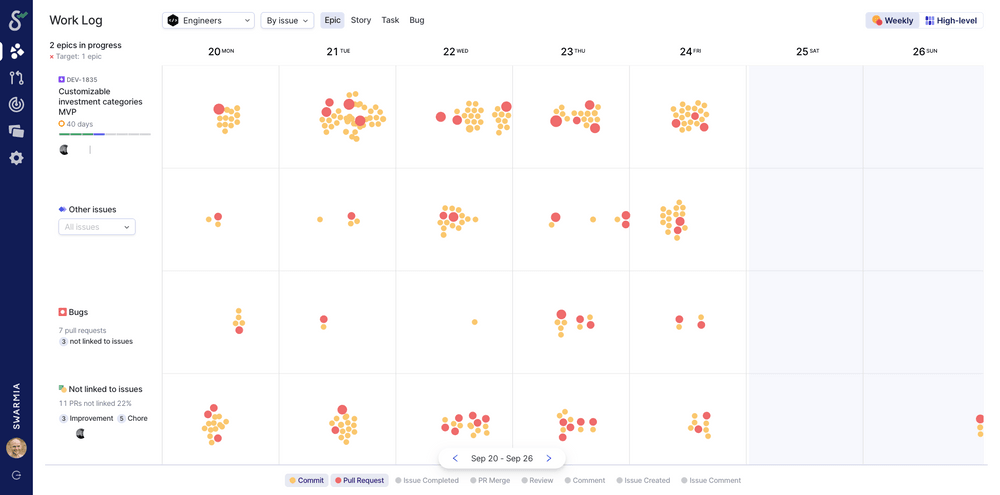

This is the part where I get to toot our own horn (toot, toot!) as we use our own product in our retrospectives. Work log is Swarmia's unique feature that visualizes what has happened within a specific week. It allows us to see at a glance how much of our activity went into the roadmap (e.g., epics or stories) and how much attention we put into individual issues, bugs, and unplanned work. It includes most of the same information as the manually built "timeline" discussed above, except it updates automatically in real time.

Conclusion

Hopefully, this blog post inspires you to include enough hard data in your retrospectives. It will allow your teams to create better insights and actions, ultimately leading to more productive teams and happier engineers.

You can still run retrospectives in which you put the hard data aside and focus on the feelings and personal experiences of the team. Having different kinds of retrospectives will benefit your team and help keep people engaged.

However, ensure you rely on a wide array of data sources when holding retrospectives on topics in which accurate insights are essential. Leaving hard data out of a retrospective should be a deliberate choice, not an accident.
