Should you build or buy a software engineering intelligence platform?

“We could just build this ourselves.”

If you’ve been in engineering leadership for any length of time, you’ve heard this phrase in countless discussions about tooling. It’s a natural response, especially when it comes to engineering intelligence tools. After all, you have a team of talented engineers who build software for a living — why not point those skills at your internal needs?

However, the reality is more complex than it first appears. Having spoken with hundreds of engineering leaders and helped organizations navigate this exact decision, I’ve discovered some clear patterns in the journey from “let’s just build it” to a more nuanced understanding of the tradeoffs involved.

I figured it was about time to walk through the typical journey organizations take when considering engineering intelligence tooling, namely:

- Situations where building in-house makes the most sense

- The obvious and perhaps more surprising challenges when building internal tools

- Why buying often costs (much) less than you think

- How to build your business case for the decision

So here goes.

Your standard build-to-buy journey

Most engineering organizations follow a similar path when thinking about engineering effectiveness tooling.

It usually starts with solving isolated corners of the problem — maybe you want to track DORA metrics, improve CI pipelines, or gather more feedback from developers.

These initial efforts mostly come from good intentions. Someone notices an issue in delivery flow, or a directive comes down from leadership to improve visibility into engineering metrics. A small group starts building a simple dashboard, perhaps using tools you’re already paying for, like a BI platform connected to GitHub or Jira data.

As the organization grows, they try stitching these corner-of-the-problem solutions together, creating a patchwork of tooling that requires an increasing amount of maintenance. Each piece might solve its narrow problem well, but they typically don’t connect to provide a comprehensive view of engineering effectiveness.

The third phase is where reality sets in. As these projects progress, companies discover data quality and maintenance issues that weren’t apparent at the start. Teams realize that engineering data is surprisingly complex. Seemingly simple questions like “which teams do we have and who’s in them?” or “what’s the relationship between this Jira project and that GitHub repository?” become mapping nightmares that constantly need updating.

Then comes the recognition of total cost. Only after significant investment do teams fully understand the development, implementation, and ongoing maintenance costs. What started as a “simple dashboard” has become a complex system requiring dedicated engineering time that could otherwise be spent on your core product.

It's worth noting an important distinction here: while many organizations can successfully build specific reports or isolated dashboards in-house, building a complete engineering intelligence platform is an entirely different challenge. Reports address specific questions at a point in time, but a platform needs to handle changing team structures, evolving codebases, historical data preservation, and much more, all while maintaining data quality and providing useful insights.

Engineering should always be viewed as an investment with clear ROI expectations, so while building internal tools might seem like a tactical win for your platform team, buying a solution allows you to approach engineering intelligence investment more strategically.

How about we just vibe code it?

There’s another option that’s become popular recently: sitting down with an AI coding assistant and vibe coding your own engineering intelligence dashboard in a weekend (or so they say).

For certain situations, this works fine.

If you primarily need to report some aggregate numbers from a point in time up the chain to leadership, a vibe coded dashboard can get the job done. You want to show cycle time trends at the monthly board meeting? Pull some GitHub data, create a few charts, present them. Mission accomplished.

This approach makes sense when your main goal is reporting rather than improvement, you have someone whose job includes compiling metrics, and leadership is satisfied with high-level trends without needing to drill into details.

Where this approach falls short

The limitations show up fast when you move from just reporting to actually trying to make engineering more effective.

When someone asks “Why did cycle time spike in Q2?” you need to see individual PRs, specific bottlenecks, the outliers that skewed everything. Your standard vibe-coded dashboard typically shows aggregates without the data structure to explore what’s behind them. You end up manually digging through GitHub, which defeats the entire purpose.
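To make that concrete, here is a minimal Python sketch using made-up data. The `PullRequest` record, its fields, and every number in it are hypothetical and not pulled from any real API. It shows how a single long-running PR can drag an average upward, and why you need the per-PR rows to explain the spike.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median


@dataclass(frozen=True)
class PullRequest:
    # Hypothetical record shape; a real export would carry more fields.
    number: int
    opened_at: datetime
    merged_at: datetime

    @property
    def cycle_time_hours(self) -> float:
        return (self.merged_at - self.opened_at).total_seconds() / 3600


def slowest(prs: list[PullRequest], n: int = 1) -> list[PullRequest]:
    """Return the n PRs with the longest cycle time, slowest first."""
    return sorted(prs, key=lambda pr: pr.cycle_time_hours, reverse=True)[:n]


# Illustrative data only: four quick PRs and one long-running migration.
prs = [
    PullRequest(101, datetime(2024, 4, 1, 9), datetime(2024, 4, 1, 19)),    # 10 h
    PullRequest(102, datetime(2024, 4, 3, 9), datetime(2024, 4, 3, 21)),    # 12 h
    PullRequest(103, datetime(2024, 4, 8, 9), datetime(2024, 4, 8, 17)),    # 8 h
    PullRequest(104, datetime(2024, 4, 10, 9), datetime(2024, 4, 10, 23)),  # 14 h
    PullRequest(105, datetime(2024, 4, 1, 9), datetime(2024, 4, 21, 9)),    # 480 h
]

hours = [pr.cycle_time_hours for pr in prs]
print(f"mean: {mean(hours):.1f} h, median: {median(hours):.1f} h")
print("slowest PR:", slowest(prs)[0].number)
```

With this sample, the mean lands at 104.8 hours while the median is 12. A dashboard that stores only the aggregate can show you the first number, but not that PR 105 is the reason for it.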

Data quality also becomes a problem as your organization evolves. That mapping between teams and repositories works great until someone creates a new repo, a team splits, or engineers move around. Suddenly your metrics are off and someone needs to manually update the configuration. Then another change happens. And another.
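As a rough illustration of that drift, the sketch below compares a hand-maintained team-to-repository mapping against the list of repositories that exist today. The function name, team names, and repo names are all invented for the example; a real setup would load both sides from configuration and from your version control system.

```python
def mapping_drift(
    team_repos: dict[str, set[str]], current_repos: set[str]
) -> dict[str, set[str]]:
    """Compare a hand-maintained mapping with the repos that exist now."""
    mapped = set().union(*team_repos.values())
    return {
        # Repos nobody has assigned to a team yet: their activity goes uncounted.
        "unmapped": current_repos - mapped,
        # Repos still in the config but no longer present (archived, renamed).
        "stale": mapped - current_repos,
    }


# Hypothetical configuration, written when the dashboard was first built.
team_repos = {
    "payments": {"billing-api", "invoices"},
    "growth": {"landing-page"},
}

# Hypothetical state a few months later: one repo archived, one created.
current_repos = {"billing-api", "landing-page", "checkout-v2"}

drift = mapping_drift(team_repos, current_repos)
print(drift)
```

Here `checkout-v2` is silently missing from every team's metrics and `invoices` points at nothing. A check like this only detects the problem; someone still has to decide which team owns the new repo, every time it happens.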

The solution still requires consistent maintenance. APIs change, requirements emerge, your org structure shifts. Unlike a proper internal build with architecture and documentation, AI-generated solutions often become black boxes that only their creator understands.

Most importantly: reporting isn’t the same as improving. Seeing that your cycle time is high doesn’t tell you where the bottlenecks are or what to do about them. Basic dashboards can highlight these problems, but can’t help you solve them.

If you’re maintaining it, you’re still building

If you’re investing the ongoing time to maintain an AI-generated solution and keep it useful for your organization, you’ve essentially committed to building internal tooling — just with a less robust foundation.

At that point, you’re not simply maintaining some code. You’re fielding questions about metrics that seem off, explaining methodology to stakeholders who don’t trust the numbers, updating configurations every time your org changes, adding features as requirements evolve, and troubleshooting data pipeline issues.

If you’re making that investment anyway, those hours might be better spent on a purpose-built solution that can actually help you improve, rather than just report.

A few situations where building actually makes sense

Despite the challenges, there are a few legitimate reasons to build in-house rather than buy engineering effectiveness tooling:

- You’ve already built a substantial part of the solution. If you’ve invested significantly and have a working system, it might make sense to continue. Though beware of the sunk cost fallacy — the fact that you’ve already invested doesn’t mean continuing is the best choice.

- You have unique scale or compliance requirements. If you’re at the scale of Amazon, Google, or Facebook, your needs may genuinely be unique enough that existing solutions won’t suffice. These companies operate at a scale where even the most sophisticated tools break down, and they have the resources to build specialized solutions.

- The tooling directly relates to your core IP or competitive advantage. If engineering intelligence is central to your business model or product, the specialized knowledge you develop while building might be worth the investment.

- You have highly unusual requirements that no commercial solution addresses. This is rare, but possible. Many companies think they have unique requirements, but when they look closely, they find their needs are actually pretty standard (note: every team using Jira in a different way is standard).

- You have excess engineering capacity and specialized expertise. This scenario is practically non-existent right now — most engineering teams are already stretched thin on delivering their core product.

If you don’t fit clearly into one of these categories, it might make sense to question whether building is truly the right approach for your business.

The hidden costs of building engineering metrics tooling

So let’s assume you do fit into one of the categories above, and you’re off on your build journey. Good stuff. There are, however, a few challenges that are likely to pop up unexpectedly (and despite your best efforts to avoid them).

Internal tool adoption is hard

Internal tools for engineering intelligence are often painfully average, in terms of usefulness.

If it was simple, easy, and universally beneficial for organizations to build engineering intelligence tools internally, companies like Swarmia wouldn’t exist. But we do, and the engineering intelligence market is growing rapidly, which should indicate something about the complexity of the problem.

Low adoption rates tend to happen because platform teams building engineering intelligence tools aren’t the end users, and they may lack the design expertise needed for good user experience. There’s also the internal cost of onboarding, which, if fumbled, can mean an otherwise useful tool or dashboard gathers dust on the shelf.

This can end with metrics and dashboards that don’t lead to meaningful change, wasted engineering effort, and a return to gut-based decision making.

Data quality aka the ever-growing iceberg

Data quality is the iceberg that sinks many DIY engineering intelligence efforts — tactical solutions miss underlying themes and connections, leading to disjointed metrics. For example, a ticket-completion dashboard disconnected from code review metrics might show impressive velocity while hiding critical bottlenecks in the review process.

Data mapping can be a challenge for internal tools too. Team structures change, Jira projects come and go, and repositories get created and archived. Without a dedicated system for maintaining these relationships, your metrics become unreliable at best, and at worst, so obviously wrong that people actively distrust and dismiss them.

Historical data tracking is another challenge. Questions like “who was in this team six months ago?” become nearly impossible to answer reliably without purpose-built systems, creating blind spots when trying to understand how your teams’ performance has evolved over time.
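One common way to keep that question answerable is to store memberships with start and end dates rather than overwriting the current roster. The sketch below is a minimal version of that idea under my own assumptions: the `Membership` record, the `members_on` helper, and the people and dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Membership:
    person: str
    team: str
    start: date                 # first day on the team
    end: Optional[date] = None  # first day no longer on the team; None = current


def members_on(memberships: list[Membership], team: str, day: date) -> set[str]:
    """Return everyone who belonged to `team` on the given day."""
    return {
        m.person
        for m in memberships
        if m.team == team and m.start <= day and (m.end is None or day < m.end)
    }


# Illustrative history: Alice moves teams in March, Carol joins in May.
history = [
    Membership("alice", "platform", date(2023, 1, 1), date(2024, 3, 1)),
    Membership("alice", "payments", date(2024, 3, 1)),
    Membership("bob", "platform", date(2023, 6, 1)),
    Membership("carol", "platform", date(2024, 5, 1)),
]

print(members_on(history, "platform", date(2024, 1, 15)))
print(members_on(history, "platform", date(2024, 6, 15)))
```

The January query returns Alice and Bob, and the June query returns Bob and Carol. The lookup itself is simple. The hard part is that every team change has to be recorded as a dated row, which is exactly the upkeep that tends to get skipped in a homegrown tool.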

Your perpetual maintenance burden

When building and maintaining internal tooling, most time ends up spent explaining and defending metrics rather than improving them. When someone questions why a number looks off, you need to be able to prove the data is correct. Without this ability, trust erodes quickly.

Supporting API changes from GitHub, HR systems, Jira, and other tools requires constant attention. Every time one of these services updates their API, someone needs to update your internal tools as well. This maintenance is rarely accounted for in the initial build assessment.

There’s also the risk of knowledge loss when key team members leave. I’ve seen multiple organizations where just a single engineer understood how their metrics systems worked. When that person left, the entire system became a black box that no one was game enough to touch.

Finally, there’s the opportunity cost of dedicating engineering resources to non-core business functions. Every hour spent maintaining internal metrics tooling is an hour not spent building your actual product.

Why buying (Swarmia) costs much, much less

Data quality you can trust

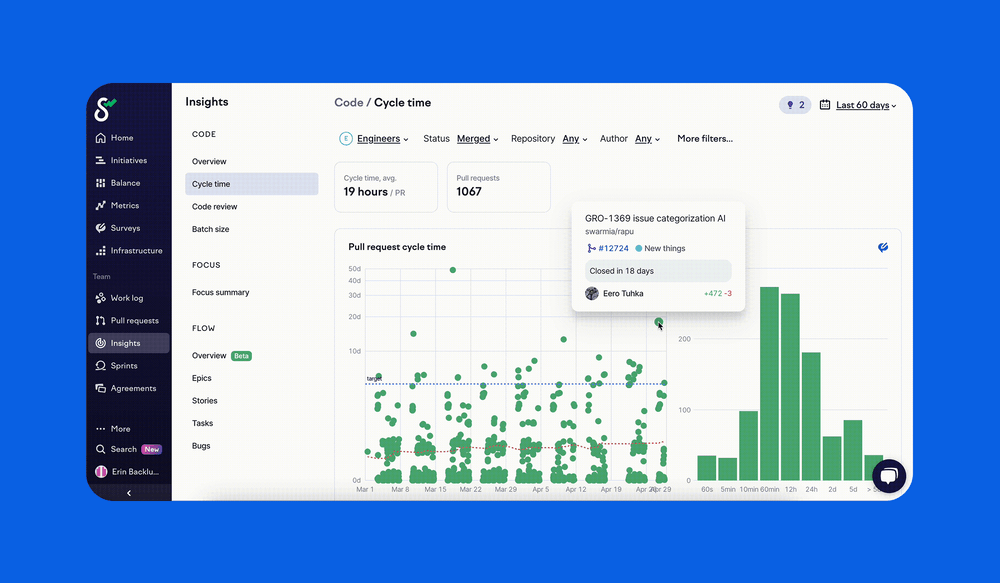

- In Swarmia, metrics are explorable to a granular level: you can see the individual PRs and activity behind any number. When someone asks, “Why does this metric seem off?” you can immediately drill down to the exact pull requests and activities that contribute to it. This transparency is crucial for building trustworthy engineering intelligence data and makes troubleshooting straightforward.

- You have the ability to exclude outliers from your metrics. Every organization has those once-in-a-blue-moon massive refactorings or migrations that can completely skew your metrics. With Swarmia, you can easily exclude these statistical anomalies to maintain accurate tracking.

- Automatic mapping of configuration data ensures your metrics stay accurate without manual intervention. As teams change, repositories get created, and Jira projects evolve, the system automatically maintains the correct relationships without someone having to manually update configuration files or database entries.

Thoughtful integration

- Swarmia works with your existing workflows right out of the box. We integrate with GitHub, Jira, Linear, Slack, and many other tools your teams already use. You don’t need to build and maintain these integrations yourself.

- For organizations that want to combine engineering intelligence data with other business reporting, we’ve got Swarmia Data Cloud, which gives you the best of both worlds: specialized tooling for engineering intelligence with the ability to incorporate that data into company-wide analytics platforms.

- Your team doesn’t have to respond to API updates or changes in third-party systems. When GitHub changes its API, that’s our problem to solve, not yours. The same applies to changes in Jira, Linear, or any other integrated tool. This alone eliminates hundreds of hours of maintenance work annually.

Proven adoption, from leadership to engineers

- High engagement from development teams (up to 90%) means Swarmia’s insights actually create improvement for the teams doing the engineering work. Internal tools are often built for the management level only (or management-first), so the value they provide to engineers is limited.

- Designed for actual developer workflows – Swarmia is built with a deep understanding of how developers actually work. This contextual approach leads to much higher engagement than products that require engineers or even engineering managers to interrupt their flow of work to check metrics or make decisions. Our Slack notifications are developers’ favorite Swarmia feature for a reason!

- For leadership, Swarmia enables use cases you didn’t even foresee. Finance team asks you to track hours for cost capitalization reporting? Just pull the data from Swarmia, with no extra work required from the engineers.

Dedicated customer success included, always

- Flexible rollout options that let you decide whether to implement Swarmia across your entire organization at once or take a more targeted approach — either starting with leadership and cascading down, or focusing on specific teams first. This flexibility reduces disruption and allows you to tailor the onboarding process to your organization's culture and readiness.

- We'll help you plan and execute your rollout, not just hand you a tool and wish you luck. Our service model includes implementation planning and strategic guidance — without any extra costs. Engineering effectiveness can be complex, but with Swarmia, you’re never on your own.

Building your own business case

The most important step now is to evaluate your specific situation. Does your company fit into one of the scenarios where building makes sense? Or would a purpose-built solution provide faster, more reliable results with less risk?

To guide your decision and start to build your business case for leadership, gather your team to answer these key questions:

- Core focus: Does building engineering intelligence tooling directly contribute to our product's competitive advantage?

- Resource reality: Can we commit dedicated engineering resources not just for initial development, but for ongoing maintenance and improvements?

- Timeline pressure: How quickly do we need insights to make decisions? Can we wait 6-12 months for a homegrown solution?

- Data expertise: Do we have internal expertise in engineering metrics that correlates with business outcomes?

- Adoption strategy: How will we ensure teams actually use the tools we build? Who will champion adoption?

- Continuity plan: What happens if the engineers who build our internal tool leave the company?

Honest answers to these questions will point you in the right direction, and also help you start your ROI calculations — but that’s a whole other article.

Addressing some common objections

If you’ve made it this far, you’ll likely encounter some more practical concerns about buying a tool, and for the purposes of this article, I’ll answer them from a Swarmia point of view:

- “Is it too much work to implement?” This is a valid concern, but most of our customers are up and running with initial insights in days, not the months or years that internal tools require. Plus, we’re the only major player in the engineering intelligence space with a fully self-serve option.

- “Does it integrate with everything we use?” Chances are, we’ve got you covered – and if not, we probably will soon. We support the most popular version control, issue tracking, and team communication tools used by modern engineering organizations – and we’re always open to feedback on what integrations we should build next.

- “Can we get the data out?” Absolutely. Swarmia Data Cloud lets you export all data to your existing data warehouse. This gives you the best of both worlds: specialized tooling for engineering intelligence with the flexibility to incorporate that data into your broader business analytics.

- “Is it secure?” We’re SOC 2 Type II certified and GDPR compliant. We’ve been through countless security reviews by some of the most security-conscious organizations in the world, and we’ve never failed one.

- “Will it be around long-term?” We've grown sustainably since our inception, and work with hundreds of leading companies who rely on our platform. We’re in this for the long haul, and our business model is built around long-term partnerships with our customers.

The build vs. buy decision – what will yours be?

This all might seem simple on the surface — just pull some data from GitHub and Jira and display it on a dashboard and you’re on your way. Right?

As I hope we’ve covered here: not really.

Data quality, user adoption, platform maintenance, and careful metric selection create a challenge that’s far more difficult than most companies have the resources for.

When you buy a specialized solution instead of building one, you’re not only saving development time, but also drawing on the collective learning of hundreds of other organizations facing similar challenges. You’re benefiting from ongoing research into what metrics actually drive improvement. You’re freeing your team to focus on what makes your business unique.

I will say this: buying a tool like Swarmia won’t magically solve all your engineering effectiveness challenges.

What it does do is give you the visibility, confidence in your data, and insights you need to make engineering intelligence an active process, not just a metrics tracking and reporting exercise.

In the end, the build vs. buy question isn’t whether you could build engineering intelligence tooling — but whether your engineering resources are better spent delivering customer value rather than reinventing wheels that already exist and roll quite well.

And I think the answer, for the most part, is clear.
