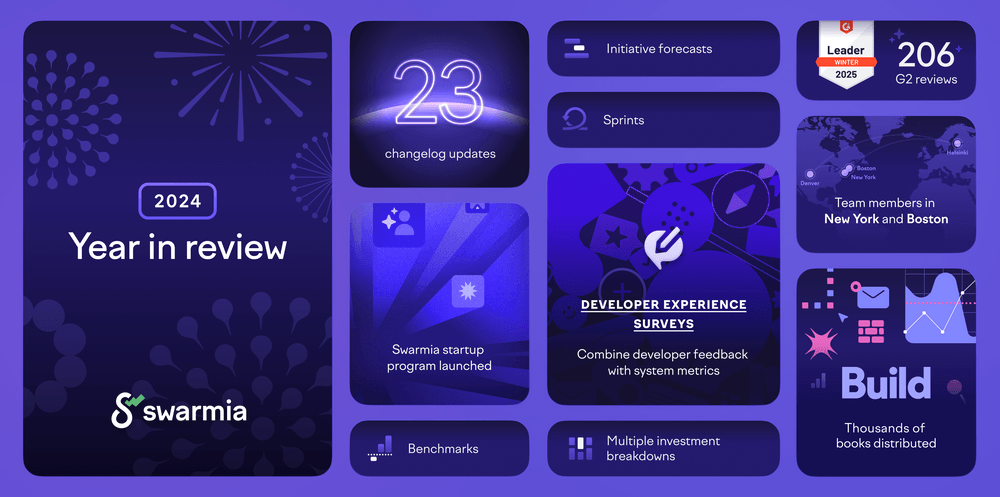

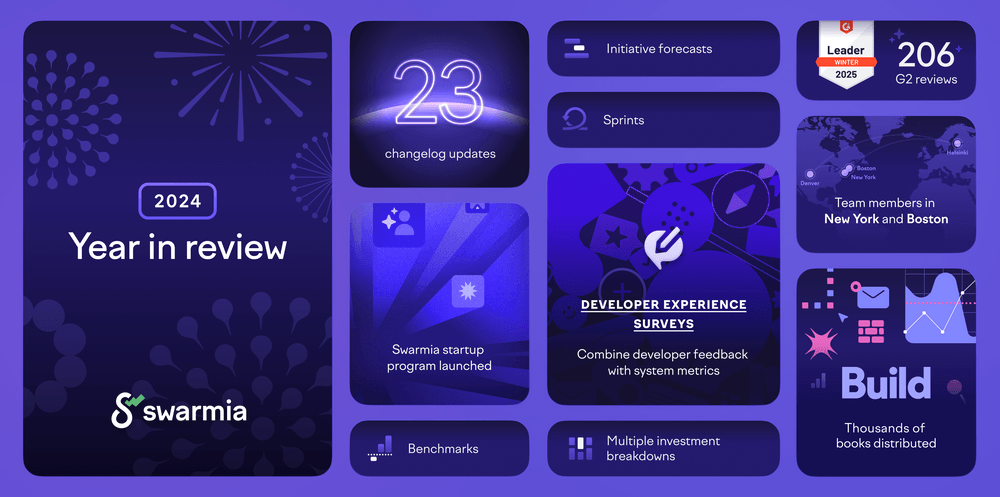

Comparing popular developer productivity frameworks: DORA, SPACE, and DX Core 4

Bright-eyed and full of optimism, your team has implemented a developer productivity framework. It’s exciting: you’re eager for results, and you’ve got a good feeling about it because it just makes sense. Or perhaps you heard on a podcast that another company similar to yours used it successfully.

A few months later, you find yourself staring at a dashboard, wondering why nothing has actually improved.

“What are we missing?” becomes the inevitable question. It’s a question many leaders ask when their chosen engineering metric or developer productivity framework isn’t solving their real problems.

While DORA metrics, the SPACE framework, and DX Core 4 are valuable starting points, they often identify problems without providing actionable solutions.

Why we gravitate towards frameworks

As engineering leaders, we reach for frameworks because they provide comfort and structure in an otherwise complex domain. Just like models, frameworks are always wrong, but sometimes useful. Engineering effectiveness and developer productivity have many facets, and frameworks give us conceptual handles to grasp.

These frameworks typically have research backing them, which builds confidence that we’re not just making things up. They also provide a shared vocabulary, giving teams precise terms to discuss abstract concepts like productivity and effectiveness.

The core problem, however, is that frameworks are often mistaken for roadmaps to solutions. In reality, they’re mental models that help us think about certain aspects of effectiveness. And when a framework comes with impressive research credentials, it’s tempting to believe it addresses everything that matters.

DORA metrics

DORA is a long-running research program that applies behavioral science methodology to understand the capabilities driving software delivery and operations performance.

It’s the longest-running academically rigorous research investigation of its kind, and has uncovered predictive pathways that connect working methods to software delivery performance, organizational goals, and individual well-being.

The program began at Puppet Labs in 2011, and DORA formed as a startup in 2015, led by Dr. Nicole Forsgren, Jez Humble, and Gene Kim. Their goal was to understand what makes teams successful at delivering high-quality software quickly. Google acquired the startup in 2018, and it continues to be the largest research program in the space. Each year, they survey tens of thousands of professionals, gathering data on key drivers of engineering delivery and performance.

The DORA Core Model

DORA Core is a collection of capabilities, metrics, and outcomes that represent the most firmly established findings from across the history and breadth of DORA’s research program. The model is derived from DORA’s ongoing research, including analyses presented in their annual Accelerate State of DevOps Reports. It serves as a guide for practitioners and deliberately evolves more conservatively than the cutting-edge research.

Beyond the four key metrics

While the four key metrics (Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Restore Service) are the best-known aspect of DORA’s work, the program’s findings extend far beyond these measurements. DORA’s research explores the capabilities that contribute to high performance in software delivery, namely its technical, process, and cultural dimensions.
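
To make the four key metrics concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record, its field names, and the choice to measure restore time from the moment of deployment are assumptions made for illustration, not DORA's official definitions or any particular tool's data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # did the change cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def four_key_metrics(
    deployments: list[Deployment], period_days: int
) -> dict[str, Optional[float]]:
    """Summarize the four key metrics for one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    lead_times = [hours(d.deployed_at - d.committed_at) for d in deployments]
    failures = [d for d in deployments if d.failed]
    # Assumption: restore time runs from the failing deployment to recovery.
    restore_times = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # None when nothing failed (or nothing has been restored yet).
        "mean_time_to_restore_hours": (
            sum(restore_times) / len(restore_times) if restore_times else None
        ),
    }
```

Even a small calculation like this involves judgment calls (what counts as a failure, where lead time starts), which is one reason the numbers are only meaningful alongside the capabilities behind them.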

1. Technical capabilities

Technical capabilities include continuous delivery, which relies on several other core DORA competencies. Their research shows that continuous delivery improves software delivery performance and system availability, reduces burnout and disruptive deployments, and improves psychological safety for teams.

Regarding change approvals, DORA’s 2019 research found that they’re best implemented through peer review during the development process and supplemented by automation to detect bad changes early in the software delivery lifecycle. Heavyweight approaches to change approval slow down the delivery process and encourage less frequent releases of larger work batches, which increases change failure risk.

DORA analysis has also found a clear link between documentation quality and organizational performance — their research shows documentation quality driving the implementation of every technical practice they studied.

2. Cultural capabilities

DORA research demonstrates that a high-trust, generative organizational culture predicts software delivery and organizational performance in technology. This finding is based on work by sociologist Dr. Ron Westrum, who noted that such culture influences how information flows through an organization.

A key insight from DORA is that “changing the way people work changes culture.” This echoes John Shook’s observation that “The way to change culture is not to first change how people think, but instead to start by changing how people behave — what they do.”

The research also shows that an organizational climate for learning is a significant predictor of software delivery performance and organizational performance. The climate for learning is directly related to the extent to which an organization treats learning as strategic — viewing it as an investment necessary for growth rather than a burden.

3. Organizational capabilities

DORA research indicates that high-trust and low-blame cultures tend to have higher organizational performance. Similarly, organizations whose teams feel supported through funding and leadership sponsorship tend to perform better. Team stability, positive perceptions of one’s own team, and flexible work arrangements also correlate with higher levels of organizational performance.

Additional organizational capabilities highlighted by DORA include empowered teams that can innovate faster by trying new ideas without external approval, effective leadership that drives adoption of technical and product management capabilities, and focus on employee happiness and work environment to improve performance and retain talent.

DORA has long recognized that many of these effects depend on a team’s broader context. A technical capability in one context could empower a team, but in another context, could have negative effects. For example, software delivery performance’s effect on organizational performance depends on operational performance (reliability) — high software delivery performance is only beneficial to organizational performance when operational performance is also high.

How do organizations apply DORA research in practice?

There are plenty of ways to implement DORA metrics in your organization. At the most basic level, DORA offers tools like a quick check assessment to help organizations discover how they compare to industry peers, identify specific capabilities they can use to improve performance, and make progress on their software delivery goals.

The DORA Community also provides opportunities to learn, discuss, and collaborate on software delivery and operational performance, enabling a culture of continuous improvement.

Some organizations will take a more holistic approach and use a platform to measure DORA metrics as a part of improving their engineering effectiveness as a whole.

The DORA framework is solidly evidence-based and — despite the popularity of four common metrics — the associated research does go beyond simple metrics to examine technical, process, and cultural capabilities, making its observations meaningful and actionable. Still, there are some challenges:

- Overwhelming complexity: The full model can be too much to digest if you're just starting your improvement journey.

- Not one-size-fits-all: Many capabilities won't apply equally well across different organizational contexts.

- Heavy lifting required: Changing culture and practices demands significant effort and commitment.

- Metrics tunnel vision: Teams often focus too much on improving the numbers themselves rather than the underlying capabilities.

- Measurement difficulties: Some capabilities, especially cultural ones, can be difficult to measure objectively.

Now that we’ve covered DORA, let’s take a look at the next of the frameworks: SPACE.

The SPACE framework

The SPACE framework takes a broader view of productivity through five dimensions: Satisfaction, Performance, Activity, Communication & collaboration, and Efficiency & flow. What makes SPACE valuable is its clear acknowledgment of the human aspects of software development and the multidimensional nature of productivity — that there really is no one measure of productivity.

Another plus for SPACE is that it suggests selecting separate metrics for each organizational level for a more holistic picture of what's happening in an organization:

- Individual level

- Team or group level: People who work together.

- System level: End-to-end work through a system, e.g., the development pipeline of your organization from design to production.
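
One way to picture the dimensions and levels together is as a small metric plan that can be checked for gaps. The sketch below is only an illustration: the metric names, the shortened dimension labels, and the `coverage_gaps` helper are hypothetical choices, not anything SPACE prescribes.

```python
# The five SPACE dimensions and three levels, as short labels (our own naming).
DIMENSIONS = ("satisfaction", "performance", "activity", "communication", "efficiency")
LEVELS = ("individual", "team", "system")

# Hypothetical plan: (dimension, level, metric name).
METRIC_PLAN = [
    ("satisfaction", "individual", "developer satisfaction survey score"),
    ("performance", "system", "change failure rate"),
    ("activity", "team", "pull requests merged per week"),
    ("efficiency", "system", "lead time for changes"),
]


def coverage_gaps(plan):
    """Return (dimensions, levels) that have no metric in the plan."""
    for dimension, level, _metric in plan:
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        if level not in LEVELS:
            raise ValueError(f"unknown level: {level}")

    used_dimensions = {dimension for dimension, _, _ in plan}
    used_levels = {level for _, level, _ in plan}
    missing_dimensions = [d for d in DIMENSIONS if d not in used_dimensions]
    missing_levels = [l for l in LEVELS if l not in used_levels]
    return missing_dimensions, missing_levels


missing_dimensions, missing_levels = coverage_gaps(METRIC_PLAN)
print("Dimensions without a metric:", missing_dimensions)
print("Levels without a metric:", missing_levels)
```

A check like this only shows where a plan is thin; it says nothing about whether the chosen metrics are the right ones for a given organization.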

For all its conceptual elegance, though, SPACE is still just a mental model, one that leaves the hard work of figuring out what to measure and how to measure it entirely to us.

And at the end of the day, trying to implement it in any meaningful way is often fraught with issues:

- Lacks measurement specifics: SPACE identifies what dimensions matter but provides almost no guidance on what specific metrics to track within each dimension, making implementation highly subjective.

- Too theoretical: The framework remains largely conceptual with limited practical implementation guidance, making it difficult to operationalize in real-world settings.

- Implementation complexity: The breadth of the framework makes it challenging to implement comprehensively, often resulting in partial adoption that misses important interconnections.

- Difficult benchmarking: With such broad dimensions and no specific metrics, you may struggle to benchmark your organization’s performance against meaningful standards.

- Unclear prioritization: SPACE offers no guidance on which dimensions should be prioritized in different organizational contexts or stages of maturity.

- Integration challenges: The framework doesn’t clearly explain how the five dimensions interact with and influence each other, making it difficult to understand cause-and-effect relationships.

DX Core 4

Core 4 aims to find middle ground by measuring Speed, Effectiveness, Quality, and Impact. It’s more prescriptive than SPACE, with specific metrics bridging technical and business perspectives, making it simpler to implement.

This framework stands out from the other two for its small, specific set of recommended metrics, and some companies are satisfied with it.

However, despite the “DX” name, Core 4 focuses primarily on outcomes rather than the lived experience of development. In practice, it’s often positioned to leadership more as “your developers will tell you how to fix things” than “take better care of your developers.”

Core 4 offers practical metrics but lacks the depth to understand the full picture of engineering effectiveness. It attempts to quantify experience and productivity but doesn’t fully embrace the complexity of either. As Will Larson explained, it also lacks a “theory of improvement” — a reasoned articulation of why following the framework will produce better results for the business.

If you’re considering implementing DX Core 4 in your organization, it’s important to be aware of a few things:

- Potentially harmful metrics: Some metrics recommended by the framework, like PRs per developer, can encourage harmful behaviors if used for individual performance evaluation. That said, PRs per developer can be a useful lagging metric in a sufficiently large organization.

- Limited validation: The research behind the framework isn’t publicly available, making it more difficult to validate.

- Organizational blind spots: The framework provides limited insight into how organizational structures and processes impact the metrics being measured.

- Incentive misalignment: Some metrics may inadvertently create incentives that conflict with long-term organizational health (e.g., optimizing for PR speed or volume at the expense of quality).

- Proprietary methods: The Core 4 framework was developed to support a software engineering intelligence product; as such, the framework is unlikely to address issues not supported by the product.

- Pointless metrics: Revenue per engineer (RPE) is perhaps the most disappointing metric to see included. John Cutler systematically broke down how RPE fails across all eight criteria for a good metric: actionability, timeliness, context, alignment, clarity, resistance to gaming, behavior influence, and comparability.
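
To illustrate the first caution in the list above, here is a minimal sketch of reporting PRs per developer only as a group-level aggregate, never as an individual score. The `org_pr_throughput` helper and the minimum group size of 5 are hypothetical; what counts as "sufficiently large" is a judgment call for each organization.

```python
from statistics import median
from typing import Optional

# Hypothetical threshold below which the aggregate is not reported at all,
# because a small group makes individuals easy to single out.
MIN_GROUP_SIZE = 5


def org_pr_throughput(
    merged_prs_by_developer: dict[str, int],
    min_group_size: int = MIN_GROUP_SIZE,
) -> Optional[float]:
    """Return the median merged PRs per developer for a group.

    Only the aggregate is returned; per-developer counts never leave this
    function. Returns None when the group is too small to report safely.
    """
    if len(merged_prs_by_developer) < min_group_size:
        return None
    return median(merged_prs_by_developer.values())
```

Using the median rather than the mean keeps one unusually prolific (or quiet) developer from skewing the figure, which suits a metric meant to show slow organizational trends.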

What’s missing: connecting outcomes, productivity, and experience

Beyond the specific limitations of each framework, several challenges apply to all of today’s developer productivity frameworks:

- The “now what?” problem: Frameworks are great at identifying issues but provide limited guidance on specific actions to improve in your unique context.

- Static measurement: Frameworks typically offer point-in-time measurements without addressing how metrics should evolve throughout an organization’s maturity journey.

- Limited change management guidance: They lack direction on how to implement and drive adoption of new practices or processes to improve metrics.

- Context blindness: Most frameworks suggest universal metrics without accounting for differences in organization size, industry, product type, or team structure.

- Overlooking organizational forces: Team structures, reporting lines, incentive systems, and communication patterns profoundly impact effectiveness but receive limited attention.

- Business outcome disconnect: Few frameworks directly connect engineering metrics to business results, making it difficult to demonstrate ROI on engineering investments.

The disconnect between diagnostics and action creates a familiar cycle of frustration. Engineers grow skeptical of measurement initiatives that consume time without driving change, leaders wonder why impressive dashboards haven’t translated to improved outcomes, and the initial enthusiasm for data-informed improvement fades as that “now what?” question remains unanswered.

The seeds for all of these frameworks were planted at a time when funding flowed more freely and headcount was plentiful. Today's reality is different.

Modern frameworks need to directly address the ROI we're getting from our engineering investment, and fully capture the heart of what makes engineering organizations effective: the interplay between business outcomes, developer productivity, and developer experience.
