You don’t need a Git analytics tool

I have a confession to make: I used to be a customer of an early Git analytics vendor. But I’d be lying if I told you that there were no warning signs. In fact, before I bought the tool, their sales rep told me not to show it to our developers because they “might freak out.”

My experimentation with these products stemmed from a real use case. Scaling an engineering organization is hard, and I wanted to give my teams all the support they needed. Just like I wanted to invest in our production infrastructure, I also wanted to invest in developer experience and productivity.

But I quickly realized that Git analytics was not the way to go. Here’s why — and what you should be doing instead.


The problem with Git metrics

As developers, we’re often tempted to visualize and observe any data we can find. In software development, the version control system seems like a natural place to start.

Most of these Git metrics seem to arise from “what can we measure from source code?” rather than “how can the team improve?” Some of the metrics are nonsensical. Some of them are commonly misused. And some of them are straight-up bad for business. Here are a few of them:

Code churn

Code churn is defined as code that the developer touches again shortly after writing it.

One of the biggest problems with scaling an engineering team is that people are often working on a codebase they’re not very familiar with, and it’s easy to get stuck. A common solution for getting unstuck is to write a simple version of the change (with some hard-coded values, etc.) and then refactor it to accommodate the surroundings.

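A churn metric flags that second pass as a problem, even though it’s a perfectly healthy way to work. There’s nothing in the rule book that says developers would be better off without editing their code.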
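To make this concrete, here is a minimal sketch of how a churn number could be computed. The 14-day window, the `(author, line_id, edited_on)` record shape, and the function name are all assumptions for illustration, not how any particular vendor calculates it.

```python
from datetime import date, timedelta

# Assumed window: an edit counts as churn if the same author touched
# the same line within this many days. Vendors may use other values.
CHURN_WINDOW = timedelta(days=14)


def churn_ratio(line_edits, window=CHURN_WINDOW):
    """Share of edits that rewrite a line the same author touched recently.

    Each edit is a hypothetical (author, line_id, edited_on) tuple.
    """
    last_touched = {}
    churned = 0
    for author, line_id, edited_on in sorted(line_edits, key=lambda e: e[2]):
        key = (author, line_id)
        previous = last_touched.get(key)
        if previous is not None and edited_on - previous <= window:
            churned += 1
        last_touched[key] = edited_on
    return churned / len(line_edits) if line_edits else 0.0


# A developer writes a simple version first, then refactors two lines.
spike_then_refactor = [
    ("dev-a", "billing.py:10", date(2024, 1, 1)),
    ("dev-a", "billing.py:11", date(2024, 1, 1)),
    ("dev-a", "billing.py:12", date(2024, 1, 1)),
    ("dev-a", "billing.py:13", date(2024, 1, 1)),
    ("dev-a", "billing.py:10", date(2024, 1, 3)),
    ("dev-a", "billing.py:11", date(2024, 1, 3)),
]

# Another developer lands the same four lines in one go.
one_shot = [
    ("dev-b", "billing.py:10", date(2024, 1, 1)),
    ("dev-b", "billing.py:11", date(2024, 1, 1)),
    ("dev-b", "billing.py:12", date(2024, 1, 1)),
    ("dev-b", "billing.py:13", date(2024, 1, 1)),
]
```

Under these assumptions, the first developer ends up with a third of their edits counted as churn and the second with none, even though both may have shipped the same working change.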

Commits per day per developer

Given that software engineers are expected to commit code, commits per day per developer might seem like a tempting thing to measure.

If a developer is not committing anything, that might indicate that they’re working on something else — or that they are, indeed, stuck.

This seemingly innocent measure becomes problematic when it’s used to compare developers. People have different preferences for breaking their work down into commits. And there’s no logical reason why eight commits would be better than four.

The problem is, we’re all drawn to simple solutions. Any report that shows a list of developers and the number of commits they’ve written is going to be interpreted as an opportunity to stack rank developers without any additional context.

New work vs. Rework vs. Legacy refactor

Some Git analytics products split your work into a few different buckets based on the Git diff — it could be completely new code, something that got moved around, or a more established (read: legacy) area of the codebase.

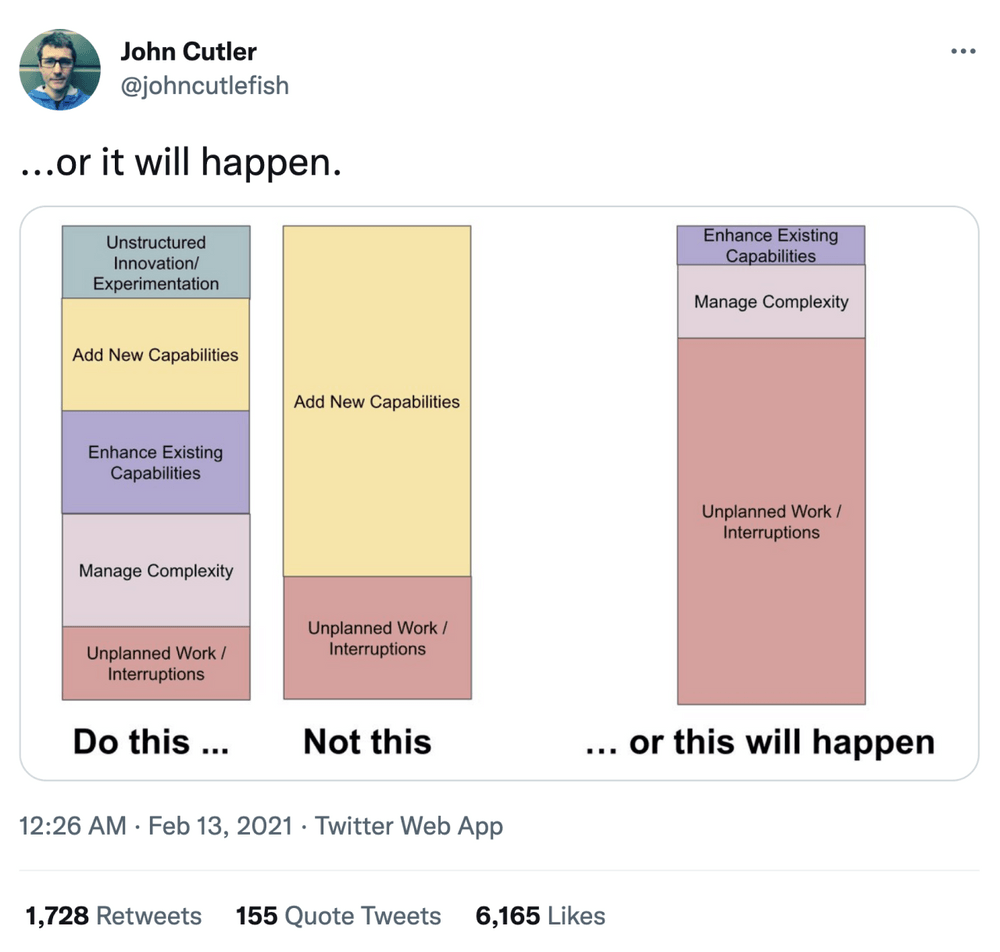

The intention is pure: software development is ultimately a balancing act, and finding a sustainable pace is really important.

Unfortunately, though, you can’t deduce the type of work from a Git diff. Small refactorings should be a normal occurrence in any development work, and bigger investments in the technical architecture are likely to look more like new feature development.

“Impact”

Code volume is sometimes branded as “impact.” The idea is that since everyone knows measuring lines of code is a bad idea, we’ll measure lines of code in a slightly smarter way — taking into account things like function entry points and control structures. While it may scale the numbers a bit differently, it really doesn’t make it a better measure.

Impact is one of the most important things for software development organizations to measure, but it can’t be measured with Git analytics. Instead, I’d suggest focusing on measuring impact through business outcomes — more on that below.

Git analytics tools focus on the wrong problem

Just because something can be measured doesn’t automatically mean that it should be measured. We could technically count how many words a sales rep writes per week, but I doubt that data would be helpful in any way.

A bunch of newer tools have been copying the same metrics from the Git analytics pioneers without really thinking about what people should do with them, or whether they’ll help teams improve at all.

It would be great if there were one number that told you how well each developer is doing. But there isn’t. And pursuing that number will lead you in the wrong direction because it erodes the psychological safety that’s required for the team to objectively look at their situation.

Traditionally, these issues meant that most teams chose to ignore productivity measures entirely. Luckily, there’s some hope in the form of new research and non-toxic metrics.

What comes after Git analytics

The best software development organizations are great at one thing: getting a little bit better every day. They’re aware of the latest research and tools, and apply that knowledge to design a solution that works in their situation. They get the whole team on board, rather than treating improvement as a management project.

Newer research, such as the State of DevOps reports and the book Accelerate, introduced the four DORA metrics: deployment frequency, lead time for changes, change failure rate, and time to restore service. It was one of the first attempts to show a connection between software development measures and business performance. Some of the same researchers have since proposed the SPACE framework for a more holistic view.

I’ve written a lengthier post about measuring software development productivity, but here are a few practical examples for replacing Git analytics.

Outcomes

Ultimately, the most important role of product development is to move the needle on some pre-defined business outcomes. This is typically owned by Product Management and implemented with Objectives and Key Results (OKRs) or a similar framework. Marty Cagan has written two excellent books on the topic, Inspired and Empowered.

When considering priorities, outcomes always trump outputs. That said, it’s still a good idea to maximize your chances of success by making sure that your team is shipping continuously and working in healthy ways.

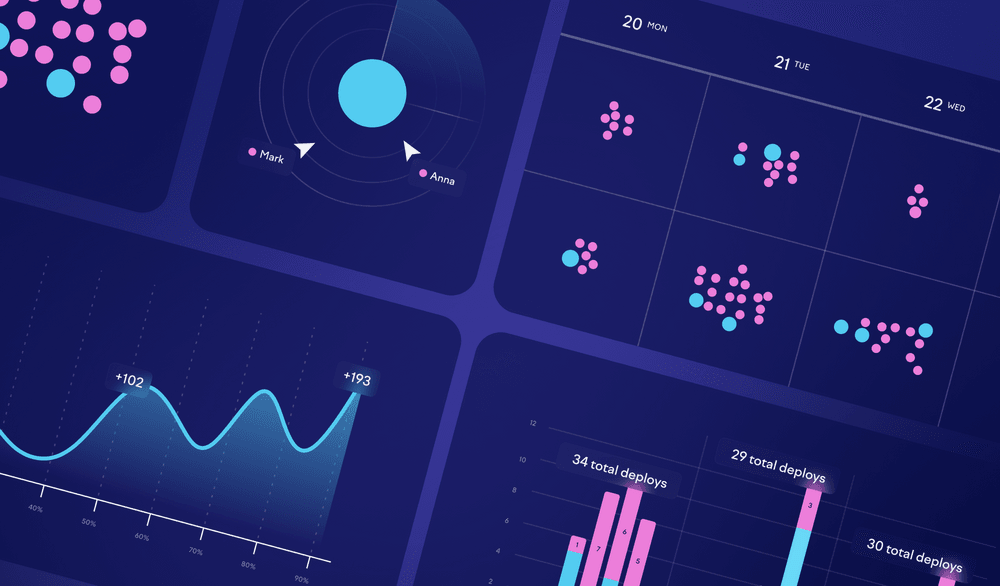

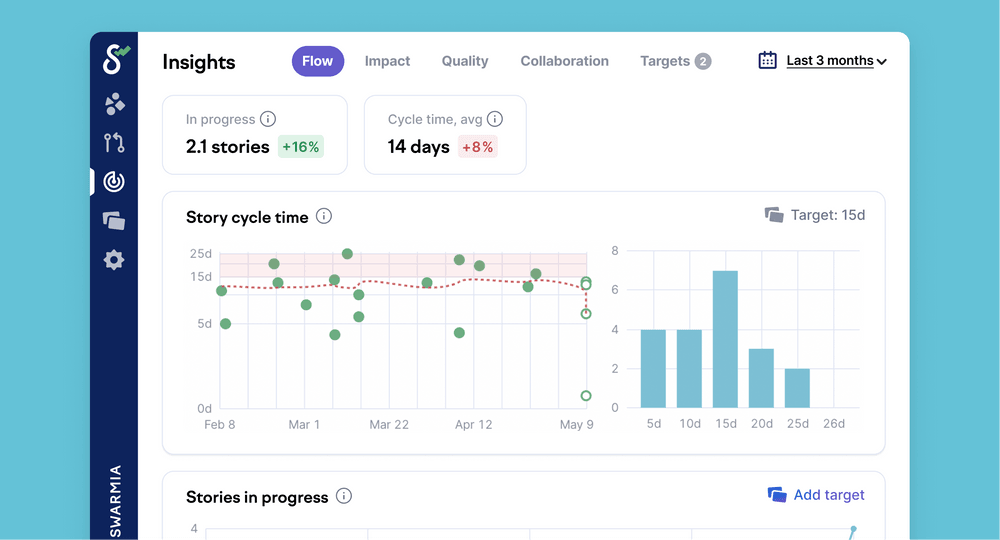

Flow metrics — cycle time, WIP, etc.

Cycle time, or “lead time for changes,” tells you how long it takes to get your code to production. Because it describes the process rather than individual output, it’s a great tool for understanding how your development process is running. Things like slow code reviews, manual testing rounds, and infrequent deployments will hurt your cycle time.

Rather than looking at an aggregate number, you’ll want to make sure that you’re able to drill into the root causes and the individual issues and pull requests. When the team gets to see this data for the first time, they’ll usually realize things like “we don’t have an owner for this microservice” or “we need to be more explicit in how we do code reviews.”
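As a rough illustration of drilling down from the aggregate, the sketch below computes cycle time per pull request and splits it into stages. The `PullRequest` fields, the three stage names, and the sample data are hypothetical. Real tooling will expose different timestamps, so treat this as one possible shape rather than a reference implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    # Hypothetical fields; map them to whatever your tooling exports.
    title: str
    first_commit_at: datetime
    review_requested_at: datetime
    merged_at: datetime
    deployed_at: datetime


def stage_durations(pr: PullRequest) -> dict[str, timedelta]:
    """Split one pull request's cycle time into assumed stages."""
    return {
        "coding": pr.review_requested_at - pr.first_commit_at,
        "review": pr.merged_at - pr.review_requested_at,
        "deploy": pr.deployed_at - pr.merged_at,
    }


def cycle_time(pr: PullRequest) -> timedelta:
    """Time from first commit to production."""
    return pr.deployed_at - pr.first_commit_at


def slowest_stage(pr: PullRequest) -> str:
    """Name of the stage where this pull request spent the most time."""
    durations = stage_durations(pr)
    return max(durations, key=durations.get)


def median_cycle_time(prs: list[PullRequest]) -> timedelta:
    """The aggregate number; useful only alongside the per-PR view."""
    return median(cycle_time(pr) for pr in prs)


example_prs = [
    PullRequest("Add invoice export", datetime(2024, 1, 1, 9),
                datetime(2024, 1, 1, 15), datetime(2024, 1, 3, 15),
                datetime(2024, 1, 3, 16)),
    PullRequest("Fix typo in email", datetime(2024, 1, 2, 9),
                datetime(2024, 1, 2, 12), datetime(2024, 1, 2, 13),
                datetime(2024, 1, 2, 14)),
    PullRequest("Upgrade auth library", datetime(2024, 1, 4, 9),
                datetime(2024, 1, 4, 10), datetime(2024, 1, 4, 12),
                datetime(2024, 1, 8, 12)),
]

for pr in example_prs:
    print(pr.title, cycle_time(pr), "slowest stage:", slowest_stage(pr))
```

The median alone hides the interesting part: in this made-up data, one pull request waited two days for review and another waited four days for a deployment. Those are the kinds of details that start a useful conversation.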

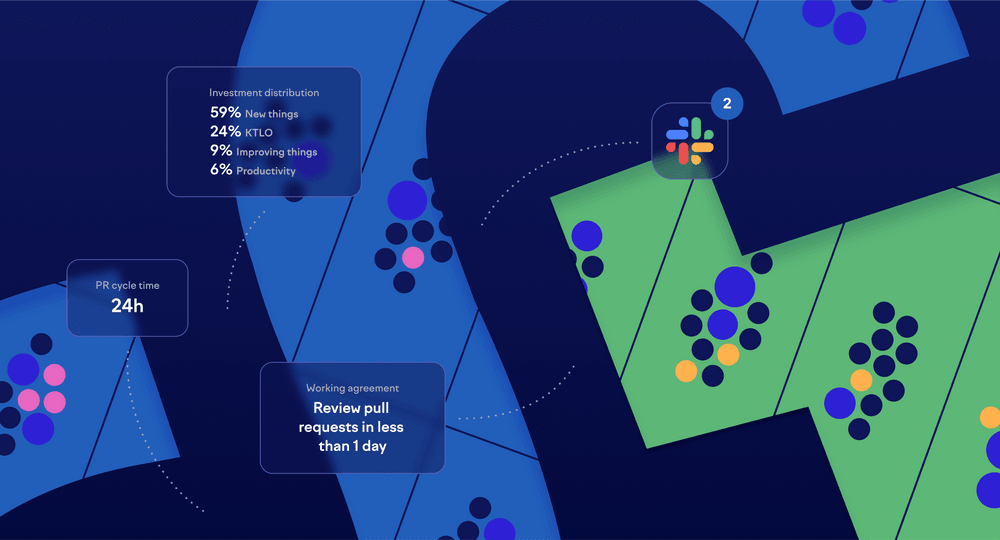

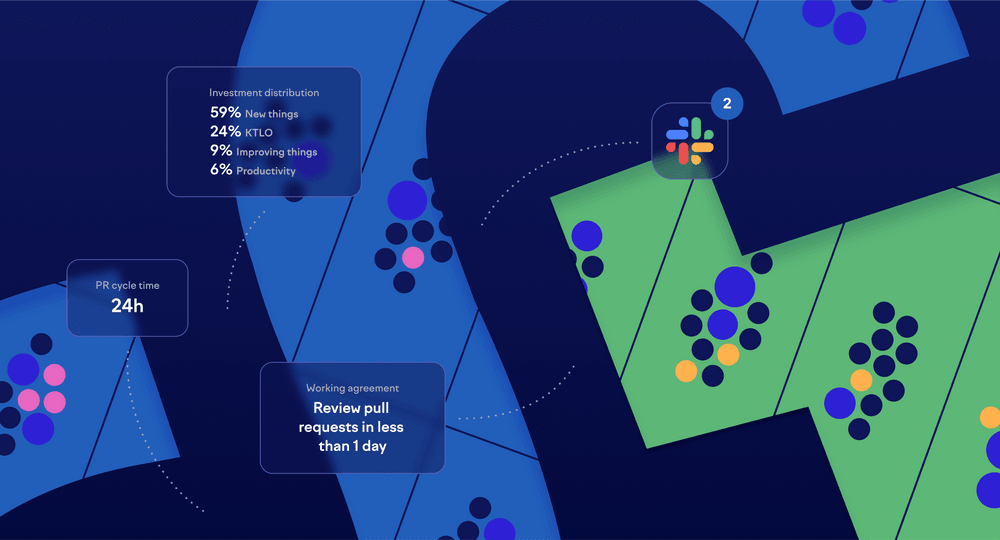

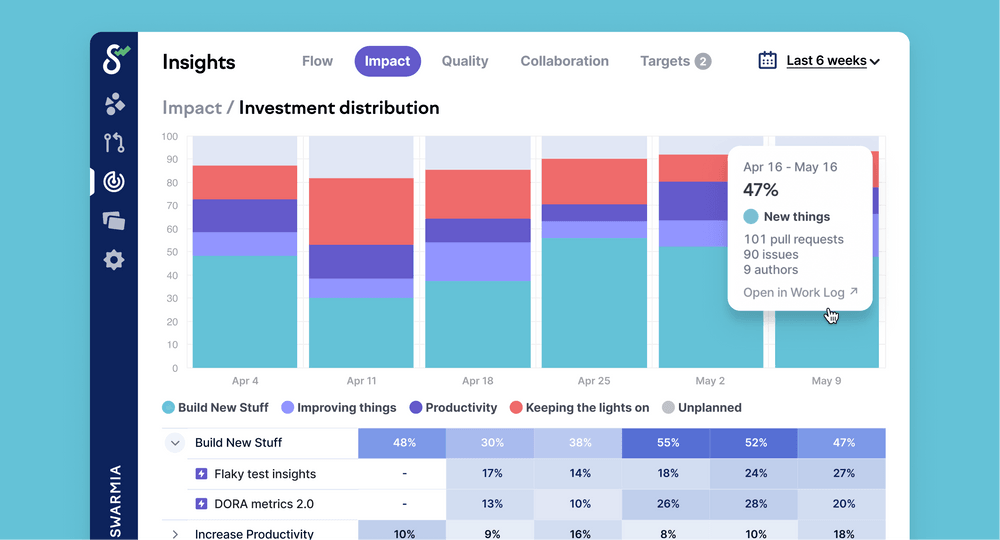

Investment distribution

Balancing different types of work is one of the key questions empowered product teams face. You’ll want to make sure that you’re shipping customer-facing features on a regular cadence, but you also can’t afford to neglect other, equally important types of work.

Investment distribution helps you understand how much you’re investing in mandatory “keeping the lights on” type of work, and what kind of issues are contributing to that. Once you’re able to identify patterns, you can do work to improve the productivity of your team. Finally, each feature has a cost beyond its initial launch, so it’s useful to understand how much you’re able to build new things vs. improve existing things. Both are important, but often the team’s scope is too broad and they don’t have the capacity to do both successfully.

Again, you’ll want to make sure that you can drill down from the high level. It’s useful for the team to see which actual tasks contributed to these categories, and understand how they should shift their priorities to find balance.

This view also helps developers make a case for refactorings that improve their productivity in the long run.
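The investment distribution and drill-down described above could be sketched roughly as follows. The category labels, the `days_spent` field, and the sample issues are assumptions for illustration. In practice the categories would come from however your team labels its work.

```python
from collections import defaultdict


def investment_distribution(issues):
    """Percentage of total effort per category, rounded to one decimal.

    Each issue is a hypothetical dict with "title", "category",
    and "days_spent" keys.
    """
    totals = defaultdict(float)
    for issue in issues:
        totals[issue["category"]] += issue["days_spent"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {
        category: round(100 * days / grand_total, 1)
        for category, days in totals.items()
    }


def issues_in(issues, category):
    """Drill down: the issues behind one category, largest first."""
    matching = [i for i in issues if i["category"] == category]
    return sorted(matching, key=lambda i: i["days_spent"], reverse=True)


example_issues = [
    {"title": "Team dashboards", "category": "new things", "days_spent": 6},
    {"title": "CSV import", "category": "new things", "days_spent": 4},
    {"title": "Faster search", "category": "improving existing", "days_spent": 5},
    {"title": "Rotate certificates", "category": "keeping the lights on", "days_spent": 3},
    {"title": "Flaky CI cleanup", "category": "keeping the lights on", "days_spent": 2},
]

print(investment_distribution(example_issues))
for issue in issues_in(example_issues, "keeping the lights on"):
    print(issue["title"], issue["days_spent"])
```

The percentages give the high-level picture, while `issues_in` shows which tasks actually make up a category. That second view is what the team needs to decide where to shift its priorities.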

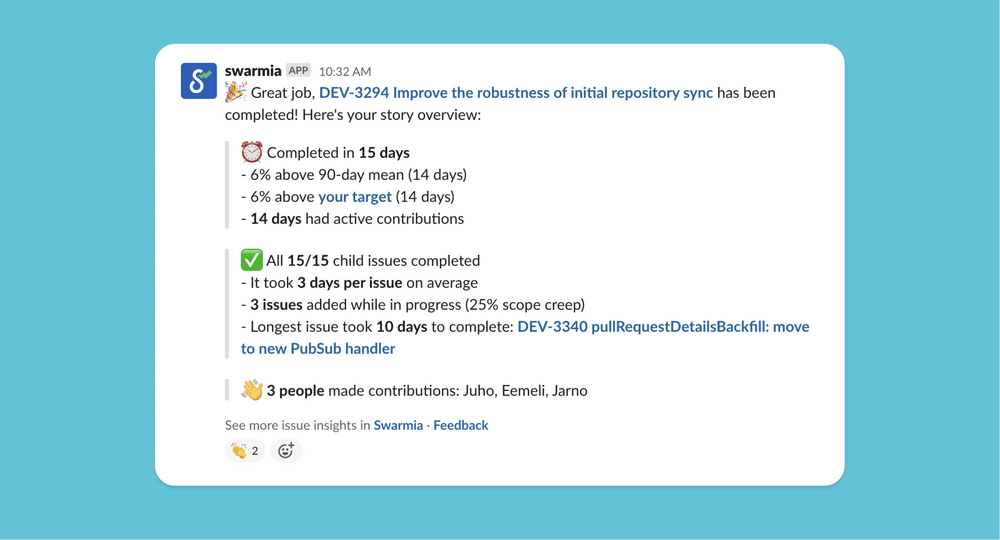

Focus on the work, not the numbers

At the end of the day, numbers are just numbers. And rather than treating these productivity measures as targets to hit, you’ll want to use them as a way to learn as a team, start conversations, and elevate the quality of your discussions.

Every time the team finishes a feature (or a user story/task), it’s good to look at it objectively and drill down into details to understand what really happened. These discussions — and not the numbers — are what help teams improve their workflow, collaboration, and productivity over time.

Implementing software development productivity metrics in practice

At Swarmia, we’ve been building our developer productivity and developer experience solution together with some of the best software organizations in the world, including Miro, Docker, and Webflow.

If you’re interested in implementing these recommendations for your team, schedule a demo or try it out for yourself with a free 14-day trial.
