Engineering benchmarks: A guide to improving time to deploy

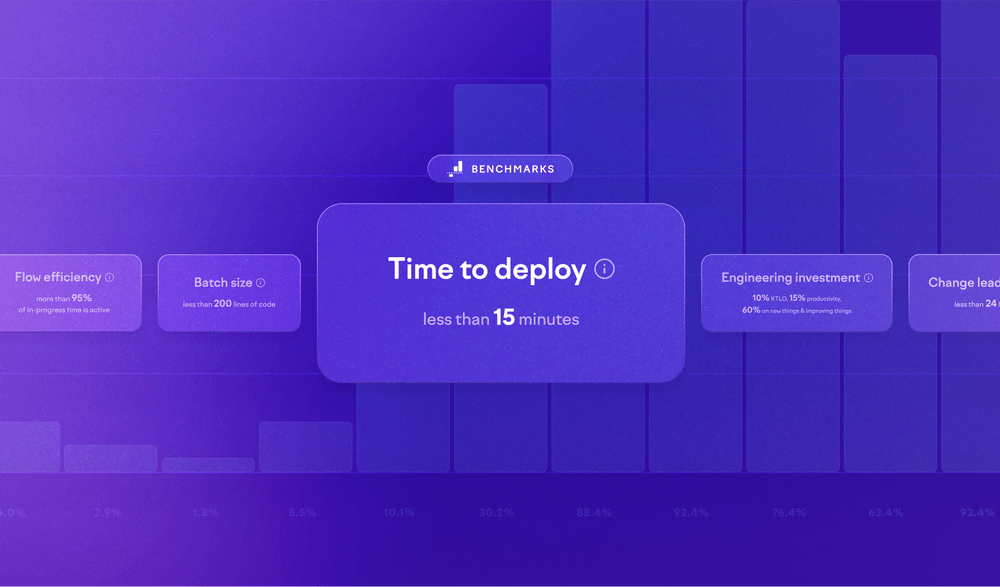

When you’re trying to improve the effectiveness of your software engineering organization, a handful of metrics can help you identify specific opportunities. One of those metrics is time to deploy: the time from when a change is approved for deployment from a development perspective (typically when code review is complete) until it’s deployed to production. This metric includes any manual QA that happens after code review but before code is deployed to production.
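As a rough illustration of how the metric could be computed, here is a minimal Python sketch. The timestamps are made-up sample data, and summarizing with the median is an assumption chosen for illustration (it is less sensitive to a single slow deploy than the mean), not a prescribed method.

```python
from datetime import datetime, timedelta
from statistics import median


def time_to_deploy(approved_at: datetime, deployed_at: datetime) -> timedelta:
    """Time from approval (e.g. code review passed) until the change is live."""
    if deployed_at < approved_at:
        raise ValueError("deployed_at must not precede approved_at")
    return deployed_at - approved_at


# Hypothetical sample data: (approved, deployed) timestamps for three changes.
changes = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 12)),
    (datetime(2024, 5, 1, 11, 30), datetime(2024, 5, 2, 10, 0)),
    (datetime(2024, 5, 2, 14, 0), datetime(2024, 5, 2, 14, 20)),
]

durations = [time_to_deploy(approved, deployed) for approved, deployed in changes]

# Summarize in minutes; the one overnight deploy does not dominate the median.
median_minutes = median(d.total_seconds() / 60 for d in durations)
```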

Time to deploy directly affects a team’s ability to deploy frequently: tautologically speaking, if deployments take more than a day, the team will never deploy more than once a day. It also influences the time to recover from an incident.

The most effective software teams deploy to production multiple times daily. Their deployments tend to take less than 15 minutes, and all of that time is hands-off, meaning engineers can go about their day in the meantime.

In many software engineering organizations, reality is far, far from this ideal state. Fortunately, the solutions tend to be predictable, if not necessarily trivial to implement: things like feature flags, CI/CD, automated tests, and a DevOps culture where a team owns its deployment destiny.

In this post, we’ll discuss the common tactics for improving deployment times.

Ship smaller things

Large changes are difficult to test, review, and deploy. They increase the risk of deployment failures and production bugs. Smaller changes demonstrably move through a delivery system faster than large changes. This is especially true when manual QA is involved.

Smaller, incremental changes are easier to test, review, and deploy, minimizing the potential impact of issues and reducing overall risk. Break large work items into small tasks that can be ready to deploy within a day or two after you start them. Use feature flags to decide when to expose new functionality to actual users.
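To make the feature flag idea concrete, here is a minimal sketch in Python. The flag names, the in-memory `FLAGS` store, and the percentage rollout scheme are all assumptions for illustration; a real setup would more likely read flags from configuration or a dedicated flag service.

```python
import hashlib

# Hypothetical in-memory flag store (illustrative names and values).
FLAGS = {
    "new-checkout": {"enabled": True, "rollout_percent": 25},
    "dark-mode": {"enabled": False, "rollout_percent": 100},
}


def is_enabled(flag_name: str, user_id: str, flags: dict = FLAGS) -> bool:
    """Return True if the flag is switched on for this particular user."""
    flag = flags.get(flag_name)
    if flag is None or not flag["enabled"]:
        return False  # unknown or disabled flags default to off
    # Hash flag name + user id so each user lands in a stable bucket in [0, 100).
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < flag["rollout_percent"]


def checkout(user_id: str) -> str:
    # The new code path is deployed, but only exposed where the flag allows it.
    if is_enabled("new-checkout", user_id):
        return "new checkout flow"
    return "old checkout flow"
```

The point of the design is that deploying the code and releasing the feature become separate decisions: unfinished work can ship behind a disabled flag without affecting users.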

Once you’re done with a small task, ask yourself: what stops me from shipping it right now? The answers will reveal the parts of your process that are getting in your way and ultimately impacting your change lead time.

Get manual QA out of the way

It’s easy (and typical) for a manual quality assurance (QA) process to balloon over time, slowing down the deployment pipeline and leading to ever-increasing deploy times. Even though it may have made sense at some point, what got you here isn’t going to get you there. Manual QA will ruin your ability to iterate quickly at larger scales.

Automate pre-release testing to streamline the QA process. By implementing automated tests, you can quickly validate code changes and reduce the reliance on time-consuming manual testing. Prioritize testing the most important functionalities, such as user login, to catch critical issues early. Adopting a "fail fast" approach ensures problems are identified and addressed swiftly.

That doesn’t mean manual testing has no place. Manual exploratory testing in the production environment can uncover subtle issues that automated tests might miss, enhancing overall software quality. Remember, though, that not every issue you detect with manual QA is worth blocking (or reverting) a deployment over. As your time to deploy improves, you’ll find that you can fix any important bugs soon enough; only urgent issues should cause you to block or revert a deploy.

Own your deployments

When the deployment process is outside of the hands of the team that has code to deploy, one of two things is true: either you have an amazing platform that you’ve invested in and that teams are broadly using, or you have a mess.

Lack of ownership over the product as a whole, plus siloed responsibilities and differing practices and priorities across product and deploy teams, all come together to make it hard for a product team to improve on its own.

In a DevOps culture, development and operations teams are one and the same: one team owns the whole process. Teams that deploy their code independently — and that “carry the pager” for when something goes wrong — are accountable to both sides of the process, development and deployment. This gives the team the autonomy to make improvements whenever they feel their existing process is slowing them down.

Automate, automate

Manual deployment processes are prone to errors and inefficiencies, leading to longer deployment times and increased risk. To speed up your deployments, you must eliminate the need for a human to monitor the process.

Embrace automation to enhance deployment efficiency. Start by implementing Continuous Integration/Continuous Deployment (CI/CD) pipelines to ensure that code changes are continuously tested and deployed. With CI/CD, you can write a series of steps to be performed, checks that need to pass before moving to the next deployment phase, and much more. GitHub Actions, GitLab CI, and Jenkins are some of the most common CI/CD solutions, but many exist.
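The idea of "steps plus checks that gate the next phase" can be sketched in plain Python. This is only a conceptual model, not the configuration format of GitHub Actions, GitLab CI, or Jenkins; the stage names and the placeholder steps are hypothetical.

```python
from typing import Callable

Step = Callable[[], bool]  # a step returns True on success


def run_pipeline(stages: list[tuple[str, list[Step]]]) -> tuple[bool, list[str]]:
    """Run stages in order; a stage only starts if everything before it passed."""
    completed: list[str] = []
    for stage_name, steps in stages:
        for step in steps:
            if not step():
                return False, completed  # stop here: later stages never run
        completed.append(stage_name)
    return True, completed


# Hypothetical stages; each lambda stands in for a real command
# (run the test suite, deploy to staging, run smoke tests, and so on).
stages = [
    ("test", [lambda: True, lambda: True]),
    ("staging", [lambda: True]),
    ("production", [lambda: True]),
]
ok, completed = run_pipeline(stages)
```

Because no human has to watch a stage finish and start the next one, the whole run is hands-off until something fails.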

Infrastructure as Code (IaC) enables consistent and reproducible environments, reducing the need for repetitive and risky manual configuration. Tools such as Terraform, OpenTofu, CloudFormation, and others allow you to specify all the resources needed for a given environment in code, and your deployment process can then be responsible for ensuring the necessary resources are automatically provisioned.

Finally, make it easy to revert to the last stable build, so that deployment issues or major production bugs can be addressed quickly and with minimal downtime.

Invest in your development environments

You know the classic: “It works on my machine.” It’s incredibly common for a company to get a long way with individual developers maintaining their own development environments. Issues arise when those development environments become difficult to maintain and, more importantly, out of sync with how the production environment works. The issues compound as more engineers join the organization.

When dev and production vary, surprising issues can get in the way of deployments — for example, if production doesn’t have a dependency that was available during development. Standardizing development environments to be as “prod-like” as possible makes it less likely that environment differences will slow down deployments.

Solutions like GitHub Codespaces or VS Code Remote Development are one approach to this: the developer still works in a full IDE locally, but the working tree lives in the cloud, not on the developer’s machine.

Another challenge, and one that even some very successful companies haven’t solved, is achieving data parity across environments: making sure the data available in development and testing environments is substantially similar to production data. Without it, it can be very difficult to test end-to-end workflows outside of the production environment. The details of a solution depend on the security and privacy requirements of the application; recognize, too, that crafty engineers will find ways to get the data they need to test their changes, and you may not like their methods. Provide a golden path so they don’t need to make up their own.

Finally, if your product has a meaningful user interface, use branch previews to create temporary environments where people within the company can view running code from a feature branch. This capability is game-changing because it lets you get more eyes on a change long before it makes it to production.

Looking ahead

If your codebase is growing, you will never be “done” with keeping your deploy times in check. Once you have the basics from above in place, here are some more things to explore:

Selective test execution

Run only the relevant subset of tests based on the changes made to the codebase, rather than executing the entire test suite. You speed up the testing process by focusing on the areas affected by recent code changes, leading to quicker feedback and faster deployments.
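One simple way to approach this is a mapping from source areas to the tests that cover them. The sketch below assumes a hypothetical `src/<component>/...` layout and a hand-maintained `TEST_MAP`; real tools often derive this mapping from a dependency graph instead.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from components to the test directories that cover them.
TEST_MAP = {
    "billing": ["tests/billing"],
    "auth": ["tests/auth", "tests/integration/login"],
}
FULL_SUITE = ["tests"]


def select_tests(changed_files: list[str]) -> list[str]:
    """Pick the test directories to run for a set of changed files."""
    selected: set[str] = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Assumed layout: src/<component>/<file...>
        if len(parts) >= 3 and parts[0] == "src" and parts[1] in TEST_MAP:
            selected.update(TEST_MAP[parts[1]])
        else:
            # Unknown area (shared config, build files, ...): be safe, run everything.
            return list(FULL_SUITE)
    return sorted(selected)
```

Falling back to the full suite for unmapped files trades some speed for safety, which is usually the right default while the mapping is still incomplete.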

Blue/green deploys

Maintain two identical production environments (blue and green) and switch traffic between them during deployments. This minimizes downtime and reduces the risk associated with new releases, allowing teams to deploy changes more quickly and confidently.
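The switching logic can be modeled in a few lines of Python. This is a toy sketch: `BlueGreenRouter` and the `health_check` callback are hypothetical names, and in practice the "switch" would be a load balancer or DNS change rather than an attribute assignment.

```python
from typing import Callable, Optional


class BlueGreenRouter:
    """Tracks which of two environments receives live traffic."""

    def __init__(self, blue_version: str) -> None:
        self.versions: dict[str, Optional[str]] = {"blue": blue_version, "green": None}
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Install a version on the idle environment, then switch if it is healthy."""
        target = self.idle
        self.versions[target] = version
        if not health_check(target):
            return False  # live traffic was never touched
        self.live = target
        return True

    def rollback(self) -> None:
        """Point traffic back at the other environment, which holds the previous version."""
        if self.versions[self.idle] is None:
            raise RuntimeError("no previous version to roll back to")
        self.live = self.idle
```

For example, starting with `BlueGreenRouter("v1")`, a successful `deploy("v2", ...)` makes green live while blue still holds `v1`, so `rollback()` is just another switch.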

Automatically revert

If key production metrics indicate a problem, automatically revert to the last stable version. This reduces the time needed to recover from deployment issues, minimizes the impact on users, and allows teams to maintain a faster deployment cadence.
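A minimal sketch of the decision, assuming two example metrics (error rate and p95 latency) with made-up thresholds. Which metrics count as "key" and where the limits sit are assumptions that each team would need to set for itself.

```python
def version_to_serve(
    current: str,
    last_stable: str,
    error_rate: float,
    p95_latency_ms: float,
    max_error_rate: float = 0.01,       # hypothetical threshold: 1% of requests
    max_p95_latency_ms: float = 500.0,  # hypothetical threshold
) -> str:
    """Return the version that should be serving traffic given current metrics."""
    healthy = error_rate <= max_error_rate and p95_latency_ms <= max_p95_latency_ms
    # Keep the new release only while it stays within both limits.
    return current if healthy else last_stable
```

A monitoring job could call this shortly after each deploy and trigger the revert whenever the result differs from the version currently running.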

Merge queues

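Automate the process of merging code changes and deploying them to production. A merge queue lines up approved changes and tests each one against the latest main branch before merging it, so changes that would break the build are caught before they land. By managing code integration and deployment in a continuous and systematic way, merge queues help reduce bottlenecks and ensure frequent and reliable deployments.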
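Conceptually, a merge queue behaves something like the Python sketch below. It is a simplification: changes are processed strictly one at a time, and `passes_ci` is a hypothetical callback standing in for a real CI run on the candidate branch.

```python
from collections import deque
from typing import Callable


def process_merge_queue(
    main: list[str],
    queue: deque[str],
    passes_ci: Callable[[list[str]], bool],
) -> tuple[list[str], list[str]]:
    """Merge queued changes in order, testing each on top of the current main."""
    rejected: list[str] = []
    while queue:
        change = queue.popleft()
        candidate = main + [change]  # main as it would look after this merge
        if passes_ci(candidate):
            main = candidate
        else:
            rejected.append(change)  # removed from the queue; main stays green
    return main, rejected
```

Because each change is tested against what main will actually contain, two changes that pass on their own but conflict with each other cannot both land.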

Incremental builds

Compile only the changed parts of the codebase rather than rebuilding the entire application. For large codebases, this significantly speeds up the build process, which means faster feedback and shorter deployment times.
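One common way to detect what changed is to compare content hashes against a cache from the previous build. The sketch below assumes that approach; it deliberately ignores dependencies between modules, which a real build system would also need to track.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Content hash used to detect whether a source file changed."""
    return hashlib.sha256(content).hexdigest()


def plan_build(sources: dict[str, bytes], cache: dict[str, str]) -> list[str]:
    """Return the sources that need rebuilding and record their new hashes."""
    to_rebuild: list[str] = []
    for name, content in sorted(sources.items()):
        digest = fingerprint(content)
        if cache.get(name) != digest:
            to_rebuild.append(name)  # new or modified since the last build
            cache[name] = digest
    return to_rebuild
```

On a second run with unchanged sources, `plan_build` returns an empty list, so nothing is recompiled.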

Further reading

If you’ve gotten this far and these sorts of changes seem completely unattainable, I recommend reading The Phoenix Project, by Gene Kim, Kevin Behr, and George Spafford. It’s the fictional but all-too-real story of a company with a horribly broken deploy process, and how they turned it around.

Summary

Swarmia’s software engineering benchmarks focus on just a handful of metrics — including time to deploy — that help you understand how effectively you’re operating. Focusing first on these key metrics will help you identify areas of maximum impact when it comes to improving engineering effectiveness.
