Buyer’s guide for software engineering intelligence platforms

Your engineering team used to ship faster. You know it, your team knows it, and now you’d like to do something about it. And despite the AI coding assistants and automated workflows that promised to speed things up, many engineering organizations face a reality of longer delivery times and delayed projects.

This isn’t specifically a you problem. As codebases grow and teams expand, software development naturally slows without deliberate intervention.

Pinpointing exactly where things are breaking down is incredibly difficult. You might suspect issues with your review process, or that teams are taking on too much work at once, but without reliable data, these remain educated guesses rather than concrete evidence you can act on.

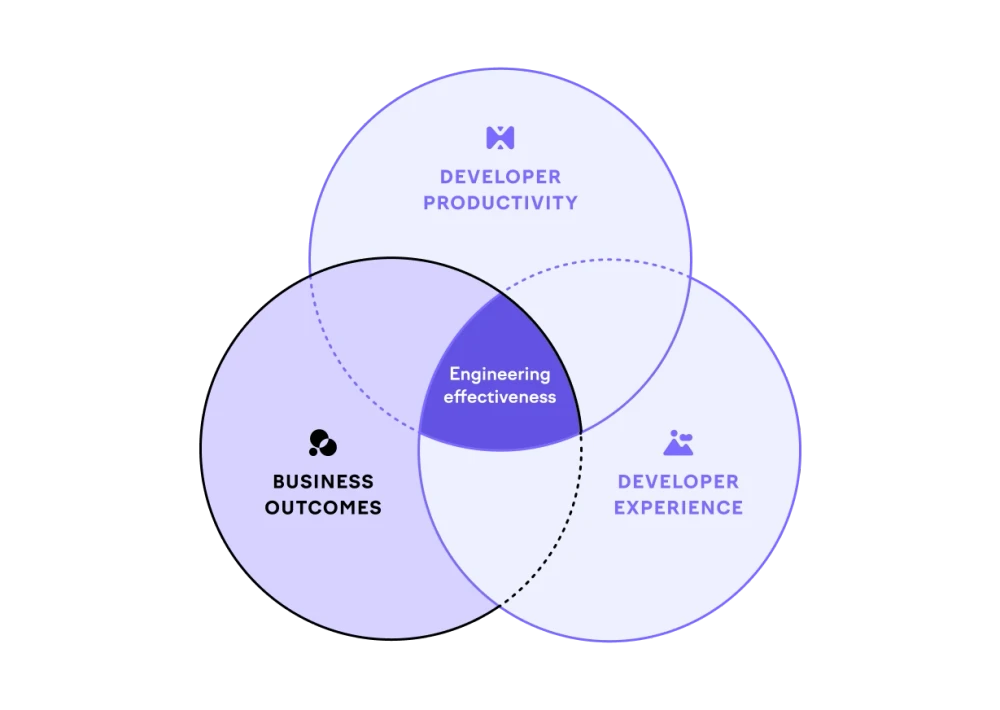

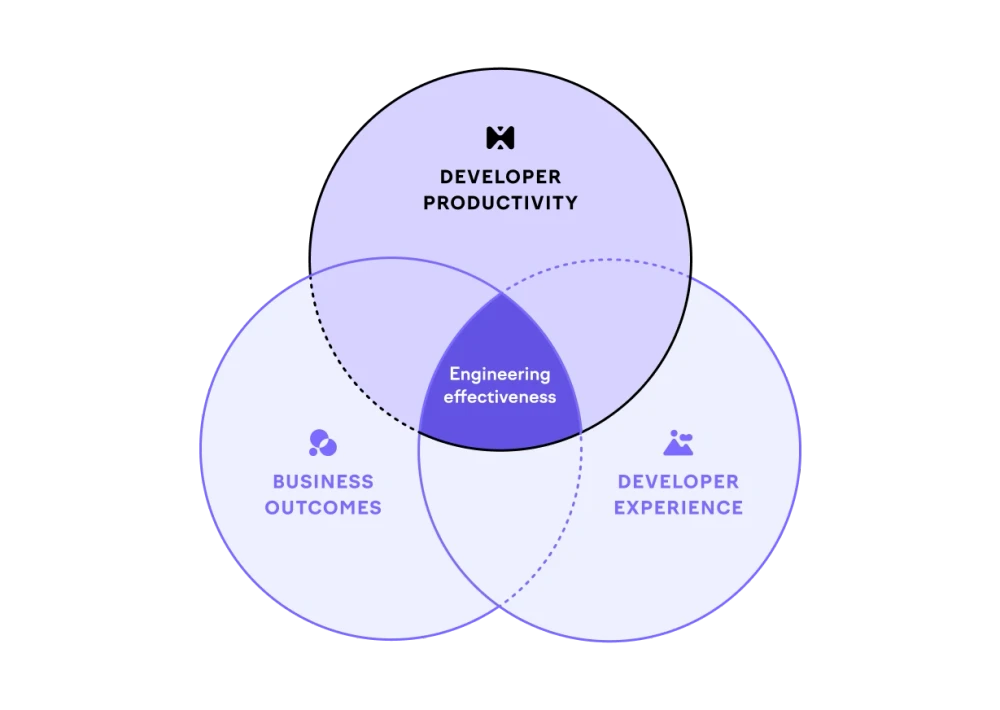

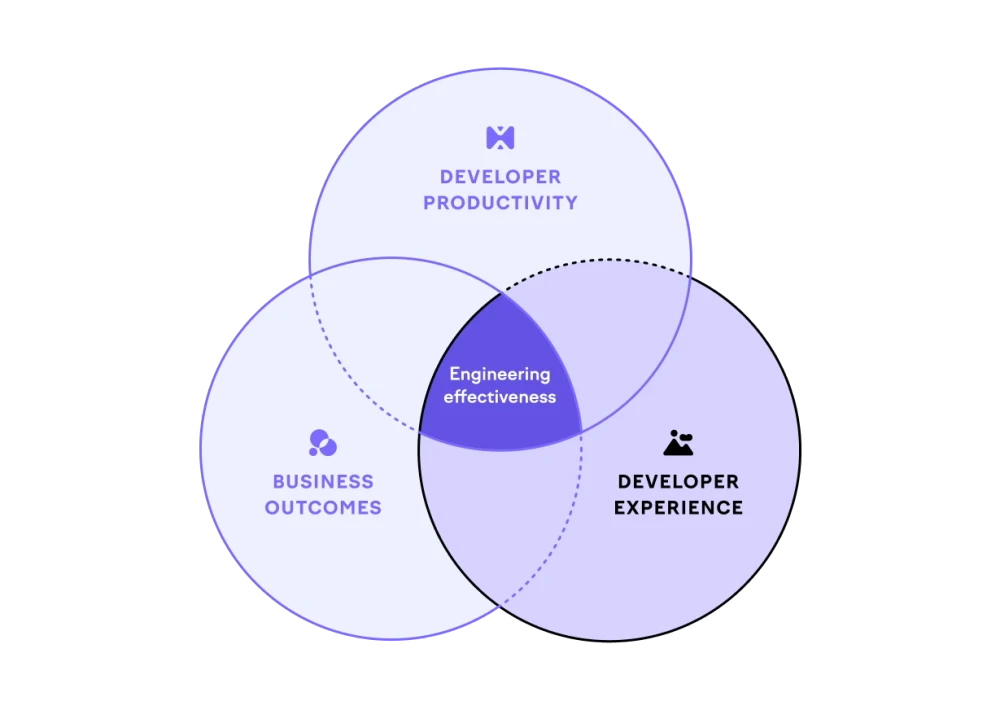

This is where software engineering intelligence platforms come in. A good platform connects the three pillars of engineering effectiveness that are often treated separately:

- Business outcomes: are we building the right things?

- Developer productivity: are we building things without getting stuck?

- Developer experience: are we achieving this without burning people out?

This guide covers everything you need to know about selecting the right software engineering intelligence platform for your business — including a helpful tool to objectively compare your shortlist of vendors, and a business case template to get your ideas across to leadership.

Let's go.

Part 1: What problem are you trying to solve?

It can be tempting to rush straight into applying the latest engineering metrics framework and call it a day, but identifying your organization’s unique challenges first will serve you better.

- Visibility challenges: Does leadership struggle to understand what’s happening across teams without endless status meetings and reports?

- Delivery slowdown: Are code reviews taking too long, deployments becoming less frequent, and releases taking longer than ever?

- Developer frustration: Are engineers spending more time in meetings and battling technical debt or bug fixes than building new features — or have you noticed decreased satisfaction and higher turnover?

- Business misalignment: Are your engineering efforts clearly mapped to business priorities, or is it difficult to justify investments or demonstrate impact?

What kind of organization do you want to build anyway?

Once you’ve figured out your organization’s key challenges, you’ll end up at a fork in the road, with one question to answer: what are we going to do about it?

The monitoring approach

You could focus on top-down monitoring, individual performance metrics, and treating symptoms rather than root causes. This approach might feel familiar if you’ve worked in organizations where:

- Individual developers are ranked or compared against each other

- Metrics are used primarily for performance reviews or cost-cutting decisions

- Teams feel like they’re being watched rather than supported

- Leadership focuses on outputs (lines of code, tickets closed) rather than outcomes

While this route may provide short-term visibility, it often creates a culture of fear and increases the risk of gaming the system just to get by.

The empowerment approach

On the other hand, you could choose to emphasize trust, autonomy, and ongoing, systematic improvement. Engineering organizations that choose this approach often notice:

- Teams using data to identify and solve their own bottlenecks

- Metrics focused on team and organizational performance, not individual comparison

- Developers who feel supported and understand how their work connects to business goals

- Leadership that gains visibility without micromanaging

Creating the right conditions for engineering teams isn’t just about being “nice” to developers — it directly impacts your ability to deliver more and better value to customers.

Part 2: The three pillars of engineering effectiveness

Many software engineering intelligence platforms focus on a single dimension of effectiveness — usually developer productivity metrics like deployment frequency or cycle time.

But engineering effectiveness doesn’t happen in isolation. Your teams might be deploying code daily, but if they’re building features nobody uses, that speed becomes meaningless. You could have perfect alignment with business goals, but if it takes you months to ship anything, you’ll miss every opportunity. And if you’re burning out your teams, you’re borrowing speed from the future — which can only end one way.

As mentioned earlier, truly effective engineering organizations excel across the three interconnected pillars, which is where we’ll focus our attention for this part of the guide. Onwards.

Business outcomes

When you’re evaluating how well a platform helps you connect engineering work to business value, here’s what matters:

- Investment balance insights: Beyond basic categorization, you need to understand if your engineering investments align with business priorities. Can the platform show you that Team A spends 70% of their time on maintenance while Team B focuses on new features? Can you track whether your stated goal of “reducing technical debt by 20%” is actually happening? Look for platforms that make these imbalances visible, actionable, and ideally automated.

- Cross-team initiative tracking: Major business initiatives rarely fit within a single team’s scope. The platform should automatically connect work across multiple teams, repositories, and projects to show unified progress. You want to see which teams are contributing, where dependencies are creating delays, and whether the initiative is on track — all based on actual development activity rather than manual status updates.

- Real-time bottleneck data: Work gets stuck, but you shouldn’t have to wait for retrospectives to find out where. Look for platforms that identify where tasks accumulate, which handoffs cause delays, and what types of work consistently slow down.

- Financial reporting capabilities: If you need software capitalization reports, the platform should generate them without creating overhead (think: timesheets) for engineering teams. It should automatically categorize development work as capitalizable or operational expense based on your rules, then combine this with developer effort (e.g. Full-Time Equivalent, or FTE) data to produce audit-ready reports.

Understanding whether you’re building the right things requires platforms that connect engineering activity to measurable business value — not just activity for activity’s sake. The platform you choose should make this connection visible, actionable, and continuous.
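
To make the investment balance idea a bit more tangible, here is a minimal sketch of the underlying arithmetic: each team's share of effort per category. The team names, category labels, and effort numbers are made up for illustration, and a real platform would derive them from your issue tracker and version control data rather than a hand-written list.

```python
from collections import defaultdict

def investment_balance(work_items):
    """Return {team: {category: share}} where each team's shares sum to 1.0."""
    totals = defaultdict(lambda: defaultdict(float))
    for team, category, effort_days in work_items:
        totals[team][category] += effort_days

    balance = {}
    for team, by_category in totals.items():
        team_total = sum(by_category.values())
        if team_total == 0:
            continue  # skip teams with no recorded effort
        balance[team] = {
            category: effort / team_total
            for category, effort in by_category.items()
        }
    return balance

# Hypothetical effort data: (team, category, effort in person-days)
work_items = [
    ("Team A", "maintenance", 35.0),
    ("Team A", "new features", 15.0),
    ("Team B", "maintenance", 10.0),
    ("Team B", "new features", 40.0),
]

balance = investment_balance(work_items)
for team, shares in balance.items():
    summary = ", ".join(f"{cat}: {share:.0%}" for cat, share in shares.items())
    print(f"{team} -> {summary}")
```

With this sample data, Team A lands on the 70% maintenance split mentioned above, which is the kind of imbalance you'd want a platform to surface without anyone filling in a spreadsheet.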

Developer productivity

When evaluating how well a platform addresses developer productivity, here’s what matters:

- Team-level metrics, not individual tracking: Developer productivity is about optimizing how work flows through your engineering system, not monitoring individual output. Look for platforms that aggregate insights at the team level and focus on system bottlenecks rather than personal metrics. If a platform emphasizes individual developer rankings or comparisons, that’s a red flag.

- DORA metrics and beyond: The platform should automatically calculate the four DORA metrics: deployment frequency, lead time for changes, change failure rate, and mean time to recovery. But metrics alone don’t tell the whole story. You need platforms that help you understand why your lead time is 30 days instead of 7, not just that it is.

- SPACE framework support: While DORA metrics focus on delivery performance, the SPACE framework provides a more holistic view of productivity. Look for platforms that address multiple SPACE dimensions — not just Activity (commits, PRs) but also Satisfaction (developer surveys), Performance (outcome metrics), Communication & collaboration (review patterns, team interactions), and Efficiency & flow (wait times, focus time).

- Flow efficiency visualization: Can the platform show you where work actually gets stuck? Look for solutions that track how much time work spends actively being developed versus sitting in queues.

- Real-time feedback mechanisms: Teams need to know about productivity issues while they can still fix them. Can the platform send automated alerts when pull requests sit unreviewed for too long? Will it notify teams when work-in-progress limits are exceeded? Look for proactive notifications that help teams maintain good habits without constant dashboard monitoring.

- Core workflow integration: The platform should integrate with your essential development tools — version control, issue tracking, and team communication. While you don’t need integration with every tool in your stack, the platform must capture enough data from your core workflows to provide meaningful insights about where work flows smoothly and where it gets stuck.

- Insights you can do something with (not just data): Raw metrics don’t drive improvement — understanding what to do about them does. Look for platforms that translate data into specific recommendations. Instead of just showing “high cycle time,” can it identify what caused it and what you could do next?

- Support for continuous improvement culture: The platform should help teams set their own productivity goals and track progress. Can teams establish working agreements about review times or deployment frequency? Does it support retrospectives with relevant data? Look for features that empower teams to own their improvement journey.
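
If the four DORA metrics feel abstract, here is a simplified sketch of how they could be computed from a list of deployment records. The `Deployment` fields, the sample data, and the choice of the median for lead time are assumptions for illustration, not how any particular platform calculates them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed

def dora_metrics(deployments, period_days):
    """Compute simplified versions of the four DORA metrics for one period."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deployments) if deployments else None,
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }

# Hypothetical two-week sample
start = datetime(2024, 1, 1, 9, 0)
deployments = [
    Deployment(start, start + timedelta(days=1)),
    Deployment(
        start + timedelta(days=2),
        start + timedelta(days=5),
        failed=True,
        restored_at=start + timedelta(days=5, hours=2),
    ),
    Deployment(start + timedelta(days=6), start + timedelta(days=8)),
    Deployment(start + timedelta(days=9), start + timedelta(days=13)),
]

metrics = dora_metrics(deployments, period_days=14)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Numbers like these only tell you that lead time is long. The "why" comes from breaking that lead time into its stages, which is where the other criteria in this list come in.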

To understand developer productivity, you’ve got to treat it as a system optimization challenge, not a people management exercise. This means that the right platform will help your teams identify and eliminate bottlenecks collaboratively, and create conditions where good work can flow smoothly from idea to production.
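
One way to picture the system view is flow efficiency: the share of elapsed time a piece of work spends being actively worked on rather than waiting in a queue. The sketch below assumes you have the time an issue spent in each workflow state; the state names, the split into active and waiting states, and the hours are all hypothetical.

```python
# Assumption: which workflow states count as active work. Adjust to your own workflow.
ACTIVE_STATES = {"in progress", "in review"}

def flow_efficiency(state_durations):
    """Share of total elapsed time spent in active states (0.0 to 1.0)."""
    total = sum(hours for _, hours in state_durations)
    if total == 0:
        return None  # no recorded time, so efficiency is undefined
    active = sum(hours for state, hours in state_durations if state in ACTIVE_STATES)
    return active / total

def longest_queue(state_durations):
    """The waiting state where the work item spent the most time, or None."""
    waits = [(state, hours) for state, hours in state_durations if state not in ACTIVE_STATES]
    return max(waits, key=lambda item: item[1]) if waits else None

# Hypothetical history of one issue: (workflow state, hours spent in it)
issue_history = [
    ("in progress", 6.0),
    ("waiting for review", 20.0),
    ("in review", 2.0),
    ("waiting for deploy", 12.0),
]

efficiency = flow_efficiency(issue_history)
bottleneck = longest_queue(issue_history)
print(f"Flow efficiency: {efficiency:.0%}")
print(f"Longest queue: {bottleneck[0]} ({bottleneck[1]:.0f}h)")
```

In this made-up example, only 8 of 40 hours are active work, and the biggest queue is the wait for review. That points at a process to fix, not a person to blame.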

Developer experience

Developer experience used to be considered a luxury: something nice to have once you’d solved all the “real” problems. Thankfully, organizations are finally recognizing that developer experience isn’t separate from productivity and business outcomes. It’s the foundation that makes everything else possible.

Think about it this way: you can build the world's fastest deployment pipeline, but if developers spend their days in meetings instead of writing code, that pipeline sits empty. You can have perfect alignment with business priorities, but if engineers are burned out and planning their exit, those priorities won’t get built.

With that in mind, here are a few developer experience green flags to look for in your buying journey:

- Survey capabilities that go beyond satisfaction scores: Look for platforms that can run regular developer experience surveys with specific, actionable questions rather than generic satisfaction ratings. Survey features should let you ask targeted questions about build times, code review processes, meeting load, and other concrete aspects of the development experience. They should also make it easy to track changes over time and connect survey responses to engineering metrics.

- Context and connection capabilities: Check if your shortlisted platforms show how individual work connects to larger initiatives and business outcomes. The point isn’t only project tracking; it’s helping engineers understand why their work matters.

- Team empowerment tools: Your platform should provide tools for teams to identify and solve their own workflow problems rather than just reporting problems to management. This might include working agreements that teams can set and track themselves, or retrospective tools that help teams identify patterns in their own data.

- Natural workflow integration: The platform should integrate with the tools developers already use daily (GitHub, Slack, Jira) and provide value within those tools rather than requiring constant context switching to a separate dashboard.

- Respect for developer privacy and autonomy: Developers should be able to see their own data and understand how it contributes to team metrics, but the platform shouldn’t encourage individual comparison or ranking.

Part 3: Evaluating your shortlist of software engineering intelligence platforms

This is the part where things can get tricky. Naturally, demo environments are polished, reference customers are handpicked, and every vendor will tell you their platform solves exactly your problems. Meanwhile, you’re making a decision that could impact your organization for years — not just the platform investment, but the trust and momentum you’ll build or lose with your teams.

Data quality and trust

You’d expect data accuracy to be table stakes for engineering intelligence platforms, but that’s not always the case. We’ve seen teams invest in tools with impressive demos and complex metrics, only to discover later that the numbers don’t match what’s actually happening in their org. And once stakeholders lose confidence in the numbers, it’s incredibly difficult to rebuild that trust (which, of course, means the platform often goes unused).

What to investigate

- Engineering leaders need to verify that they can trust the metrics they’ll be sharing with other executives. Can you drill down to see the individual pull requests, issues, or activities behind any number? When someone questions a metric in a leadership meeting, can you prove it’s correct? Does the platform handle edge cases like large refactoring projects or team changes gracefully?

- Engineering managers should test whether the platform shows work their teams actually did. Do the cycle times match what they observed? Are the right people credited for the right work? Can they exclude statistical outliers that would skew team metrics?

- Software engineers need to see that their work is represented accurately. Do their commits, pull requests, and code reviews show up correctly? Are they associated with the right teams and projects? Does the platform respect the nuances of how they actually work?

- Finance teams require audit-level accuracy for any reporting they’ll use. Can the platform provide detailed breakdowns of how FTE is calculated? Are the categorization rules clear and defensible?
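
To see why excluding outliers matters for trust, consider what a single forgotten pull request does to an average. The sketch below uses the common 1.5 × IQR rule to separate outliers from the rest. The cycle times are invented, and the IQR rule is just one reasonable choice, not a claim about how any specific platform filters data.

```python
from statistics import mean, median, quantiles

def split_outliers(values, k=1.5):
    """Split values into (kept, outliers) using fences at k * IQR beyond the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if low <= v <= high]
    outliers = [v for v in values if v < low or v > high]
    return kept, outliers

# Hypothetical pull request cycle times in hours; one PR sat open for weeks
cycle_times = [10, 12, 14, 15, 16, 18, 20, 22, 400]

kept, outliers = split_outliers(cycle_times)
print(f"Mean with outliers:    {mean(cycle_times):.1f}h")
print(f"Mean without outliers: {mean(kept):.1f}h")
print(f"Median (all data):     {median(cycle_times):.1f}h")
print(f"Excluded as outliers:  {outliers}")
```

The median barely moves while the mean more than triples, which is why it's worth asking vendors which aggregation they use and whether managers can see and control what gets excluded.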

Proactive insights vs. pretty dashboards

Most platforms are great at collecting data and creating visualizations, but many fall short at translating that information into actionable recommendations. Without proactive intelligence, even the most comprehensive dashboards can end up serving as digital wallpaper.

What to investigate

- Engineering leaders need platforms that surface the most important patterns without requiring constant dashboard monitoring. Does the platform proactively identify teams that might need help? Can it predict potential bottlenecks before they impact delivery?

- Engineering managers should look for contextual insights that help with day-to-day decisions. Does the platform highlight when code reviews are piling up? Can it suggest when workloads are becoming unbalanced? Does it provide insights that improve team retrospectives?

- Software engineers benefit from platforms that deliver insights where they already work. Do they get helpful notifications in Slack about pull requests that need attention? Are insights delivered at the right time to be actionable rather than distracting? Do they just tell you “cycle time is high” or do they indicate why, and suggest specific actions to improve it?

- Product managers need insights that help with planning and coordination. Can the platform predict delivery timelines based on current team capacity? Does it surface cross-team dependencies that might cause delays?
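
As a small illustration of what "proactive" can mean in practice, here is a sketch that flags pull requests waiting too long for review and turns them into notification text. The `PullRequest` fields, the 24-hour threshold, and the sample data are assumptions. Delivering the messages (for example, to a chat tool) is deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class PullRequest:
    title: str
    opened_at: datetime
    reviewed: bool

def stale_pull_requests(pull_requests, now, max_wait=timedelta(hours=24)):
    """Return unreviewed PRs that have waited longer than max_wait, oldest first."""
    stale = [
        pr for pr in pull_requests
        if not pr.reviewed and now - pr.opened_at > max_wait
    ]
    return sorted(stale, key=lambda pr: pr.opened_at)

# Hypothetical open pull requests
now = datetime(2024, 3, 15, 12, 0)
open_prs = [
    PullRequest("Fix login redirect", now - timedelta(hours=30), reviewed=False),
    PullRequest("Add billing export", now - timedelta(hours=3), reviewed=False),
    PullRequest("Upgrade build image", now - timedelta(hours=50), reviewed=True),
    PullRequest("Refactor search index", now - timedelta(hours=72), reviewed=False),
]

stale = stale_pull_requests(open_prs, now)
alerts = [
    f"'{pr.title}' has waited {(now - pr.opened_at).total_seconds() / 3600:.0f}h for review"
    for pr in stale
]
for alert in alerts:
    print(alert)
```

The logic is trivial on purpose. What you're evaluating in a platform is whether checks like this run automatically, reach people where they already work, and arrive early enough to act on.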

Implementation and time to value

“We’ll have you up and running in a few days.”

When vendors make this promise, it’s worth understanding what that really means for your organization.

Some platforms require extensive data cleanup, complex configuration, training, and even changing your internal workflows to fit the tool before you can trust what you're seeing. Others are designed to work with your existing workflows from day one, with proactive support to help you get the most value quickly.

What to investigate

- Engineering leaders need realistic timelines for seeing valuable insights. How long before the platform provides data they’d be comfortable sharing with other executives? Does the platform require extensive process changes and meticulous data hygiene to deliver value? Will this create a distraction from delivery goals?

- Engineering managers should understand what’s required from themselves and their teams during onboarding. Is there a learning curve for interpreting metrics? How much time will managers spend on configuration versus getting insights they can work with — and is configuration something that can be done independently without the vendor’s help?

- Platform teams need to know the technical requirements and ongoing maintenance burden. Does the platform require dedicated engineering resources to maintain? Do integrations break when external tools update their APIs?

Supporting all stakeholders in your engineering org

Engineering intelligence platforms should create value for everyone who interacts with them.

When software engineers find the platform useful, adoption increases and data quality improves. When managers get insights that help with coaching, team performance increases. When product managers understand engineering capacity, planning gets better. When leadership gets the visibility they need, everyone is happy. You get the picture.

What to investigate

- Engineering leaders need comprehensive organizational visibility without drowning in details. Can they see patterns across teams? Do they get insights that help with resource allocation and strategic planning? Can they easily communicate engineering impact to business stakeholders?

- Engineering managers need team-level insights that help with coaching and process improvement. Can they identify bottlenecks specific to their team? Do they get data that improves one-on-one conversations and retrospectives?

- Software engineers need tools that actually improve their daily experience, not just measure it. Do they get helpful notifications about pull requests that need attention? Can they see how their work connects to larger goals? Does the platform respect their autonomy?

- Product managers need insights that improve collaboration with engineering. Can they understand realistic delivery timelines? Do they get visibility into how technical work supports product goals?

Growth and scalability

Nothing flattens momentum like outgrowing your tools. So try to imagine where you’re going, not just where you are today. This doesn’t mean over-engineering for hypothetical future needs, but it does mean understanding how platforms handle growth and change.

What to investigate

- Engineering leaders need platforms that will grow with their organization’s ambitions. Can the platform handle increasing team sizes and complexity? Will the platform support new organizational structures as the company evolves? And importantly, will your customer success manager provide ongoing support to keep your data in good shape as your needs evolve, or are you on your own after the initial setup?

- Platform teams should understand the technical scalability requirements. How does performance change as data volume increases? Are there limits on team sizes, repository counts, or integration complexity?

- Finance teams need to understand how pricing scales (like whether vendors offer volume or multi-year discounts) and whether the platform will remain cost-effective as your organization grows. Also evaluate what’s included in the per-head cost — some vendors include customer success and change management support, while others charge separately.

Making vendor comparisons

Now comes the decision you’ve been building up to. Exciting stuff. But how do you see through sales and marketing talk (yes, we understand the irony of reading this in a company blog post) and stay objective in your decision?

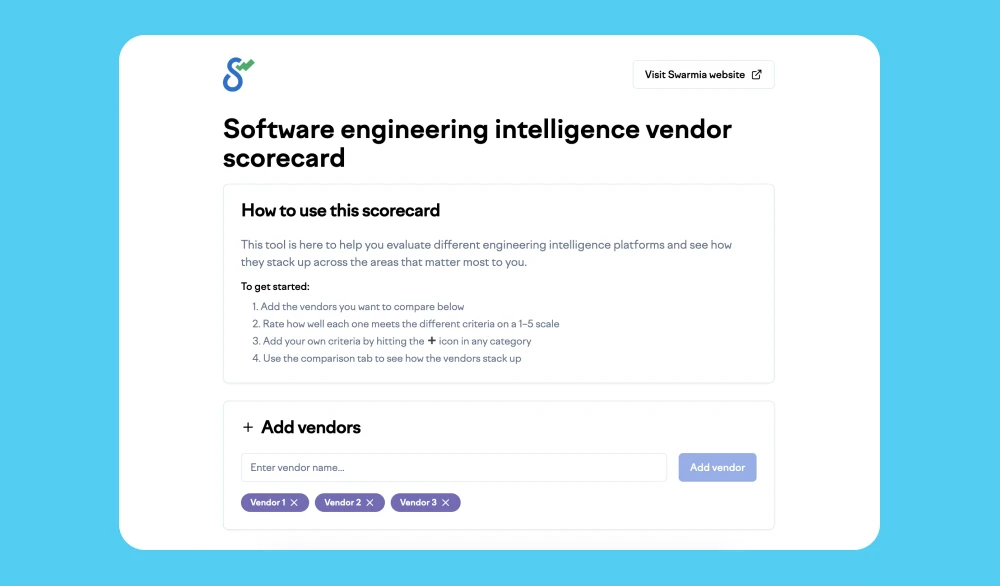

Try our vendor scorecard tool

Rather than getting lost in shiny slide decks or letting the loudest voice in the room drive what is ultimately your decision, our simple vendor scorecard tool helps you systematically evaluate platforms against areas that matter for engineering effectiveness.

Each area includes specific evaluation criteria scored on a 1-5 scale, with clear descriptions of what each score represents.

The aim of using the scorecard tool is to turn subjective preferences and gut feelings into objective comparisons while still capturing the nuances that matter for your organization.
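
If you'd like to see how this kind of scoring works under the hood, here is a minimal weighted-scoring sketch. It is not the scorecard tool itself: the area names mirror the evaluation sections of this guide, while the weights, vendor names, and scores are hypothetical and should be replaced with your own.

```python
# Hypothetical weights per evaluation area; they should reflect your own priorities.
WEIGHTS = {
    "data quality and trust": 0.30,
    "proactive insights": 0.25,
    "implementation and time to value": 0.15,
    "stakeholder support": 0.15,
    "growth and scalability": 0.15,
}

def weighted_score(scores, weights=WEIGHTS):
    """Weighted average of 1-5 scores; raises if an area is missing or out of range."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"Missing scores for: {sorted(missing)}")
    for area in weights:
        if not 1 <= scores[area] <= 5:
            raise ValueError(f"Score for '{area}' must be between 1 and 5")
    total_weight = sum(weights.values())
    return sum(weights[area] * scores[area] for area in weights) / total_weight

# Hypothetical scores agreed on by the evaluation group
vendor_scores = {
    "Vendor A": dict(zip(WEIGHTS, [5, 4, 3, 4, 3])),
    "Vendor B": dict(zip(WEIGHTS, [3, 3, 5, 4, 5])),
}

ranking = sorted(vendor_scores, key=lambda v: weighted_score(vendor_scores[v]), reverse=True)
for vendor in ranking:
    print(f"{vendor}: {weighted_score(vendor_scores[vendor]):.2f}")
```

Agreeing on the weights before anyone sees a demo is the useful part. It forces the conversation about what matters most to happen before a polished slide deck can influence it.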

Why it’s hard to quantify the true ROI of engineering intelligence platforms

We could create elaborate formulas with dozens of variables and impressive-looking multipliers, or we could keep it simple and focus on what actually matters.

For now, we’re going with simple.

Here’s the basic math: Swarmia, for example, costs around $40 per developer per month. Can we save each developer $40 worth of time? Of course — that’s less than 30 minutes of productivity improvement per month. But that’s not really the conversation we should be having.

The value of engineering intelligence platforms comes from several interconnected improvements:

- Quantifying current inefficiency: Before calculating potential gains, you need to understand what inefficiency is costing you right now. Even modest inefficiencies add up: 5% of time waiting for code reviews, 5% in unnecessary meetings, and 3% fighting flaky tests means 13% of developer time lost to friction. For a developer costing $150k annually, that’s $19,500, or nearly $20k per person per year.

- Preventing regretted attrition: Replacing a developer is said to cost between 50% and 200% of their annual salary when you factor in recruiting, onboarding, and lost productivity. If better visibility into developer experience helps you retain even one engineer per year who would have otherwise left, that’s potentially six-figure savings. More importantly, you keep their domain knowledge and team relationships.

- Resource allocation improvements: When you can see that 60% of your engineering time goes to keeping the lights on, you can make informed decisions about technical debt paydown or architectural improvements.

- Leadership time savings: How many hours do your engineering leaders spend creating status reports, running update meetings, and trying to understand what’s actually happening across teams? With the right insights, leaders can reclaim hours per week for strategic work instead of information gathering.

- Financial reporting time savings: For many organizations, the ability to accurately track and report capitalizable software development costs saves hundreds of hours annually. Automated capitalization reporting not only saves time but provides the audit-ready accuracy that manual processes can't match.
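
For the first item on this list, the arithmetic is simple enough to sketch. The snippet below reuses the figures from this section (the 5%, 5%, and 3% friction shares, a $150k annual developer cost, and $40 per developer per month). Treating the friction shares as additive and the function name itself are simplifying assumptions.

```python
def annual_friction_cost(annual_cost_per_developer, friction_shares):
    """Yearly cost of time lost to friction for one developer."""
    return annual_cost_per_developer * sum(friction_shares.values())

# Figures taken from the example in this section
friction_shares = {
    "waiting for code reviews": 0.05,
    "unnecessary meetings": 0.05,
    "flaky tests": 0.03,
}
annual_cost_per_developer = 150_000
platform_cost_per_developer_per_month = 40

friction_cost = annual_friction_cost(annual_cost_per_developer, friction_shares)
platform_cost = platform_cost_per_developer_per_month * 12

# Share of today's friction you'd need to eliminate for the platform to pay for itself
share_to_recover = platform_cost / friction_cost

print(f"Friction cost per developer per year: ${friction_cost:,.0f}")
print(f"Platform cost per developer per year: ${platform_cost:,.0f}")
print(f"Friction to eliminate to break even:  {share_to_recover:.1%}")
```

Swap in your own friction estimates and costs. The point is less the exact result and more that the break-even threshold is a small fraction of the friction most teams already feel.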

What we can’t easily quantify (but what still matters, a lot)

- Time to market acceleration: Getting features to customers even one week earlier can mean capturing market opportunities, beating your competitors, or responding to customer needs faster.

- Developer satisfaction dividends: Happier developers write better code, collaborate more effectively, and stick around longer. They also recruit their talented friends.

- Quality improvements: When teams have time to write tests, refactor problematic code, and review PRs properly, defect rates drop. The cost of prevented production incidents and reduced customer support burden adds up quickly.

- Strategic decision confidence: When leadership can see what’s actually happening in engineering, they make better decisions about hiring, prioritization, and technical investments.

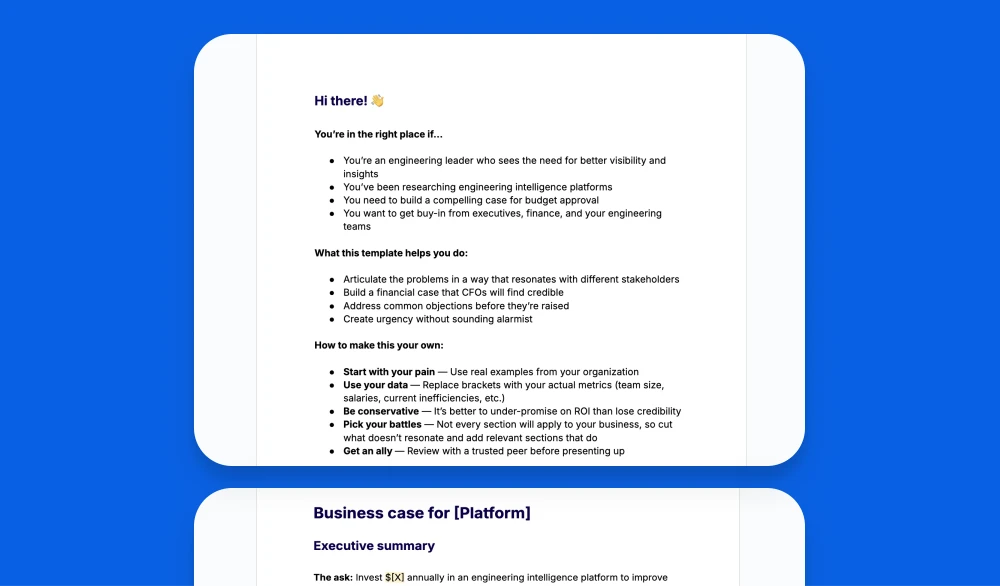

Building your business case

Even the best platform on the market will fail without organizational buy-in. You need engineers to trust it, managers to champion it, and executives to support it.

Executives care about outcomes, not implementation details. Quantify the cost of current inefficiencies and position this as an investment in organizational capability, not just a tool purchase.

In leadership speak, “We’ll deliver the roadmap 20% faster” sounds way more attractive than “We’ll reduce cycle time by 30%”.

Your business case needs to balance quantitative ROI with qualitative benefits. Include the points we spoke about in the last section: current costs of inefficiency, expected efficiency gains, and platform costs versus alternatives. But don’t forget the strategic value: competitive advantage from faster delivery, improved developer retention, and better decision-making.

If you can, tell a story. Start with the pain everyone feels, show a credible path to improvement, and be honest about what it will take to get there.

If you need a starting point, we’ve got you covered — make a copy of our business case template here.

Phew

You’ve made it through a lot — from identifying your organization’s challenges to evaluating platforms, navigating vendor selection, and building stakeholder buy-in.

Now we’ll leave it up to you.

Whatever software engineering intelligence platform you do choose, it should do three things: give you visibility into what’s happening in your engineering organization, help you understand why it’s happening, and empower your teams to improve it.
