Introducing software engineering metrics to your organization

There’s increasing demand — from both senior engineering leaders and non-technical executives — to measure software engineering work more objectively. Done right, software engineering metrics can guide continuous improvement. Done wrong, they create perverse incentives and damage trust.

The push for measurement comes from several directions:

- Identifying bottlenecks. Metrics can surface issues that anecdotal evidence misses. Tracking cycle time might reveal that code reviews consistently drag on for days, or that testing phases eat up more time than anyone realized.

- Making smarter decisions about where to invest. When you know where time actually goes, you can make better calls about hiring, tooling, or whether it’s worth tackling that technical debt.

- Speaking a common language with non-technical stakeholders. Metrics can help translate engineering work into terms that executives and board members can understand and act on.

- Establishing baselines for improvement. You can’t tell if you’re getting better without knowing where you started. Metrics create a foundation for setting goals and tracking progress over time.

- Making delivery more predictable. When applied thoughtfully, metrics improve estimation accuracy and help set realistic expectations about timelines.

- Demonstrating AI tool ROI. Boards and executives are asking hard questions about the money spent on AI coding assistants and other AI-powered tools. Metrics can show whether these investments are paying off in faster delivery, better code quality, or improved productivity — or help identify where they’re not living up to the hype.

This post walks through which metrics matter, how to introduce them effectively, and the common ways metrics programs go sideways.

Which metrics should you track?

No set of metrics captures everything about software engineering, but a few categories have proven useful:

- DORA metrics measure software delivery performance through deployment frequency, lead time for changes, mean time to recovery, and change failure rate. These give you a high-level view of how quickly and reliably you ship changes.

- Code quality metrics look at the codebase itself: cyclomatic complexity, code duplication, test coverage, static analysis results. They highlight maintainability issues or code that’s likely to cause problems.

- Process efficiency metrics track how work moves through your pipeline. Cycle time and throughput help you spot bottlenecks and smooth out your process.

- Business outcome metrics show where engineering time goes and whether that work creates the intended business value. These get at the heart of engineering effectiveness.
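To make the first category concrete, here is a minimal sketch of how the four DORA metrics could be derived from a list of deployment records. The `Deployment` shape, its field names, and the `dora_summary` helper are assumptions made for illustration; your CI/CD and incident tooling will expose this data differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real field names depend on your tooling.
    committed_at: datetime                  # first commit in the change
    deployed_at: datetime                   # when the change reached production
    caused_failure: bool = False            # did this deploy trigger an incident?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments: list[Deployment], period_days: int) -> dict:
    """Summarize the four DORA metrics for deployments made within a period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.caused_failure]
    recoveries = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deploys_per_week": len(deployments) / (period_days / 7),
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # None when no failed deployment has a recorded restore time.
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }


# Made-up sample data covering a two-week period.
example = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 2, 9)),
    Deployment(datetime(2024, 1, 3, 9), datetime(2024, 1, 3, 15),
               caused_failure=True, restored_at=datetime(2024, 1, 3, 17)),
    Deployment(datetime(2024, 1, 6, 9), datetime(2024, 1, 8, 9)),
    Deployment(datetime(2024, 1, 9, 9), datetime(2024, 1, 9, 21)),
]
summary = dora_summary(example, period_days=14)
```

The sketch uses the median for lead time so that one unusually slow change does not dominate the number. That is a design choice, not part of any official definition.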

When you’re starting out, pick metrics that address your biggest pain point. Struggling with unpredictable delivery? Start with DORA metrics. Quality issues keeping you up at night? Focus on code quality. Don’t try to measure everything at once.

Getting started: baselines, data collection, and visibility

Before introducing new metrics, figure out where you stand today and document it. You need a baseline to measure improvement against.
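A baseline can be as simple as a few summary statistics captured before you change anything. The sketch below assumes you already have cycle times in hours for recently completed work items. The `baseline` and `percentile` helpers and the sample numbers are hypothetical.

```python
import math
from statistics import median


def percentile(values: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of the data at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def baseline(cycle_times_hours: list[float]) -> dict:
    """Snapshot of where things stand today, to compare against later."""
    return {
        "sample_size": len(cycle_times_hours),
        "median_hours": median(cycle_times_hours),
        "p90_hours": percentile(cycle_times_hours, 90),
    }


# Made-up cycle times (in hours) for ten completed work items.
recent_cycle_times = [4, 6, 8, 10, 12, 20, 24, 30, 48, 96]
snapshot = baseline(recent_cycle_times)
```

Recording the sample size alongside the numbers makes it easier to judge later whether a change in the median is meaningful or just noise from a small sample.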

Next, set up automated data collection. Seriously — try to avoid spreadsheets that humans fill out. Manual data entry leads to gaps, inconsistencies, and metrics nobody trusts. Set up validation checks, write clear definitions for each metric, and audit the data regularly to catch issues early.
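As one illustration of what validation checks might look like, the sketch below scans records of merged pull requests for missing fields, duplicates, and impossible timestamps. The schema (`id`, `opened_at`, `merged_at`) and the `validate` function are assumptions for the example, not the format of any particular tool.

```python
from datetime import datetime

# Hypothetical schema for a merged pull request record.
REQUIRED_FIELDS = ("id", "opened_at", "merged_at")


def validate(records: list[dict]) -> list[str]:
    """Return a human-readable list of data problems; an empty list means clean data."""
    problems = []
    seen_ids = set()
    for record in records:
        record_id = record.get("id") or "<missing id>"
        missing = [f for f in REQUIRED_FIELDS if record.get(f) is None]
        if missing:
            problems.append(f"{record_id}: missing {', '.join(missing)}")
            continue  # the remaining checks need all fields present
        if record_id in seen_ids:
            problems.append(f"{record_id}: duplicate record")
        seen_ids.add(record_id)
        if record["merged_at"] < record["opened_at"]:
            problems.append(f"{record_id}: merged before opened")
    return problems


# Made-up records with one clean entry and three deliberate problems.
records = [
    {"id": "PR-1", "opened_at": datetime(2024, 1, 1), "merged_at": datetime(2024, 1, 2)},
    {"id": "PR-2", "opened_at": datetime(2024, 1, 3), "merged_at": None},
    {"id": "PR-1", "opened_at": datetime(2024, 1, 1), "merged_at": datetime(2024, 1, 2)},
    {"id": "PR-3", "opened_at": datetime(2024, 1, 5), "merged_at": datetime(2024, 1, 4)},
]
problems = validate(records)
```

Running a check like this on a schedule, and reviewing what it flags, is one way to do the regular audit described above.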

Make metrics visible to everyone who needs them. Create dashboards, send regular reports, or integrate metrics into your existing project management tools. The point is making data accessible and easy to understand.

Here’s a critical rule: don’t track anything at the leadership level that teams and individuals can’t see. Hidden metrics breed distrust and lead to bad data. Team members need to understand what you’re measuring, why it matters, and how their work connects to these numbers.

Work metrics into your existing processes rather than treating them as extra work. Review metrics in retrospectives, use them in project planning, factor them into team evaluations. Make measurement feel like a natural part of how things get done.

As you roll this out:

- Pick metrics that align with team and organizational goals

- Use metrics to drive improvement, not to punish people

- Reassess regularly whether your metrics still make sense

- Combine quantitative data with qualitative insights like developer surveys

Start small, involve engineers directly, and iterate based on what you learn.

Building ownership and setting goals

Push ownership of metrics down to the team level. When team members feel accountable for the numbers, they’re more likely to act on them. This might mean assigning metric champions or creating cross-functional groups to tackle specific challenges. Let your team propose and lead improvements based on what the data shows.

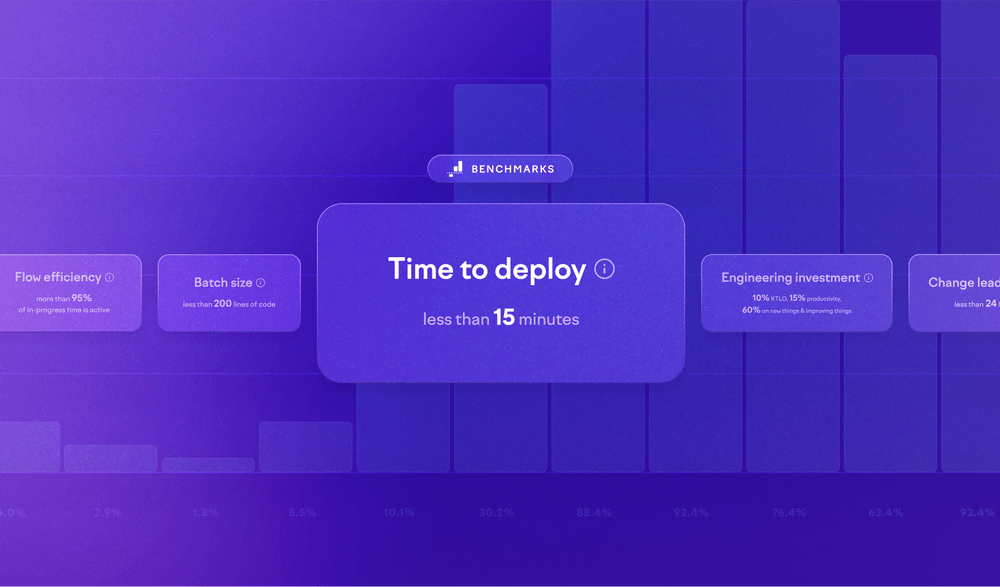

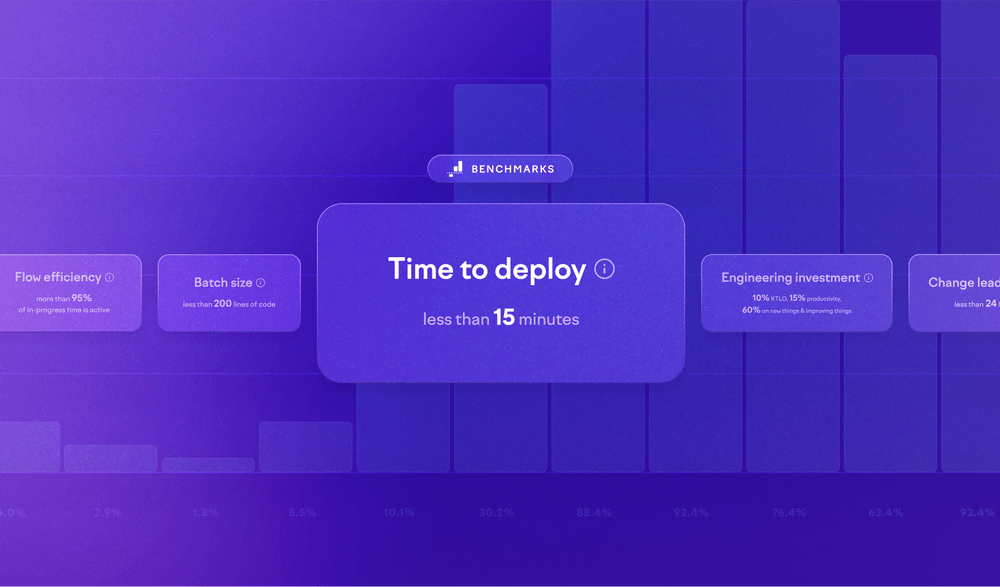

When setting goals, look at your baseline measurements, check industry benchmarks, and account for your organization’s specific constraints. Focus on steady progress rather than dramatic overnight changes. Some teams will have an easier time improving than others based on their starting point and circumstances.

Review metrics weekly or every two weeks at the team level, and monthly or quarterly at senior levels. Look for trends, outliers, and connections between different metrics. Ask what’s driving the numbers, both the good and the bad. This analysis should inform your decisions and help you identify where to focus improvement efforts.

When you spot an opportunity for improvement, run experiments. If you’re trying to reduce bugs, test different code review approaches or automated testing tools. Compare the metrics before and after to see what actually works before rolling changes out widely.
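A before-and-after comparison does not need much machinery. The sketch below compares the median of a metric across two periods and refuses to answer when either sample is too small. The `compare_periods` helper, the `min_samples` threshold, and the bug counts are hypothetical, and a shift in the median alone does not prove the experiment caused it.

```python
from statistics import median


def compare_periods(before: list[float], after: list[float], min_samples: int = 5) -> dict:
    """Compare medians of a metric before and after a process change."""
    if len(before) < min_samples or len(after) < min_samples:
        raise ValueError("too few samples to compare meaningfully")
    before_median = median(before)
    after_median = median(after)
    if before_median == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return {
        "before_median": before_median,
        "after_median": after_median,
        # Negative means the metric went down after the change.
        "relative_change": (after_median - before_median) / before_median,
    }


# Made-up bug counts per sprint, before and after trying a new review approach.
bugs_before = [8, 6, 9, 7, 10, 8]
bugs_after = [6, 5, 7, 6, 4, 6]
result = compare_periods(bugs_before, bugs_after)
```

Here the median drops from 8 to 6 bugs per sprint, a relative change of -0.25. Whether that is enough to justify a wider rollout still depends on context, such as what else changed during the same sprints.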

Celebrate when you hit your goals. Recognition reinforces that the metric-driven approach has value. Encourage engineers to fix small productivity issues as part of regular work. When metrics reveal shortcomings, treat them as learning opportunities rather than failures.

Remember that metrics exist to drive real improvements to your engineering processes and business outcomes. Keep asking how metrics and improvements connect to broader business objectives and software quality.

When introducing engineering metrics goes wrong

Metrics can backfire in predictable ways. Here are the most common mistakes:

- Fixating on numbers. It’s easy to lose sight of what metrics represent when you’re focused on hitting specific targets. Metrics inform decisions — they don’t make decisions for you.

- Ignoring context. Raw numbers rarely tell the whole story. A drop in productivity might signal a problem, or it might reflect necessary training time or someone’s extended leave. Consider team composition, project complexity, and external factors when interpreting data.

- Incentivizing the wrong behaviors. If you measure lines of code, people will write verbose code. If you overemphasize bug counts, developers might stop reporting issues. Make sure your metrics align with what you actually want and review regularly whether they’re driving the right behaviors.

- Failing to evolve as things change. As your team grows, your stack evolves, or business priorities shift, yesterday’s useful metrics can become irrelevant or counterproductive. Regularly reassess whether metrics still provide meaningful insights. Retire metrics that no longer serve you and introduce new ones that reflect current goals.

A few metrics to avoid

Velocity metrics using story points or ticket counts get misused constantly. Story points are subjective and vary wildly between teams or even between sprints. They don’t account for quality or long-term impact. Tracking ticket counts encourages breaking work into tiny, meaningless chunks or knocking out easy tasks instead of tackling hard, important work.

These metrics push teams to inflate their “velocity” rather than deliver value, which means people rush through tasks without thinking about code quality, maintainability, or user needs. Over time, this damages both productivity and quality.

Technical debt metrics are tricky to quantify. Code churn can mislead — high churn might mean active improvement rather than problematic code. Low churn in legacy code might hide significant debt that nobody wants to touch (or that isn’t worth fixing).

Take a more nuanced approach to technical debt. Do regular code reviews, assess architecture, and talk with your team about what slows them down or increases bug risk. Qualitative feedback from developers often provides better insights than automated metrics.

Focus on outcomes, not outputs. Metrics tied to business goals and user satisfaction — feature usage, system reliability, time to market — tell you more about engineering effectiveness than activity metrics ever will.

Too many metrics is its own problem

An overabundance of metrics leads to information overload. Teams can’t figure out what matters when they’re drowning in data points. This creates analysis paralysis where information hinders rather than helps decision-making.

Maintaining and analyzing too many metrics also drains time and resources that could go toward actual development work. Worse, excessive measurement can trigger Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure.” Teams start gaming the system instead of improving quality or productivity.

Pick a small set of key indicators that reflect your specific goals and challenges. Review and adjust them regularly. A short list you know well lets you use data effectively without getting overwhelmed or distracted by measurement theater.

Metrics as a tool for improvement

When used thoughtfully, metrics provide insights into processes, productivity, and quality that drive continuous improvement and better decision-making.

Success with metrics requires careful selection, solid data collection systems, regular analysis, and awareness of potential pitfalls. Whether you’re just starting or refining your approach, stay focused on what you’re trying to accomplish and remain open to course corrections.

Start with one or two metrics that address your biggest challenge. Get those working well, learn from what you discover, and expand from there. If you treat measurement itself as an iterative process, not a one-time implementation, you’re bound to see progress.
